INTRODUCTION

Throughout the history of education, assessment has been essential for learning. Its main role has historically been as a mechanism for teachers to support learning and enhance students’ capabilities and knowledge (Hayward, 2015). Nevertheless, following the educational reforms introduced since the 1990s, and under the influence of New Public Management and neoliberal practices, assessments are now marked by competition and performance mechanisms (Verger, Parcerisa et al., 2019) that are endangering the original essence of evaluation and, consequently, of education. This phenomenon, which Ball (2003, 2012c) terms ‘performativity’, narrows and fragments learning (Wyse et al., 2015) and has a built-in over-preoccupation with metrics, measurement, and numbers (Ball, 2015). Thus, it obstructs and limits the possibilities of education, rather than expanding and enriching them (Ball, 2012a, 2012b). These trends endanger the learning processes that should occur in classrooms (Madaus & Russell, 2010) and influence the very essence of human beings (Ball, 2017).

Nowadays, what happens in a great number of classrooms internationally depends on a series of factors that are not based on the needs, interests and capabilities of the children in those classrooms (Hoyuelos & Cabanellas, 1996). Instead, classroom practices are determined by high-stakes tests (HSTs), or large-scale assessments (LSAs) in general, and the standardised processes that accompany them (Ball, 2003, 2017; Madaus & Russell, 2010). Thus, rather than supporting the learning that might enhance capabilities and create authentic knowledge, assessments have reduced teachers to preparing students for the tests (Berliner, 2011). The reason for this is that assessments have become an end in themselves, with a focus on accountability (Hayward, 2015) and rankings (Jones & Ennes, 2018). Likewise, test results are tied to judgements of school and teacher performance and, as long as this dynamic remains unchanged, assessment for learning and all classroom experiences will be determined by it (Hayward, 2015).

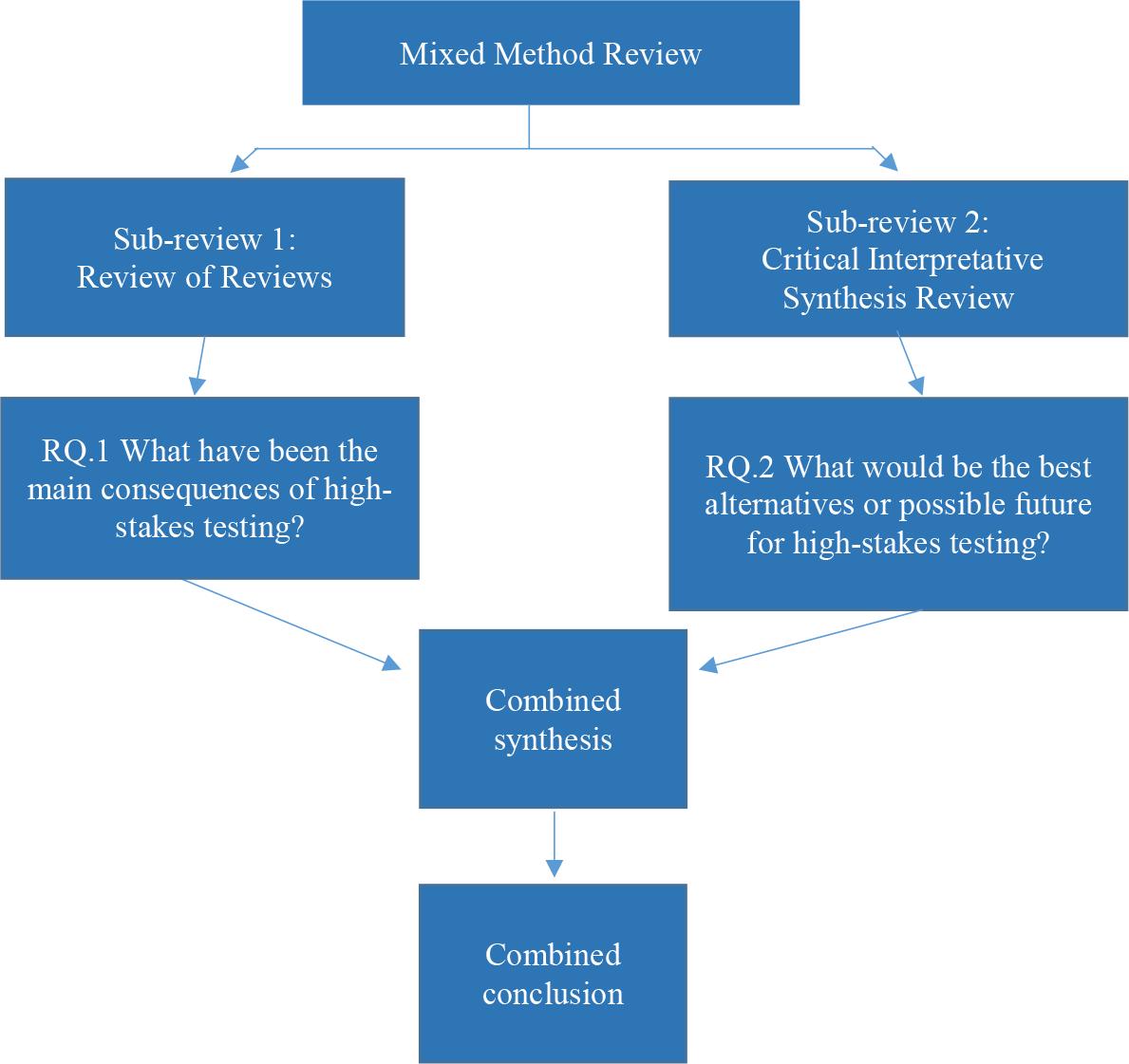

Thus, although HSTs fulfil an accountability role that is necessary for society (Bovens et al., 2008) because of the significant data they provide (Schillemans et al., 2013), their structure and the stakes involved mean that they are endangering education, people’s subjectivities, learning and the formative meaning of education in the contexts where they take place (Madaus & Russell, 2010; Helfenbein, 2004; Schillemans, 2016; Falabella, 2021). Therefore, the present study will explore the strengths and shortcomings of this mechanism and possible ways to improve the current situation. This rationale and concern translate into a main question, which will be the driving force of this research: How can the future of large-scale assessment serve as both a facilitator for enhanced learning and a robust mechanism for ensuring quality within education systems, and thus improve the current large-scale assessments? This will be done using a Mixed Method Systematic Literature Review which, on the one hand, will enquire into the main consequences of HST through a Review of Reviews and, on the other hand, will explore the best alternatives or possible future for high-stakes testing through a Critical Interpretative Synthesis Review.

HISTORY OF HIGH-STAKES TESTING

The history of high-stakes testing begins with a significant change in governance that occurred in the 1990s, initially in some Western nations (Murphy, 2021) such as the United States, the United Kingdom, and European countries (Levi-Faur, 2012; Lynn, 2012). Over time, it spread to other territories, such as Latin America (Verger, Fontdevila et al., 2019; Rhodes, 1996). This change meant that governments altered their way of governing, moving from a structure in which power and control were centralised to a decentralised one in which both were handed to new entities such as markets, political agencies or institutions, regional governments, and non-governmental organisations, among others (Levi-Faur, 2012; Rhodes, 2007). Scholars named this phenomenon “New Governance” (Lynn, 2012).

This New Governance (Lynn, 2012) was characterised by “steering at a distance” (Murphy, 2021, p. 53), which was distinguished by regulation, networking, and the creation of standards (Levi-Faur, 2012). Along with this expansion of government and decentralisation came the “problématique” (Levi-Faur, 2012, p. 13). This required that, in order to maintain legitimacy and effectiveness, and preserve the quality of services (Börzel, 2010; Schillemans et al., 2013; Link & Scott, 2010), essentially to demonstrate “Good Governance” (Murphy, 2021, p. 33), many governments had to opt for using mechanisms that the academy has called “managerial technics” (Ackerman, 2004; Hood & Dixon, 2016). These mechanisms or strategies became known as “new public management, or NPM for short” (Murphy, 2021, p. 42).

One of the measures for safeguarding “Good Governance” is high-stakes testing (Nichols & Berliner, 2007), which is a “policy instrument” (Levi-Faur, 2012) that seeks to measure the “learning” or performance of students, teachers, and schools through standardised tests to evaluate education and ensure its quality (Nichols & Berliner, 2007; Muller, 2018). Furthermore, this type of assessment is characterised by having “high stakes” (Jones & Ennes, 2018), because its results have crucial impacts on educational agents, such as the promotion of educators and students, the adjustment of salaries, and the allocation of resources (Jones & Ennes, 2018; Gregory & Clarke, 2003).

Naturally, there are multiple causes and factors that influence the consolidation of high-stakes testing. Along with the evolution of governance (Levi-Faur, 2012), there is another aspect involved, the introduction of Western European mass education in the 19th century. This involved the incorporation and expansion of state-controlled, compulsory general education (Soysal & Strang, 1989) that, according to some authors, was linked to economic development and growth (Zinkina et al., 2016; Westberg et al., 2019). This historical phenomenon included the United States (Beadie, 2019) and then, in the late 19th and early 20th centuries, was introduced into Latin America (Frankema, 2009), Australia, New Zealand and, to a lesser extent, into Asia and Africa (Zinkina et al., 2016).

Mass education was crucial to the formation of national education systems and, thus, to constructing and unifying their national policies (Ramirez & Boli, 1987); it is considered to be “a by-product of industrialization” (Green, 2013, p. 47). Between the 19th and 20th centuries, many changes occurred in education systems due to mass education, including the unification of the curriculum, the regulation of entry requirements at different levels of the system and, most relevant for the present study, a focus on reproduction, mechanisation, and memorisation (Benavot et al., 1991). Subsequently, national assessment measures arose (Green, 2013) and systematisation was essential to demonstrate abilities and distinguish people, giving prominence to meritocracy and competence (Green, 2013).

Additionally, mass education systems entailed the states taking a fundamental role in education: not only in funding but also in regulation and administration which, as mentioned above, gave rise to national curricular and assessment structures (Ramirez & Boli, 1987). Since then, these evaluation systems have evolved and, in the middle of the 19th century, schools started applying standardised tests that were purely memory-based (predominantly oral) (Huddleston & Rockwell, 2015). With the convergence of international influences and historical events, national assessments emerged. Then, with the Second World War, international measurement systems were formalised and spread (Kamens & McNeely, 2010). Although the timing of countries’ adoption of these dynamics varies, and some countries have not adopted these mechanisms, LSAs and HSTs have been increasingly expanding their scope, nationally and internationally, and they are shaping each other (Verger, Parcerisa et al., 2019).

Later, these large-scale assessments and policy instruments progressively began to take more standardised structures as a result of education reforms and policies (Ball, 2003). Over time, they were given functions that have significant impacts on individuals and institutions, and so these examinations came to have more at stake (Nichols & Berliner, 2007). Thus, performance began to determine the granting of diplomas, access to the next levels of education and teachers’ salaries and, more importantly, this new trend created rankings and league tables on which many reputations and billions of pounds are based (Zhao, 2014). Consequently, numbers, statistics, standards, measurements, and performativity became dominant forms in modern society and, with them, high-stakes testing (Ball, 2003; Gregory & Clarke, 2003; Zhao, 2014; Muller, 2018).

METHODOLOGY

The methodology used in the present study is a Systematic Literature Review (SLR), which gives an overview of what is known about a specific topic (Gough et al., 2013). Specifically, this is a Mixed Method SLR because it “has sub-reviews that ask questions about different aspects of an issue” (Gough et al., 2012, p. 6). The first sub-review was a Review of Reviews (Gough et al., 2017), which sought to establish what the main consequences of HSTs have been. For clarity, this research question was assigned the number 1 (RQ.1). The second sub-review was a Critical Interpretative Synthesis Review, which summarises data and challenges and questions taken-for-granted assumptions (Dixon-Woods et al., 2006, p. 4), and which seeks to answer what the best alternatives or possible future for HSTs would be. This research question was assigned the number 2 (RQ.2). Both reviews aim to answer the main question: how can the future of large-scale assessment serve as both a facilitator for enhanced learning and a robust mechanism for ensuring quality within education systems and, thus, improve the current LSAs?

This SLR used a mixed methodology because finding patterns in the papers, analysing the data, and creating codes were qualitative tasks, whereas associating codes with the patterns and then quantifying them in a graph was quantitative. For a clearer understanding of the methodology, Figure 1, based on Gough et al. (2012), presents the structure of the review.

Source: Author’s elaboration (2024).

FIGURE 1 Structure of the Mixed Method Review conducted in the present study, based on the proposal of Gough et al. (2012)

Stages of the Systematic Literature Review

The stages followed to carry out this Mixed Method SLR are based on the proposals of University College London (UCL) (2023) and Gough et al. (2013). In the first sub-review, 15 papers were selected; in the second sub-review, 25 papers were selected. The commands used for the searches are detailed in Appendices A and B. The second sub-review required 25 searches, multiple synonyms and the reading of 200 abstracts. Nevertheless, because this second search yielded few papers relevant to the question, the strategy of consulting experts proposed by Gough et al. (2013) was used. Hence, Professor Clive Dimmock and Dr. Clara Fontdevila provided articles that they judged relevant to the search. More details are in Appendix C.

Inclusion and exclusion criteria used for the selection of the studies are detailed below, in Table 1. It is important to note that the literature review considered exclusively publications written in English.

TABLE 1 Criteria based on Gough et al. (2012) and UCL (2023)

| SUB-REVIEW | INCLUSION CRITERIA | EXCLUSION CRITERIA | INCLUSION CRITERIA (IN COMMON) | EXCLUSION CRITERIA (IN COMMON) |
|---|---|---|---|---|
| 1) Review of Reviews | • Only Systematic Literature Reviews were selected | • Every paper not related to the effects or consequences of high-stakes testing (or other synonyms) | • Geographic range: international • Language: English • Papers were selected based on their relevance (capacity of response) to their corresponding research questions • Time scope: 1990 to the present day, 2023 • Must contain valid ethical considerations (Petticrew & Roberts, 2006) • Only includes peer-reviewed papers • Only studies focused on high-stakes tests on students • Papers could consider effects on children and teachers | • Papers focused on exit examinations • Focused on tertiary or higher education • Focused on impacts on computer assisted testing and evaluation of teachers |
| 2) Critical Interpretative Synthesis Review | • All types of data collection (qualitative and quantitative) • Studies must have a robust methodology and detailed ethical considerations | | (as above) | (as above) |

Source: Author’s elaboration (2024).

Quality appraisal

Firstly, the primary criterion for ensuring quality was the relevance of the selected studies to the research questions (Gough et al., 2013). Secondly, the rigour of each study’s methodology was considered (Gough et al., 2013). Thirdly, only peer-reviewed papers were selected. Fourth and lastly, only papers from journals were included; other documents, such as blogs or grey literature, were excluded to ensure reliability, because they can be biased, incomplete, and methodologically challenging to evaluate (Hopewell et al., 2005).

Synthesis and data analysis

The present study examined the data, looked for patterns in them, created codes, and assigned values to those codes. Subsequently, the codes were organised into charts and interpreted (Gough et al., 2013). The conversion of the data from qualitative to quantitative differed between the two reviews. In sub-review 1, which measures consequences and their weight, scores were assigned according to how rigorous and robust the papers were. To measure this, the following five quality criteria were selected:

1) Number of studies reviewed.

2) Presents criteria that safeguard quality.

3) Describes the database used.

4) Presents the words used for the search.

5) States the inclusion and exclusion criteria.

Thus, each paper was assigned a score according to the number of criteria it met. When associated with the consequence codes, these scores give a final, summative score and, therefore, a weight for each paper. The details of score allocation are given below:

3 points: if it meets 4 or more criteria.

2 points: if it meets 3 criteria.

1 point: if it meets 2 or fewer of the above criteria.
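The allocation rule above can be illustrated with a short sketch. This is not the procedure as implemented in the study, only one possible reading of it, under the assumption that a consequence's total score is the sum of the scores of the papers that mention it. The function names, paper labels and codes below are hypothetical and are used purely for illustration.

```python
from collections import defaultdict


def quality_score(criteria_met: int) -> int:
    """Map the number of quality criteria a paper meets (0 to 5) to a score of 1 to 3."""
    if not 0 <= criteria_met <= 5:
        raise ValueError("criteria_met must be between 0 and 5")
    if criteria_met >= 4:
        return 3
    if criteria_met == 3:
        return 2
    return 1  # 2 or fewer criteria


def consequence_weights(papers: dict) -> dict:
    """Sum paper scores per consequence code.

    `papers` maps a paper label to (criteria_met, list of consequence codes).
    Assumption: each paper contributes its score once to every code it mentions.
    """
    totals = defaultdict(lambda: {"papers": 0, "score": 0})
    for criteria_met, codes in papers.values():
        score = quality_score(criteria_met)
        for code in set(codes):  # count each code once per paper
            totals[code]["papers"] += 1
            totals[code]["score"] += score
    return dict(totals)


# Hypothetical data, not taken from the reviewed papers.
example_papers = {
    "paper_a": (5, ["curriculum narrowing", "teaching to the test"]),
    "paper_b": (3, ["curriculum narrowing"]),
    "paper_c": (1, ["teaching to the test"]),
}
weights = consequence_weights(example_papers)
```

In this hypothetical example, "curriculum narrowing" is mentioned by two papers scoring 3 and 2, so its total score is 5, which is the kind of frequency and score pair reported for each consequence in the findings.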

By contrast, in sub-review 2, it was the proposals themselves that mattered, rather than the robustness of the research behind them. Therefore, all proposals were given the same validity.

FINDINGS

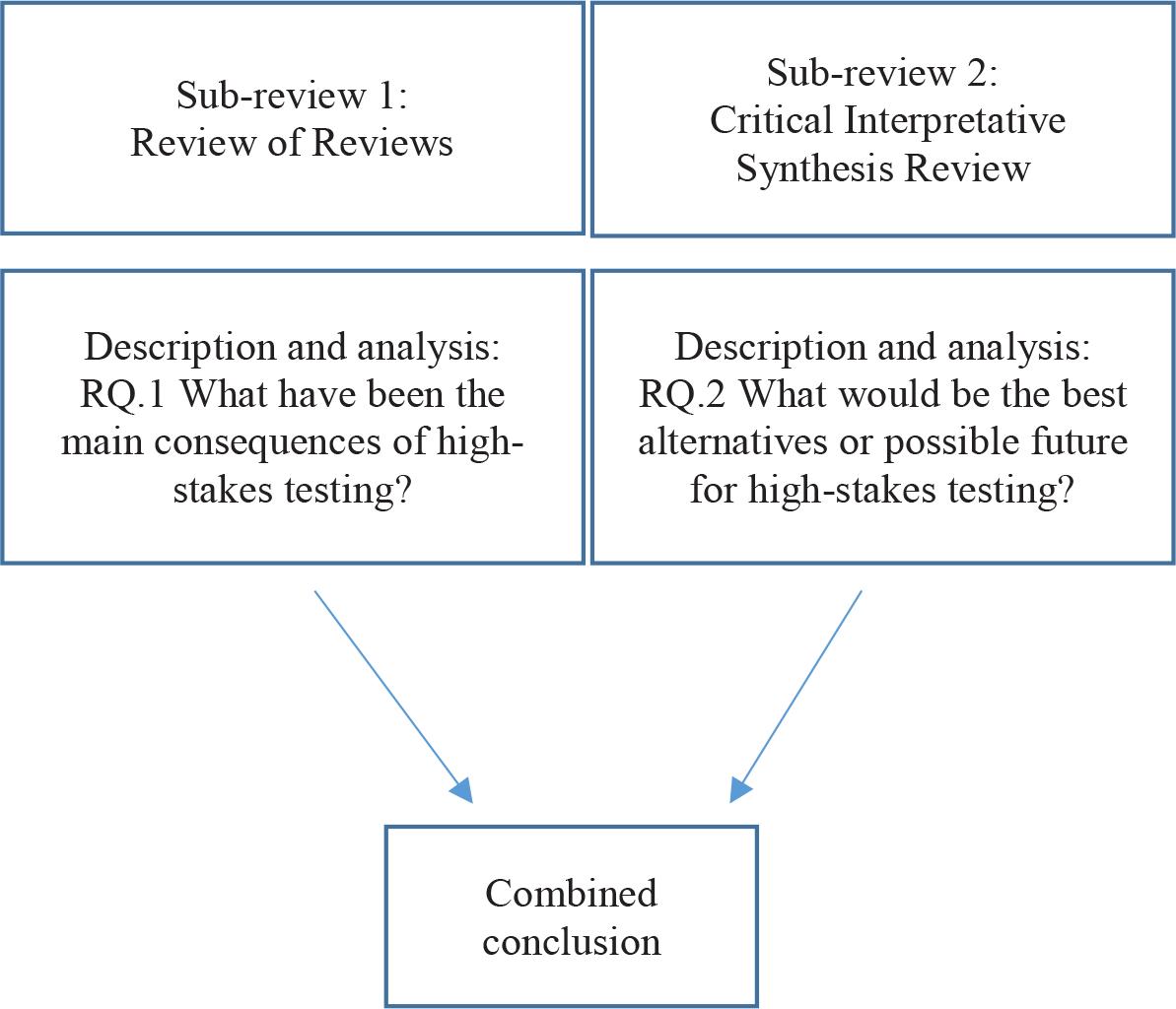

Initially, the findings will be explained for each research question, separately. Finally, both research questions will be combined in the discussion and conclusion. This organisation is illustrated in Figure 2.

Source: Author’s elaboration (2024).

FIGURE 2 Organisation of the presentation and analysis of results

Findings RQ.1 - What have been the main consequences of high-stakes testing?

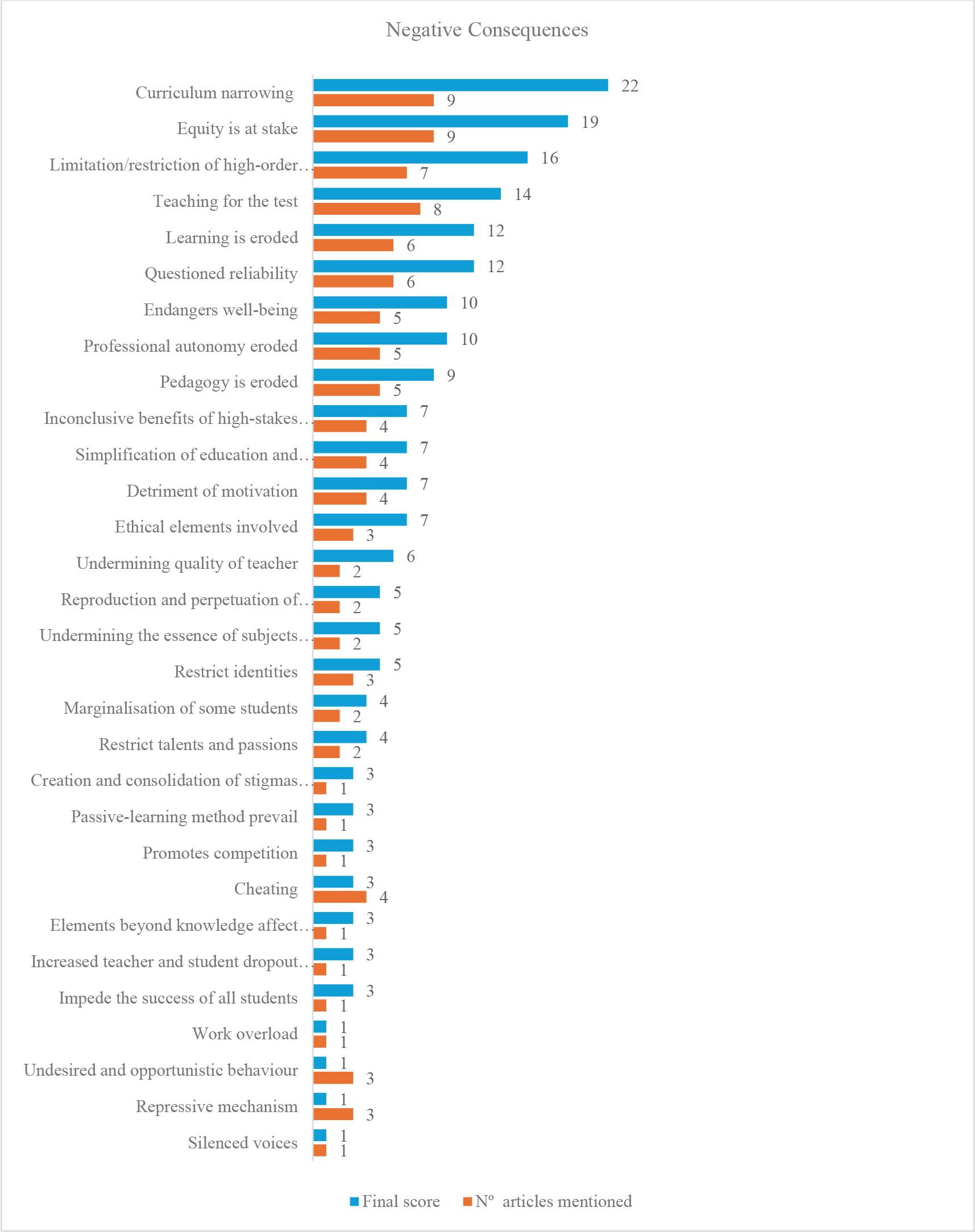

To identify predominant consequences in the existing literature and to determine those articles that provided substantial support and empirical evidence, all articles were assessed according to their robustness. The following graph, presented in Figure 3, displays the frequency of each consequence mentioned in the papers and, additionally, it assigns a score from 1 to 3 that reflects the level of rigour and robustness. These scores are based on the criteria outlined in the Synthesis and data analysis section.

Source: Author’s elaboration (2024).

FIGURE 3 Chart obtained from sub-review 1: Review of Reviews focused on answering RQ.1 - What have been the main consequences of high-stakes testing?

The results of the Review of Reviews suggest that the consequences with the highest scores, and those repeated most frequently, were: curriculum narrowing (Acosta et al., 2020; Au, 2009; Sigvardsson, 2017; Boon et al., 2007; Verger, Fontdevila et al., 2019; Verger, Parcerisa et al., 2019; Anderson, 2012; Cimbricz, 2002; Emler et al., 2019; Harlen & Crick, 2003), endangering equity (Acosta et al., 2020; Au, 2009; Boon et al., 2007; Bacon & Pomponio, 2023; Hamilton et al., 2013; Verger, Parcerisa et al., 2019; Anderson, 2012; Emler et al., 2019; Lee, 2008), limitation or restriction of high-order abilities (Acosta et al., 2020; Au, 2009; Verger, Fontdevila et al., 2019; Verger, Parcerisa et al., 2019; Anderson, 2012; Emler et al., 2019), teachers teaching to the test (Acosta et al., 2020; Au, 2009; Hamilton et al., 2013; Cimbricz, 2002; Ehren et al., 2016; Emler et al., 2019; Nichols, 2007; Harlen & Crick, 2003), learning eroded by the HST (Boon et al., 2007; Hamilton et al., 2013; Anderson, 2012; Cimbricz, 2002; Emler et al., 2019; Nichols, 2007), and the questionable reliability of the tests (Acosta et al., 2020; Verger, Fontdevila et al., 2019; Hamilton et al., 2013; Cimbricz, 2002; Lee, 2008; Nichols, 2007).

In further detail, coding yielded a total of 30 negative consequences. Similar codes were organised into six themes: (1) the curriculum and what happens in the classroom, (2) how HSTs have influenced teachers, (3) the consistency and reliability of high-stakes testing, (4) school culture, (5) equity, and (6) influence on people’s subjectivity. Firstly, the studies reviewed suggest that education has been affected because high-stakes testing has led to curricular narrowing (Acosta et al., 2020; Au, 2009; Boon et al., 2007; Verger, Fontdevila et al., 2019; Verger, Parcerisa et al., 2019; Anderson, 2012; Cimbricz, 2002; Emler et al., 2019; Harlen et al., 2002). Furthermore, this reduction implies a view of the curriculum in its broad spectrum, considering the teaching, content and skills that are developed, as well as the interactions that take place (and do not take place) within the classroom. A concrete example of this is presented by Au (2009), regarding the situation in the United States: “71% of the districts reported cutting at least one subject to increase time spent on reading and math as a direct response to the high-stakes testing mandated under NCLB” (Renter et al., 2006, as cited in Au, 2009, p. 46).

Additionally, several authors stress that high-order abilities such as critical, divergent, and creative thinking do not have priority in the classroom (Acosta et al., 2020; Au, 2009; Verger, Fontdevila et al., 2019; Verger, Parcerisa et al., 2019; Anderson, 2012; Cimbricz, 2002; Emler et al., 2019), which reduces teaching (Bacon & Pomponio, 2023; Ehren et al., 2016; Emler et al., 2019) to mere repetition and memorisation of facts (Au, 2009). Increasingly, teachers have focused on preparing students for the tests (Emler et al., 2019; Nichols, 2007) and, consequently, subjects that are not assessed in the high-stakes tests (Harlen & Crick, 2003), such as art, music, sports and poetry, among others, have diminished in importance (Au, 2007), to the detriment of learning (Boon et al., 2007; Hamilton et al., 2013; Anderson, 2012; Cimbricz, 2002; Emler et al., 2019; Nichols, 2007). Acosta et al. (2020, p. 536) highlight a student’s opinion: “We’re only learning the content of the tests and not what we’re supposed to know and go to college”.

Secondly, these negative consequences have also had an effect on teachers, their profession and professionalism. Under strong rewards and sanctions, some teachers have resorted to gaming or cheating (Ehren et al., 2016; Emler et al., 2019; Hamilton et al., 2013). HST has led several educators to leave their schools (Boon et al., 2007), has increased their workload (Ehren et al., 2016) and competitiveness (Verger, Parcerisa et al., 2019), has endangered teachers’ well-being (Anderson, 2012; Cimbricz, 2002; Ehren et al., 2016; Emler et al., 2019; Harlen & Crick, 2003), has made teachers go against their beliefs and values (Ehren et al., 2016) and lose their motivation (Hamilton et al., 2013; Emler et al., 2019), and has eroded the essence of subjects and teaching (Sigvardsson, 2017; Anderson, 2012), among other elements presented in Figure 3. Moreover, along with the harm to teachers, there is the phenomenon of de-professionalisation of teachers, which implies the loss of their professional autonomy (Verger, Fontdevila et al., 2019; Anderson, 2012; Cimbricz, 2002; Ehren et al., 2016; Emler et al., 2019). This has also contributed to the detriment of the quality of teachers (Verger, Parcerisa et al., 2019; Anderson, 2012).

Thirdly, HST fails in the consistency of the information that it provides about learning; 6 of the 15 studies (with a total score of 12) declare that the reliability of the mechanisms is questionable (Cimbricz, 2002; Lee, 2008; Nichols, 2007; Hamilton et al., 2013; Verger, Fontdevila et al., 2019; Acosta et al., 2020); 4 papers with a total of 7 points mention that the studies on benefits are inconclusive (Hamilton et al., 2013; Verger, Parcerisa et al., 2019; Lee, 2008; Nichols, 2007); and 1 study with 3 points, therefore a strong study, declares that performance measurement and scores reflect outcomes that are not directly related to learning and knowledge (Boon et al., 2007). Information is restricted, as it is limited in most cases by multiple-choice questions or closed-ended questions and, in this scenario, HST is not able to provide sufficient information to assess learning and its complexity (Boon et al., 2007; Acosta et al., 2020).

Fourth, studies suggest that HST has generated a competitive culture in schools, and the education system in general (Verger, Fontdevila et al., 2019), where teachers have even turned against some students who perform poorly, marginalising them (Bacon & Pomponio, 2023; Hamilton et al., 2013). As a result, education, teachers, learning, and pedagogy itself, have been affected (Au, 2009; Hamilton et al., 2013; Anderson, 2012; Cimbricz, 2002; Emler et al., 2019).

Fifth, there is equity, which was mentioned in 9 of the total of 15 papers (which is substantial in relation to the other consequences). Those studies indicate that equity is at risk due to HST, because tests tend to perpetuate injustice and inequalities (Emler et al., 2019; Bacon & Pomponio, 2023; Harlen & Crick, 2003) that affect students with disabilities, those who are English learners, and those from disadvantaged communities (Boon et al., 2007). Studies also suggest that HST increases racial and socio-economic disparities (Acosta et al., 2020; Emler et al., 2019). Emler et al. (2019, p. 589) provide more details noting that,

LSAs have consistently revealed large gaps in scores among different groups of students. The gaps are primarily a result of socioeconomic and racial inequality and other factors that schools and teachers cannot control. . . . In other words, the efforts to close the achievement gap have widened the opportunity gap, creating more inequity and injustice.

Sixth, one of the most striking consequences of HST is the erosion of the human complexity of students, teachers, and education itself (Emler et al., 2019; Acosta et al., 2020; Bacon & Pomponio, 2023; Ehren et al., 2016). Due to standardisation and homogenisation (Emler et al., 2019), the narrowing of the curriculum and teaching (Acosta et al., 2020; Boon et al., 2007), the prevalence of passive learning methods (Anderson, 2012), and the restriction to low-level cognitive skills such as memorisation and repetition (Au, 2009), subjectivity, diversity, and individualisation have been put at risk. This is mentioned in four studies with a total score of seven (Au, 2009; Emler et al., 2019; Harlen & Crick, 2003; Hamilton et al., 2013). Additionally, other studies declare that high-stakes testing limits the possibility for all students to succeed (Acosta et al., 2020) and that it restricts the development of some talents and passions (Emler et al., 2019; Harlen & Crick, 2003).

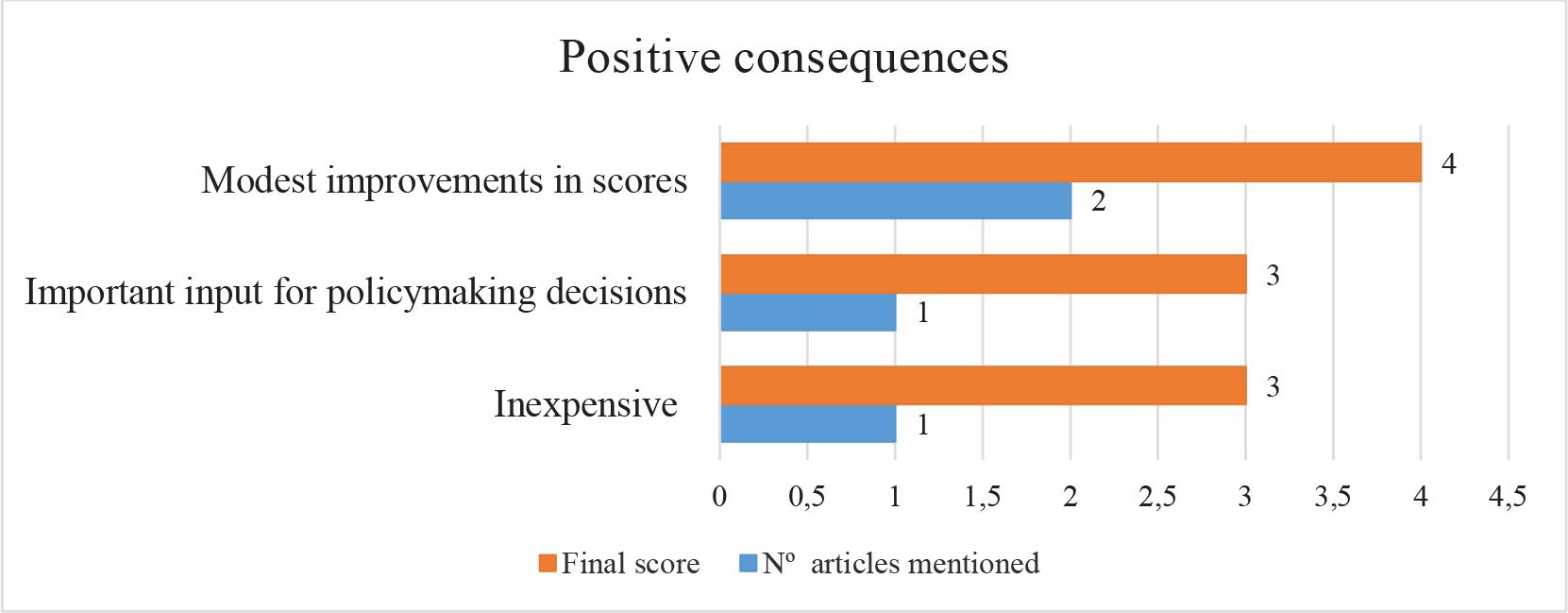

The three positive consequences identified in Figure 4 were found by reviewing these 15 papers. These consequences were drawn from 4 different texts, 3 of which are three-point texts, and therefore are strong, rigorous, and robust studies. This demonstrates that these implications are legitimate, important and should be considered (Verger, Fontdevila et al., 2019; Verger, Parcerisa et al., 2019; Lee, 2008; Anderson, 2012).

Source: Author’s elaboration (2024).

FIGURE 4 Chart obtained from sub-review 1: Review of Reviews focused on answering RQ.1 - What have been the main consequences of high-stakes testing?

As shown in Figure 4, these studies present positive consequences of HST related to structural and functional elements. They are centred mainly on political elements, policy-making and financial implications (Verger, Fontdevila et al., 2019; Verger, Parcerisa et al., 2019) which aim for transparency and good governance, and indicate that high-stakes testing responds to a need, especially in terms of accountability (Anderson, 2012). In addition, it is relevant to note that the current large-scale assessment mechanisms are inexpensive in comparison to other options, and therefore attractive for policymaking in different countries (Verger, Fontdevila et al., 2019).

Another consequence is a moderate increase in scores present in two papers (Lee, 2008; Anderson, 2012). This is controversial because it raises the question of how those scores were increased or what those scores mean. These increases in scores could be related to one of the phenomena presented in the negative consequences such as cheating, teaching to the test, the narrowing of the curriculum, among others (Hamilton et al., 2013; Boon et al., 2007). There are critical elements that weaken the argument that favours the improvement in performance, for example, the questions on its reliability (6 papers, score of 12) (Verger, Fontdevila et al., 2019), and the inconclusive beneficial effects that several papers (4 with a score of 7) yielded (Verger, Parcerisa et al., 2019; Hamilton et al., 2013). The reality is that there are contradictory results.

In summary, HST has strengths that should be considered when thinking about future possibilities for these types of evaluation. Although HST is able to respond to a valid need for objectivity, these mechanisms have substantial, unintended consequences with negative effects on education, teachers, students, their learning and individualities, and compromise ethical and equity factors. Therefore, for the future, it is necessary to reconcile strengths with the improvement of weaknesses.

Findings RQ.2 - What would be the best alternatives or possible future for high-stakes testing?

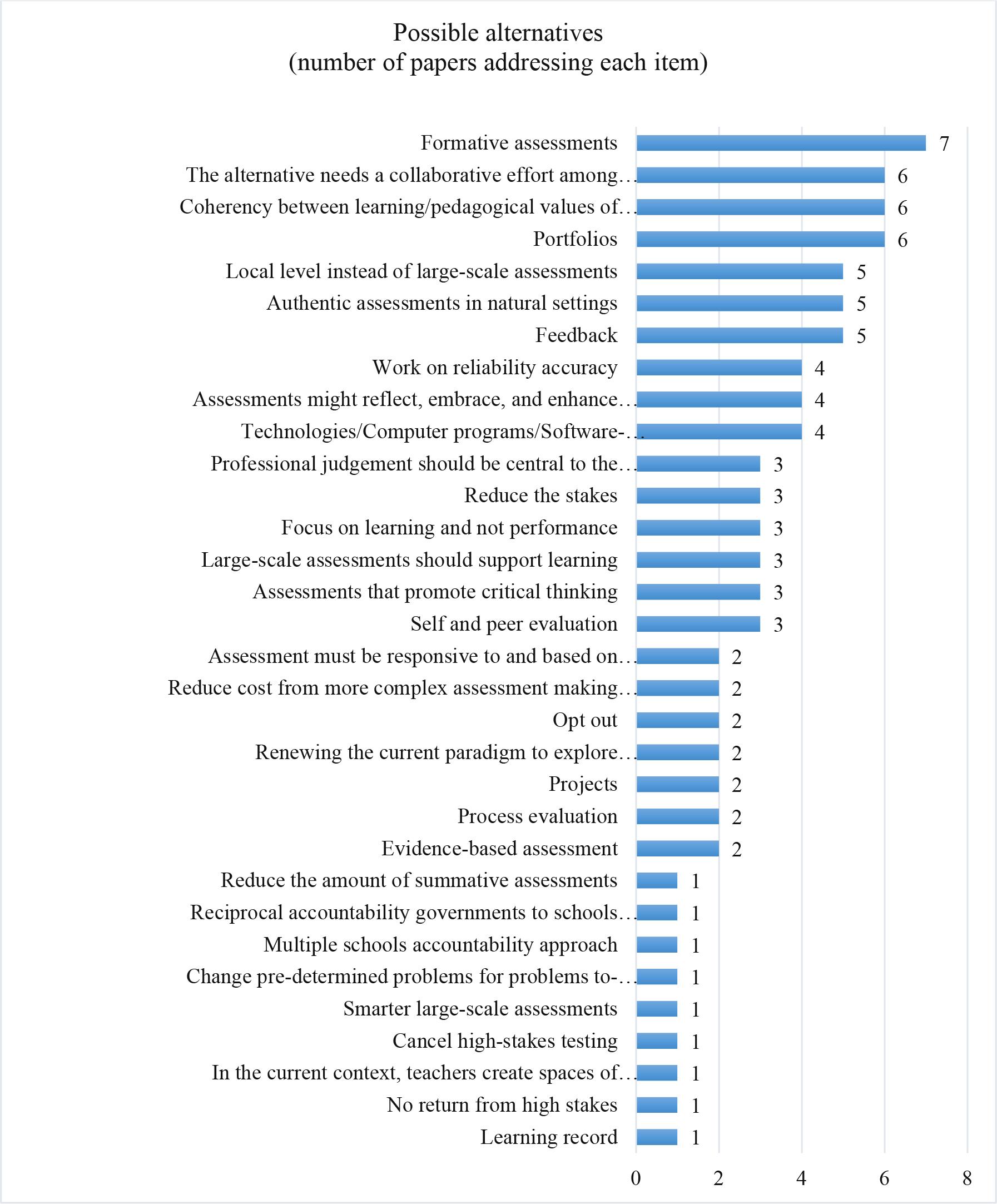

The following compilation represents a sample of the extensive body of literature on the subject. The results presented are based on the review of the 25 selected articles.

Most of the results shown in Figure 5 provide ideas and possibilities for improvements to the current large-scale assessment methods. However, two papers suggest abandoning them completely, either through students and schools opting out (Wang, 2017; Ashadi et al., 2022) or through cancelling the tests altogether (Ashadi et al., 2022). These options, although extreme, represent a position that deserves consideration; however, as mentioned previously, HSTs fulfil a current need and have strengths that are relevant when thinking about possible futures.

Source: Author’s elaboration (2024).

FIGURE 5 Chart obtained from sub-review 2: Critical Interpretative Synthesis Review focused on answering RQ.2 - What would be the best alternatives or possible future for high-stakes testing?

Conversely, the rest of the reviewed literature recognises the value of these mechanisms for gathering and providing information about learning and the quality of education, for responding to the need of public educational institutions to be accountable, and for obtaining input that enables in-depth discussions about the processes occurring in the classroom (Dorn, 1998; Chudowsky & Pellegrino, 2003). Likewise, HST functions as a student selection and allocation tool at crucial educational junctures, such as progressing from primary to secondary school, advancing from school to higher education, and making decisions on resource allocation (Suto & Oates, 2021). These studies also indicate the urgent need to improve and rethink current HST and to reconsider the current paradigm (Chudowsky & Pellegrino, 2003; Volante, 2007). Otherwise, the weaknesses of HST will continue to have a negative impact on learning and perpetuate inequalities (Volante, 2007; Lingard, 2009; Syverson, 2011).

The results of the present review were organised into three clusters. First, concrete alternatives that can provide simpler solutions; second, systemic changes that involve more complex and deeper alternatives; and third, suggestions regarding how the processes of developing new alternatives to high-stakes testing may be conducted.

Firstly, the authors reviewed suggest: the incorporation of feedback (Cato & Walker, 2022; Chudowsky & Pellegrino, 2003; Brown et al., 2014; Zimmerman & Dibenedetto, 2008; Beyond Test Scores Project [BTS Project] & National Education Policy Center [NEPC], 2023), consideration and combination of evaluation in authentic and spontaneous contexts (Syverson, 2011; Roberson, 2011; Brown et al., 2014; Volante, 2007; Lingard, 2009), process evaluation (Behizadeh & Lynch, 2017; Zimmerman & Dibenedetto, 2008), self- and peer-evaluation (Açıkalın, 2014; Chudowsky & Pellegrino, 2003; Roberson, 2011), projects (Açıkalın, 2014; BTS Project & NEPC, 2023), more emphasis on formative assessments to reduce the prominence of summative assessments (Açıkalın, 2014; Chudowsky & Pellegrino, 2003; Roberson, 2011; Brown et al., 2014; Gillanders et al., 2021; Zimmerman & Dibenedetto, 2008; Hutchinson & Hayward, 2005; Hayward et al., 2004; Hayward & Spencer, 2010; BTS Project & NEPC, 2023), fostering critical thinking and high-level skills (Ab Kadir, 2017; Roberson, 2011; Brown et al., 2014), a focus on learning rather than performance (Volante, 2007; Lingard, 2009; BTS Project & NEPC, 2023), and allowing spaces for non-pre-determined forms of evaluation (Beghetto, 2019).

More concretely, some authors recommend: portfolios (Syverson, 2011; Açıkalın, 2014; Chudowsky & Pellegrino, 2003; Behizadeh & Lynch, 2017; Herman & Winters, 1994; BTS Project & NEPC, 2023) and smarter large-scale assessments through the use of technologies, computer programmes, software and artificial intelligence that allow information to be gathered in a formative manner, providing feedback and many of the aforementioned elements (Beghetto, 2019; Chudowsky & Pellegrino, 2003; Behizadeh & Lynch, 2017).

Secondly, regarding the systemic and complex alternatives, the studies propose that policy should aim for coherence between curriculum and assessment, and that both should be aligned with learning and pedagogical purposes (Ab Kadir, 2017; Dorn, 1998; Chudowsky & Pellegrino, 2003; Volante, 2007; Zimmerman & Dibenedetto, 2008; BTS Project & NEPC, 2023). Using the same logic, several papers point out that it is necessary for these mechanisms to promote and address the complexity and comprehensiveness of human beings and education (Roberson, 2011; Volante, 2007; Hayward & Spencer, 2010; BTS Project & NEPC, 2023). Furthermore, the literature suggested that large-scale assessments should be more focused at local levels (Dorn, 1998; Gillanders et al., 2021; Moss, 2022; Ashadi et al., 2022; Volante, 2007; BTS Project & NEPC, 2023).

Several studies emphasised the importance of devolving responsibility and trust in the professional role of teachers, returning their professional autonomy (Hutchinson & Hayward, 2005; Hayward & Spencer, 2010; Lingard, 2009). Additionally, authors mentioned that the biggest problem with HST is the stakes, and therefore, regardless of what decision is made based on improvements or alternatives, the form of assessment must reduce the stakes (Behizadeh & Lynch, 2017; Hooge et al., 2012; BTS Project & NEPC, 2023).

Thirdly, regarding how to undertake the process of transformation, there are two crucial elements. The first is the need for a collaborative approach, involving all stakeholders and specialists (Chudowsky & Pellegrino, 2003; Volante, 2007; Behizadeh & Lynch, 2017; Hutchinson & Hayward, 2005; Hooge et al., 2012; BTS Project & NEPC, 2023). The second is the need to rethink and embrace new paradigms that differ from current thinking and reach beyond the boundaries of the system (Chudowsky & Pellegrino, 2003; Volante, 2007).

Lastly, Syverson (2011, p. 4) perfectly illustrates the focus needed for the possible future of HST, saying that “standardized testing has focused on standardizing the content of what is assessed, rather than standardizing the architecture in which diverse kinds of evidence of learning can be collected, organized, understood, and evaluated”.

DISCUSSION

The present study found that high-stakes testing does indeed have substantively harmful consequences (Verger, Fontdevila et al., 2019). Yet, at the same time, it provides an opportunity and is a necessary form of transparency and democracy, fundamental to today’s world (Schillemans et al., 2013; Bovens, 2010). Moreover, as Hayward (2015, p. 38) points out, evaluation has to be “as, for and of” learning; therefore, stakeholders involved in education have the opportunity to reverse and improve the situation and transform these mechanisms to the benefit of learning and education. However, this requires two elements to be considered: collaboration that enables people to see what cannot be seen individually and to find solutions that have not yet been considered (Chudowsky & Pellegrino, 2003; Volante, 2007; Hutchinson & Hayward, 2005; Hooge et al., 2012; BTS Project & NEPC, 2023); and a willingness among policymakers to analyse new structures and paradigms (Volante, 2007; Chudowsky & Pellegrino, 2003).

Expanding on the previous idea, concrete examples appear in the second sub-review, demonstrating that new movements and possibilities have emerged. In the United States, some individuals and schools have opted out of HST systems; they have created the “FairTest” movement (Syverson, 2011). Likewise, in Scotland, a formative assessment project has started, supported by the government in partnership with academia and schools (Hutchinson & Hayward, 2005; Hayward et al., 2004; Hayward & Spencer, 2010). Following the same logic, the solutions and options presented in the studies yield simple, concrete and meaningful alternatives, such as the lowering of stakes (Hooge et al., 2012; BTS Project & NEPC, 2023). This is a small step, but it would have significant effects on classrooms, schools and learning, possibly reducing some of the unintended consequences.

On the other hand, addressing only superficial aspects, rather than tackling the root cause of a problem, will only maintain the problem. One example is focusing solely on specific content or skills as a remedy. In the case of PISA, which has incorporated creativity into its assessments, this could lead countries and schools to adopt unethical behaviours, teach to the test, and narrow curricula to prioritise this new skill (Beghetto, 2019). Therefore, achieving meaningful and profound improvements requires addressing problems at their foundation (Beghetto, 2019).

Similarly, another highly realistic solution is to implement different forms of assessment (Hooge et al., 2012), complementing the summative with the formative, and facilitating it through group assessments by territory (Volante, 2007; BTS Project & NEPC, 2023). In this way, it is not overly expensive, and the considerable effort required would be spread across small stages. In addition, incorporating technologies in the day-to-day classroom allows processes to be evaluated and feedback to be given from a more ludic and pedagogical perspective, which is also a reasonable and effective alternative (Behizadeh & Lynch, 2017; Beghetto, 2019). Lastly, there is the proposal of designing assessments without pre-determined ideas, using open-ended questions or exercises that allow students to develop their own ideas, without markers holding a pre-determined notion of a correct answer (Beghetto, 2019).

Finally, it is relevant to consider that education not only shapes the system itself but also influences society and, consequently, humanity: “what students should know and what students do not know are all highly controlled by the examinations” (Emler et al., 2019, p. 281). The consequences of this situation become more apparent when individuals are unaware of their knowledge gaps and therefore cannot even realise that there are things they do not know, although this is a topic for further investigation. However, as long as high-stakes assessments persist as they are now, the content taught in classrooms will be limited to what is measured. Everything outside that spectrum will remain unknown or unexplored (Emler et al., 2019).

CONCLUSION

Overall, the present study initially addressed the history of HST, related concepts, and phenomena in order to facilitate understanding and enable the reader to contextualise. Then, the methodology delved into the two sub-reviews that constituted the general SLR, which facilitated an in-depth enquiry. The subsequent step involved presenting the obtained results, followed by the discussion, implications, and recommendations.

Hence, it is necessary to return to the main research question, presented at the beginning of this study: how can the future of large-scale assessment serve as both a facilitator for enhanced learning and a robust mechanism for ensuring quality within education systems? The answer is composed of a few ideas. First, there is a need for collaborative partnerships that consider students and policymakers. From there, it is necessary to recognise what works and what does not, and think about how to improve it. While questioning and understanding underlying power dynamics is fundamental, it is necessary to move toward mechanisms that empower everyone.

The literature already contains some of this completed work, including the main consequences and ways to improve. However, it is now time to create, to think beyond the boundaries, and to put together the pieces of the puzzle that were presented in the present study. Thus, it is time to build mechanisms that utilise formative and summative assessments (Brown et al., 2014) in authentic settings (Syverson, 2011), that enhance higher-order skills (Hayward & Spencer, 2010), that give space for to-be-determined problems (Beghetto, 2019), and that generate local solutions and use technologies (Behizadeh & Lynch, 2017). Likewise, reduction of the stakes should be reconsidered (Hooge et al., 2012). Resources such as portfolios (Chudowsky & Pellegrino, 2003), projects (BTS Project & NEPC, 2023), peer and self-assessment (Açıkalın, 2014), and software that measures processes and provides feedback (Behizadeh & Lynch, 2017), among many other options detailed in Figure 5, should be explored. However, it is necessary to highlight that, whatever the assessment is, it must respond to the context in which it is immersed and be a means of promoting and becoming part of the learning process. In essence, a balance can be established between enhanced learning and a robust mechanism for ensuring quality within education systems.

In conclusion, the changes require that governments take the initiative and begin to think collaboratively outside the boundaries to create new LSA mechanisms. These mechanisms should allow for creative, complex futures in which education preserves its primary purpose, learning, and allows students to succeed and flourish according to their individual interests and talents (Nussbaum, 2011; Emler et al., 2019). They should also expand the possibilities of education and pursue equity rather than perpetuate inequalities (Zhao, 2014).
