Educação em Revista

Print version ISSN 0102-4698; online version ISSN 1982-6621

Educ. rev. vol.39 Belo Horizonte 2023 Epub 23 Feb 2023

https://doi.org/10.1590/0102-4698237523

ARTICLE

SCIENTIFIC LITERACY ASSESSMENT INSTRUMENTS: A SYSTEMATIC LITERATURE REVIEW

Centro de Investigação em Educação e Psicologia da Universidade de Évora (CIEP-UE) / Center for Research in Education and Psychology of the University of Évora (CIEP-UE), Évora, Portugal.

The study aimed to analyze scientific literacy assessment instruments. To this end, a systematic literature review was carried out in the B-On, SciELO, Google Scholar, and RCAAP databases to identify studies that used scientific literacy assessment instruments. The selection criteria included articles published between 1990 and 2020 in Portuguese, English, or Spanish that developed and/or used scientific literacy assessment instruments. The following were excluded: articles that did not address scientific literacy in the title or abstract, articles that did not cite instruments and results of assessing students' scientific literacy, reviews, case studies, and articles that assessed specific disciplines or topics. Thirteen scientific literacy assessment instruments were identified. Most of the studies were conducted in Brazil, Indonesia, and the United States, predominantly with Secondary School students. Higher Education students showed the most positive results. The most frequently evaluated dimensions of scientific literacy were related to different scientific literacy skills. Respondents were classified using the descriptive frequencies of responses to the items, with no standardization in how the results were categorized. We conclude that caution is required when comparing the results of the studies, since many instruments were applied at educational levels and in contexts different from those for which they were developed.

Keywords: scientific literacy; assessment; instruments; systematic literature review

INTRODUCTION

Scientific literacy has been the subject of numerous research studies that aim to define the concept and assess the scientific literacy of individuals from different sectors of society. Although extensively studied, it still raises challenging questions and leaves gaps to be filled. The term scientific literacy emerged in the 1950s, right after World War II and at the start of the space race. Initially vague and imprecise, the term has received greater attention since the 1980s, a period during which various studies have sought to conceptualize it (DeBoer, 2000; Miller, 1983; Shamos, 1995).

According to Laugksch and Spargo (1996a), the study of Miller (1983) was the precursor to most of this research. In this article, the author presented three dimensions of scientific literacy: nature of science, science content knowledge, and the impact of science and technology on society. Miller (1983) also disseminated strategies for assessing these three dimensions. Since then, numerous studies have been conducted to develop instruments for assessing scientific literacy. Laugksch and Spargo (1996b) present the most recognized instruments up to the date of publication of their article, namely: the Test on Understanding Science (TOUS), the Nature of Scientific Knowledge Scale (NSKS), the Nature of Science Scale (NOSS) and the Views of Science-Technology-Society (VOSTS). However, Laugksch and Spargo (1996b), Gormally, Brickman, and Lutz (2012), and Fives, Huebner, Birnbaum, and Nicolich (2014) argue that these instruments assess only individual, narrow aspects of scientific literacy and that none can assess all of its dimensions.

Within this perspective, this exploratory study aimed to conduct an extensive systematic literature review (SLR) of the instruments used to assess scientific literacy since the 1990s. It analyzes the following characteristics of the instruments: purpose, target audience, item format and number of items, and the process of categorizing the results. The study also analyzes the methodological and contextual characteristics of the scientific productions and the ways in which their results were presented and analyzed. It further examines the main results regarding the dimensions of scientific literacy assessed, students' performance, and the presence or absence of significant differences among respondents.

METHODOLOGY

Research Strategy

According to Galvão and Pereira (2014), a systematic literature review (SLR) is characterized as "a type of research focused on a well-defined question, which aims to identify, select, evaluate and synthesize the relevant available evidence" (p. 183). In this sense, SLR is a research strategy that elaborates a research question and uses systematic methods to identify and select articles, synthesize and extract the data, and write up and publish the results, allowing the researcher to produce new knowledge (Briner & Denyer, 2012; Galvão & Pereira, 2014).

This SLR was conducted from September 2020 to February 2021 in two consecutive stages. The first was the planning of the SLR protocol (Box 1), in which the following were defined: the objective of the SLR; the research question; the databases to be consulted; the search keywords; and the inclusion and exclusion criteria for the literature review.

The second stage consisted of the literature review itself, based on the established protocol. Scientific productions were identified by combining the keywords in searches of the defined databases. Those that met the stipulated selection criteria were selected by reading their titles and abstracts. When the abstract was not enough to decide on a study's inclusion or exclusion, the study was read in its entirety.

Data Analysis

The publications were analyzed by reading in full the studies that met the stipulated selection criteria. The analysis protocol was structured as a Microsoft Excel database with a dedicated data-extraction form. The form recorded the identification data of the study and authors, the objective, sample size, methodology, results, discussion, and conclusion. A descriptive analysis of the frequency distribution of the following variables was then performed: type of scientific literacy assessment instrument, study design, place of origin of the studies, context of the studies, and how the results on scientific literacy were presented. The main characteristics of the selected studies and the most frequent instruments for assessing scientific literacy were organized in tables.

RESULTS AND DISCUSSION

We identified 189 scientific productions published in Portuguese, English, and Spanish. Of these, 146 were excluded for not meeting the inclusion criteria, limiting to 43 the number of productions selected for analysis (Table 1).

Table 1 The number of scientific productions selected for the SLR.

| Productions | English | Portuguese | Spanish | Total |
|---|---|---|---|---|
| Identified | 139 | 49 | 1 | 189 |
| Excluded | 114 | 32 | 0 | 146 |
| Selected | 25 | 17 | 1 | 43 |

Source: the authors.

Of the selected studies, the oldest publication is from 1996, and the most recent is from 2020. Although all of them address aspects related to the theme of scientific literacy assessment, only a few scientific productions refer to the development and validation of new instruments.

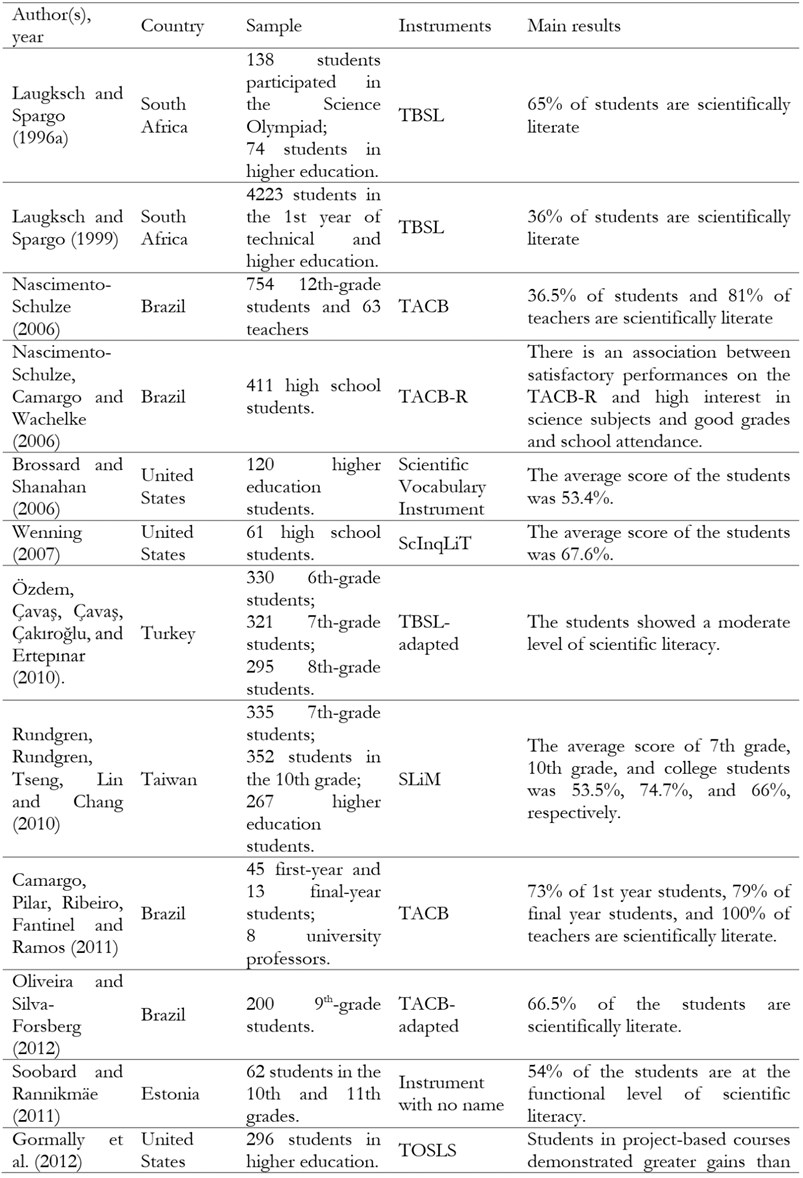

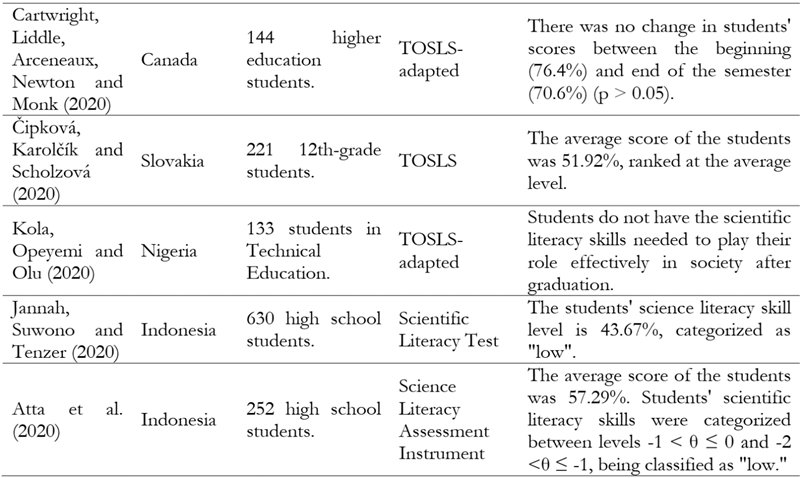

Box 2 Summary of studies selected for analysis of scientific literacy assessment instruments (n = 43).

Note. TBSL: Test of Basic Scientific Literacy; TACB: Test of Basic Scientific Literacy (Portuguese version of the TBSL); TACB-S: Simplified Test of Basic Scientific Literacy; TACB-R: Reduced Test of Basic Scientific Literacy; ScInqLiT: Scientific Inquiry Literacy Test; SLiM: Scientific Literacy Measurement; TOSLS: Test of Scientific Literacy Skills; SLA: Scientific Literacy Assessment; GSLQ: Global Scientific Literacy Questionnaire; SToSLiC: Test of Scientific Literacy Integrated Character. Source: the authors.

Scientific literacy assessment tools

In the 43 studies analyzed, 13 scientific literacy assessment instruments were identified: the TBSL, the TOSLS, the SLA, and the ScInqLiT, together with their respective adapted versions; the Scientific Literacy Test; the SLiM; the Media Scientific Literacy Instrument; the Scientific Literacy Assessment Instrument; the Scientific Literacy Assessment; the SToSLiC; the GSLQ; and the unnamed instruments of Santiago et al. (2020) and Soobard and Rannikmäe (2011).

The TBSL and its versions were used in 18 studies (41.9%), conducted in South Africa, Brazil, and Turkey. The TOSLS and its versions were applied in the United States, Brazil, Indonesia, Canada, Slovakia, and Nigeria, as reported in ten articles (23.3%). The SLA and its versions were adopted in three studies (7.0%) in the United States and Indonesia. The GSLQ was also used in three articles (7.0%) and was applied in Australia, China, South Korea, and Indonesia. The ScInqLiT was used in the United States and Indonesia, as described in two articles (4.7%). The other seven instruments are each mentioned in one article (2.3%), in Indonesia, the United States, Thailand, Taiwan, Brazil, and Estonia.
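The percentages in this paragraph are frequency shares of the 43 selected studies. The Python sketch below recomputes them from the study counts reported above. It assumes conventional rounding to one decimal place with Python's built-in `round`, which is not necessarily the authors' procedure. The instrument-family labels are illustrative.

```python
# Number of selected studies per instrument family, as reported in the text.
STUDY_COUNTS = {
    "TBSL and versions": 18,
    "TOSLS and versions": 10,
    "SLA and versions": 3,
    "GSLQ": 3,
    "ScInqLiT": 2,
    "Other seven instruments (one study each)": 7,
}


def percentage_shares(counts, ndigits=1):
    """Return each count as a percentage of the grand total, rounded to `ndigits`."""
    total = sum(counts.values())
    return {name: round(100 * n / total, ndigits) for name, n in counts.items()}


shares = percentage_shares(STUDY_COUNTS)
for name, share in shares.items():
    print(f"{name}: {STUDY_COUNTS[name]} studies ({share}%)")
```

Each of the seven single-study instruments accounts for 1/43 of the studies, or about 2.3%.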

Studies conducted in Brazil, Indonesia, and the United States predominate. Of the 43 studies, 18 (41.9%) were conducted in Brazil, mostly applying the TACB and TACB-S. Seven (16.3%) were conducted in Indonesia, using a variety of instruments, and seven (16.3%) in the United States, where the TOSLS and the SLA stood out.

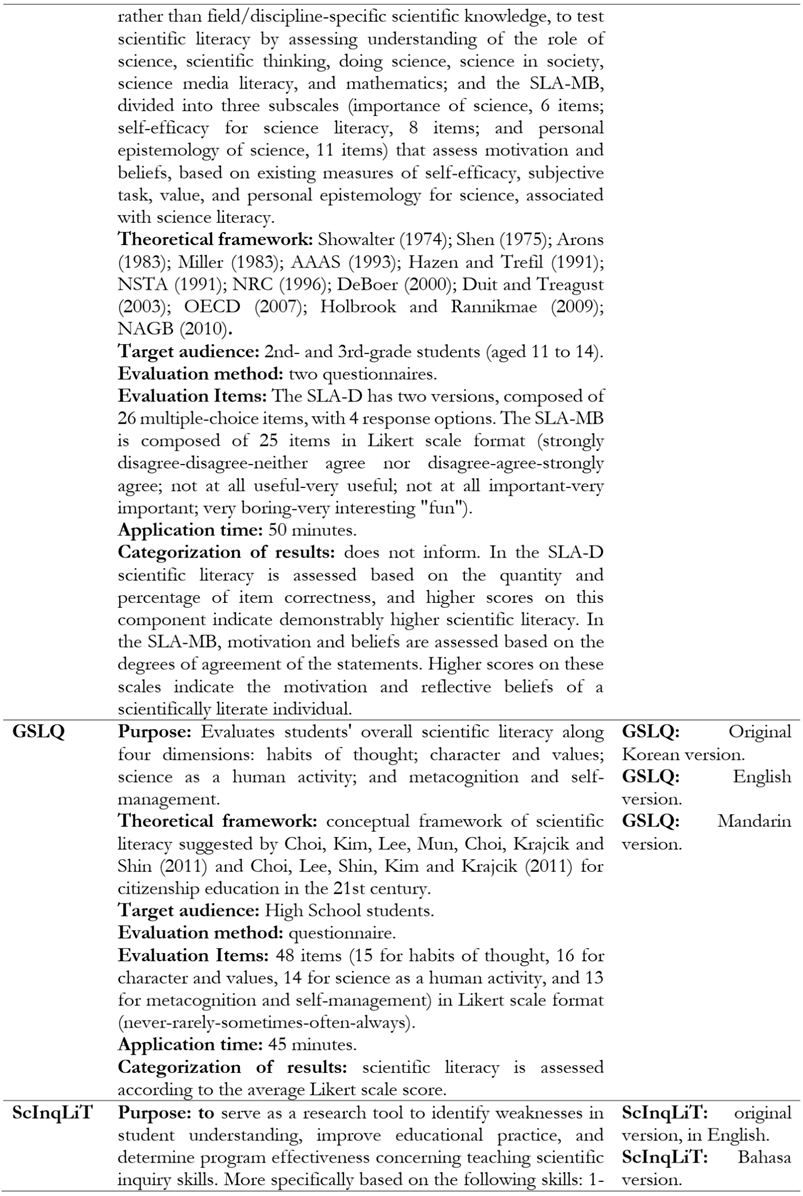

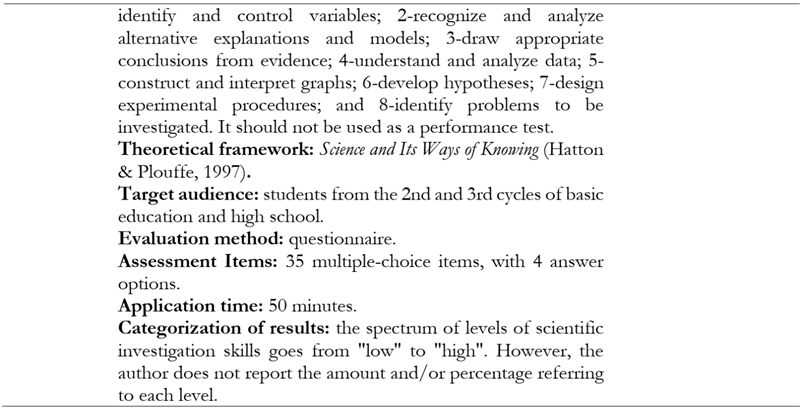

We also observed that the TBSL, the TOSLS, the SLA, the GSLQ, and the ScInqLiT, together with their respective versions, were the most commonly adopted scientific literacy assessment instruments. Among these, the Portuguese translation of the TBSL (the TACB) and the TOSLS were the most frequently used, in eight and seven studies, respectively. The main characteristics of these five instruments are presented in Box 3.

Source: the authors.

Box 3 Summary of the main characteristics of the 5 most frequently used scientific literacy assessment instruments.

Considering the instruments' nature, they can be classified according to the purpose of the assessment. The TBSL (Laugksch & Spargo, 1996a) and its versions assess the respondents' level of scientific literacy. These versions are the TACB (Nascimento-Schulze, 2006), the TACB-S (Vizzotto & Mackedanz, 2018), the TACB-R (Nascimento-Schulze et al., 2006), and adapted versions. A second group of instruments tests individuals' mastery of different scientific literacy skills. It comprises the TOSLS (Gormally et al., 2012) and its adapted versions, the ScInqLiT (Wenning, 2007), the Scientific Literacy Assessment Instrument (Atta et al., 2020), the Scientific Literacy Test (Jannah et al., 2020), the Scientific Literacy Assessment (Koedsri & Ngudgratoke, 2018), and Soobard and Rannikmäe's (2011) instrument. The SLA (Fives et al., 2014) and its adapted versions assess both scientific literacy knowledge in everyday situations and the motivations and beliefs related to it. The SToSLiC (Jufri et al., 2019) assesses scientific literacy skills and the degree of agreement with sentences expressing elements of character formation. The SLiM (Rundgren et al., 2010) and the Media Scientific Literacy Instrument (Brossard & Shanahan, 2006) assess individuals' understanding of the scientific and technical vocabulary presented in the media. The GSLQ (Mun et al., 2013) identifies scientific literacy in the dimensions of thinking, character and values, science as a human activity, and metacognition and self-management. Finally, the Santiago et al. (2020) instrument assesses the degree to which respondents agree on the relationship between science and the environment, society, and technology. It also assesses their agreement on the school science vision in aspects of scientific literacy.

Thus, seven instruments (53.8%) aim to assess different scientific literacy skills, and three (23.1%) were developed to assess students' level of scientific literacy knowledge. Another three (23.1%) are directed at assessing students' values, beliefs, habits, and motivations related to scientific literacy. Notably, the objectives of this last group allow the affective domain to be assessed, whereas the other instruments assess the cognitive domain of scientific literacy. The assessment of these affective factors seems to be in line with the position of Fives et al. (2014), who claim that

to achieve the goal of a scientifically literate society, individuals need to be more than knowledgeable about scientific content, they must also value that content and be open to it as a source of information for decision-making. (p. 576)

Concerning question configuration, five item formats were identified, with a predominance of multiple-choice items. The TBSL and its versions use a true/false/don't know format. The TOSLS, the ScInqLiT and their respective versions, the Scientific Literacy Assessment, the Scientific Literacy Test, and the SLiM adopt a multiple-choice format with four response options; the fourth option of the SLiM is "I don't know". The GSLQ employs a Likert scale, and the SLA and the SToSLiC use both multiple-choice items and Likert scales. The Scientific Literacy Assessment Instrument adopts discursive (open-ended) items, and the Media Scientific Literacy Instrument uses a constructed-response "fill in the blank" format.

Methodological and contextual characteristics of the selected studies

All the studies analyzed used questionnaire surveys. Of the 43 surveys, seven (16.3%) reported the data from pilot studies, 11 (25.6%) published the validation process of assessment instruments, and 25 (58.1%) conducted only the application of the instruments.

Among the studies that reported pilot study data, Brossard and Shanahan (2006), Laugksch and Spargo (1996a), Rundgren et al. (2010), Soobard and Rannikmäe (2011), and Wenning (2007) described the design and validation of scientific literacy assessment instruments. Rachmatullah et al. (2016) translated the SLA into the local language in Indonesia. Vizzotto et al. (2020) analyzed the validity of the TACB-S for an audience other than the one for which it was designed.

Among the studies that reported a validation process, Atta et al. (2020), Fives et al. (2014), Gormally et al. (2012), Jannah et al. (2020), Jufri et al. (2019), Koedsri and Ngudgratoke (2018), McKeown (2017), and Mun et al. (2015) published the development of new scientific literacy assessment instruments. Nascimento-Schulze (2006) translated the TBSL into Portuguese, originating the TACB. Vizzotto and Mackedanz (2018) and Nascimento-Schulze et al. (2006) reduced and simplified the TACB, deriving the TACB-S and the TACB-R, respectively.

Finally, several studies only applied previously developed and validated instruments. Camargo et al. (2011), Coppi and Sousa (2019a, 2019b), Gresczysczyn et al. (2018), Lima and Garcia (2013, 2015), and Rivas et al. (2017) used the TACB. Čipková et al. (2020), Gomes and Almeida (2016), Shaffer et al. (2019), Souza (2019), Utami and Hariastuti (2019), and Waldo (2014) applied the TOSLS. Vizzotto (2019) and Vizzotto and Del Pino (2020a, 2020b) employed the TACB-S, while Mun et al. (2013) and Pramuda et al. (2019) adopted the GSLQ. Cartwright et al. (2020), Kola et al. (2020), and Santiago et al. (2020) used adapted versions of the TOSLS. Laugksch and Spargo (1999) applied the TBSL, and Özdem et al. (2010) adopted an adapted version of it. Oliveira and Silva-Forsberg (2012) used an adapted version of the TACB, and Innatesari et al. (2019) applied the ScInqLiT.

As for the level of education, 32 studies (74.4%) assessed the scientific literacy of students at a single level, and 11 (25.6%) assessed individuals at different levels, in some cases including teachers. Most studies assessed secondary and higher education students, followed by those that assessed students in the 3rd cycle of basic education, as shown in Figure 1.

However, it should be noted that the 13 scientific literacy assessment instruments target different educational levels. Five were developed for secondary school students: the Scientific Literacy Test, the Scientific Literacy Assessment Instrument, and the instruments of Santiago et al. (2020) and Soobard and Rannikmäe (2011). Three were developed for elementary school students: the SLA, the SToSLiC, and the Scientific Literacy Assessment. Two were developed for higher education students: the TOSLS and the Media Scientific Literacy Instrument. The other three instruments were constructed for more than one level of education.

In this sense, we observed that some instruments were applied to target populations other than the one for which they were developed. This occurred most frequently with the versions of the TBSL. The TBSL was developed to assess the scientific literacy of secondary school leavers. Nevertheless, the TACB, the TACB-S, and adapted versions of the TBSL and the TACB have been used to assess students at the secondary, technical, and higher education levels. At the higher education level, most studies aimed to compare the scientific literacy of first-year and final-year students in biology, chemistry, and physics undergraduate courses.

Noting how often the TACB had been applied in the 3rd cycle of basic education, Vizzotto et al. (2020) analyzed the psychometric characteristics of this instrument by applying the TACB-S. Their aim was to verify its validity and reliability for this level of education. The results revealed low reliability, difficulty, and discrimination indices. The authors therefore concluded that the TACB and its reduced and simplified versions (TACB-R and TACB-S) should not be applied to students of the 3rd cycle without prior adaptation and validation for that level of education (Vizzotto et al., 2020).
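Reliability, difficulty, and discrimination indices like those mentioned above can be computed from a matrix of dichotomous (0/1) item scores. The Python sketch below uses three common classical test theory choices: the proportion correct as the difficulty index, the upper-lower 27% groups for discrimination, and KR-20 for reliability. These specific formulas are our assumption; the procedures of Vizzotto et al. (2020) are not detailed here, and the response data are hypothetical.

```python
from statistics import mean, pvariance


def item_difficulty(responses):
    """Difficulty index p for each item: the proportion of respondents
    answering it correctly. `responses` holds one list of 0/1 scores per respondent."""
    n_items = len(responses[0])
    return [mean(row[j] for row in responses) for j in range(n_items)]


def item_discrimination(responses, fraction=0.27):
    """Upper-lower discrimination index for each item: difficulty in the
    top-scoring group minus difficulty in the bottom-scoring group, where the
    groups are the top and bottom `fraction` of respondents by total score."""
    ranked = sorted(responses, key=sum, reverse=True)
    k = max(1, round(fraction * len(ranked)))
    upper, lower = ranked[:k], ranked[-k:]
    return [u - l for u, l in zip(item_difficulty(upper), item_difficulty(lower))]


def kr20(responses):
    """Kuder-Richardson 20 reliability coefficient for dichotomous (0/1) items."""
    k = len(responses[0])
    if k < 2:
        raise ValueError("KR-20 needs at least two items")
    total_variance = pvariance([sum(row) for row in responses])
    if total_variance == 0:
        raise ValueError("total scores have zero variance")
    item_variance = sum(p * (1 - p) for p in item_difficulty(responses))
    return (k / (k - 1)) * (1 - item_variance / total_variance)


# Hypothetical answers of six respondents to three dichotomous items.
responses = [
    [1, 1, 1],
    [1, 1, 0],
    [1, 0, 1],
    [0, 1, 0],
    [1, 0, 0],
    [0, 0, 0],
]
print(item_difficulty(responses))
print(item_discrimination(responses))
print(round(kr20(responses), 3))
```

With real test data, low KR-20 values and near-zero discrimination indices would point to the kind of problems the authors reported. The cut-offs used to call a value "low" vary between sources.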

Ways of presenting and analyzing the results of scientific literacy

In all the instruments analyzed, individuals were classified and compared on the basis of frequency distributions, that is, the number and/or percentage of responses to the items. Of the 13 instruments, six do not report how respondents are categorized: the TOSLS, SLA, SToSLiC, SLiM, GSLQ, and Media Scientific Literacy Instrument.

Among the instruments that do, the TBSL and its TACB and TACB-S versions classify individuals as scientifically literate or not scientifically literate. To be considered scientifically literate on the TBSL, an individual must reach minimum scores on three subtests: 13 on the nature of science, 45 on science content knowledge, and 10 on the impact of science and technology on society. In the TACB-S, the minimum scores on these subtests are 6, 17, and 5, respectively.
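The classification rule described above requires the respondent to reach the minimum on every subtest, not just on the total. A minimal Python sketch of that rule follows. It is not the authors' scoring code. The subtest keys are hypothetical names, and the TACB entry assumes, as the text suggests, that the Portuguese translation keeps the TBSL minimums.

```python
# Minimum scores per subtest, as reported in the text.
# The TACB entry assumes the translation keeps the TBSL minimums.
MINIMUMS = {
    "TBSL": {"nature_of_science": 13, "content_knowledge": 45, "impact_on_society": 10},
    "TACB": {"nature_of_science": 13, "content_knowledge": 45, "impact_on_society": 10},
    "TACB-S": {"nature_of_science": 6, "content_knowledge": 17, "impact_on_society": 5},
}


def is_scientifically_literate(scores, instrument="TBSL"):
    """Return True only if the score on every subtest reaches its minimum.

    `scores` maps the (hypothetical) subtest keys used in MINIMUMS to the
    respondent's score on that subtest.
    """
    minimums = MINIMUMS[instrument]
    return all(scores[subtest] >= minimum for subtest, minimum in minimums.items())


# A respondent who clears two subtests but misses the third is not classified as literate.
print(is_scientifically_literate(
    {"nature_of_science": 14, "content_knowledge": 50, "impact_on_society": 9}))  # False
```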

The Scientific Literacy Test categorizes the respondents' level of scientific literacy as "very good" (70-100% correct), "good" (60-69% correct), "moderate" (50-59% correct), "low" (40-49% correct), or "very low" (0-39% correct). Soobard and Rannikmäe's (2011) instrument, on the other hand, uses the categories "nominal", "functional", "conceptual/procedural", and "multidimensional", following the framework proposed by Bybee (1997). Santiago et al.'s (2020) instrument categorizes the responses of groups of individuals by agreement, as "agree" or "disagree".
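The Scientific Literacy Test bands above can be expressed as a simple lookup on the percentage of correct answers. The bands are stated as integer ranges (for example, 60-69%), so a fractional score such as 69.5% falls in a gap. The Python sketch below treats each band's lower bound as inclusive, which places such scores in the lower band. That handling is our assumption, not part of the instrument's description.

```python
def slt_level(percent_correct):
    """Map a percentage of correct answers to a Scientific Literacy Test level.

    Lower bounds (70, 60, 50, 40) follow the text; treating them as inclusive,
    so that e.g. 69.5% falls in "good", is an assumption.
    """
    if not 0 <= percent_correct <= 100:
        raise ValueError("percent_correct must lie between 0 and 100")
    bands = [(70, "very good"), (60, "good"), (50, "moderate"), (40, "low")]
    for lower_bound, label in bands:
        if percent_correct >= lower_bound:
            return label
    return "very low"


print([slt_level(p) for p in (85, 65, 50, 45, 20)])
# ['very good', 'good', 'moderate', 'low', 'very low']
```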

The ScInqLiT categorizes individuals' scientific literacy levels as "low" and "high", and the Scientific Literacy Assessment as "mastery" and "no mastery", but neither reports the cut-off values for each category. The same applies to the Scientific Literacy Assessment Instrument. It categorizes individuals' scientific literacy skills by the ability parameter θ of Item Response Theory (IRT), using the intervals "0 < θ ≤ 1", "-1 < θ ≤ 0", "-2 < θ ≤ -1", and "-3 < θ ≤ -2". However, it does not make the level corresponding to each category explicit.
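The θ intervals listed above are half-open, so each ability estimate belongs to at most one band. The Python sketch below assigns an IRT θ estimate to its interval. The level names are not given, so the interval itself serves as the label. As listed, the bands cover only -3 < θ ≤ 1, and the function returns `None` for estimates outside that range.

```python
# The four theta intervals listed in the text, as (lower, upper) pairs,
# each read as lower < theta <= upper. The level names are not given,
# so the interval itself is used as the label.
THETA_BANDS = [(0, 1), (-1, 0), (-2, -1), (-3, -2)]


def theta_band(theta):
    """Return the interval containing the IRT ability estimate `theta`,
    or None when theta falls outside every listed interval."""
    for lower, upper in THETA_BANDS:
        if lower < theta <= upper:
            return f"{lower} < θ ≤ {upper}"
    return None


for theta in (0.4, 0.0, -2.5, 1.3):
    print(theta, theta_band(theta))
```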

However, the analysis revealed that, for four of the five main scientific literacy assessment instruments, some authors chose to change the original instrument's analysis strategy. They created categories and/or modified how respondents' scientific literacy results were categorized and presented. Gresczysczyn et al. (2018), applying the TACB, assessed the average scientific literacy of groups rather than individuals. They classified as scientifically literate the groups whose average on the three subtests was higher than the minimum required score. Oliveira and Silva-Forsberg (2012), using an adapted version of the TACB, used five categories: "very good" (above 80% achievement), "good" (80%), "satisfactory" (70%), "regular" (60%), and "below" (up to 50%). Students who reached at least the "regular" level were classified as scientifically literate. Özdem et al. (2010) reported students' TBSL results as the mean score of correct answers, overall and on each subtest. Nascimento-Schulze et al. (2006) analyzed performance percentiles on the TACB-R, overall and by subtest, and compared them with the students' social representations of science and technology.
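Switching from individual classification to group averages can change conclusions, which is one reason such modifications hinder comparison. The Python sketch below uses hypothetical scores for three students. The group's mean clears every TBSL/TACB subtest minimum even though only one of the three students does. Treating the group comparison as "reaches the minimum" (>=) at the boundary is our assumption.

```python
from statistics import mean

# TBSL/TACB minimum scores per subtest: nature of science,
# science content knowledge, impact of science and technology on society.
MINIMUMS = (13, 45, 10)


def individual_is_literate(scores):
    """Individual rule: every subtest score reaches its minimum."""
    return all(score >= minimum for score, minimum in zip(scores, MINIMUMS))


def group_is_literate(group):
    """Group-average rule: the mean on every subtest reaches its minimum.
    Using >= (rather than >) at the boundary is an assumption."""
    subtest_means = [mean(column) for column in zip(*group)]
    return all(avg >= minimum for avg, minimum in zip(subtest_means, MINIMUMS))


# Hypothetical subtest scores for three students.
group = [(20, 60, 15), (10, 40, 8), (11, 44, 9)]
share_literate = sum(individual_is_literate(s) for s in group) / len(group)
print(group_is_literate(group), round(share_literate, 2))  # True 0.33
```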

As for the TOSLS, Gormally et al. (2012), Santiago et al. (2020), and Souza (2019) reported the frequencies of correct answers by skill, and Shaffer et al. (2019) reported them by skill category. Cartwright et al. (2020), Čipková et al. (2020), Gomes and Almeida (2016), Kola et al. (2020), Utami and Hariastuti (2019), and Waldo (2014) reported them for the instrument as a whole.

In the case of the SLA, Rachmatullah et al. (2016) classified respondents' scientific literacy results as "very low," "low," "medium," "high," and "very high." However, the authors did not report the number or percentage of correct answers corresponding to each category. The same was observed in the study by Innatesari et al. (2019), who categorized students' scientific literacy scores on the ScInqLiT as "low," "medium," and "high." Although they state that this classification was based on the average of the National Examination of Natural Sciences (UN IPA), they do not present the values for each category.

Thus, although all the scientific literacy assessment instruments rely on frequency distributions to classify respondents, they adopt different item formats and different methods of categorizing the results. These factors make it difficult to compare results between studies that used different instruments. Moreover, some studies modify the original instruments' categorization processes or even create new categories. This makes it difficult to compare analyses even among studies that adopted the same instrument.

Study results: performance in scientific literacy assessment

Among the 18 studies that adopted the TBSL and its versions (TACB, TACB-S, TACB-R, and adapted versions), nine found that most of the students assessed were scientifically literate. These were the studies of Camargo et al. (2011), Gresczysczyn et al. (2018), Laugksch and Spargo (1996a), Lima and Garcia (2015), Oliveira and Silva-Forsberg (2012), Rivas et al. (2017), Vizzotto and Del Pino (2020a, 2020b), and Vizzotto and Mackedanz (2018). In contrast, seven studies found that most of the students assessed did not reach the minimum score required to be considered scientifically literate. These were the studies of Coppi and Sousa (2019a, 2019b), Laugksch and Spargo (1999), Lima and Garcia (2013), Nascimento-Schulze (2006), Vizzotto (2019), and Vizzotto et al. (2020). The research of Özdem et al. (2010) showed moderate scientific literacy scores among the students investigated. Nascimento-Schulze et al. (2006) found that satisfactory performance on the TACB-R was related to high interest in scientific topics, as well as to good grades and school attendance.

Of the ten studies that applied the TOSLS and its adapted versions, three found mean scores above 50%: Čipková et al. (2020), Shaffer et al. (2019), and Waldo (2014). Four found mean scores below this value: Gomes and Almeida (2016), Kola et al. (2020), Santiago et al. (2020), and Utami and Hariastuti (2019). Gormally et al. (2012) observed significant gains in scientific literacy skills on the post-test. In contrast, Cartwright et al. (2020) found no statistically significant difference in the number of correct answers on TOSLS items between the pre-test and post-test, although the mean rate of correct answers was above 50% in both. Souza (2019), on the other hand, reported that some skills were well developed while others needed improvement, but did not indicate which ones.

Regarding the three studies that applied the SLA and its adapted version, Fives et al. (2014) and McKeown (2017) found mean percentages of correct answers above 50%. Rachmatullah et al. (2016) reported a mean below this value.

As for the GSLQ, Pramuda et al. (2019) reported mean scores of 3.84 and 3.87 for the control and experimental groups, respectively. Mun et al. (2015) reported a mean score of 3.46, and Mun et al. (2013) observed a mean score of 3.67. None of these studies reported a categorization of the results. In the case of the ScInqLiT, Wenning's (2007) study showed means above 50%, and Innatesari et al.'s (2019) study showed means below 50%. Seven further studies applied different scientific literacy assessment instruments. Of these, Atta et al. (2020), Brossard and Shanahan (2006), and Rundgren et al. (2010) found mean item scores above 50%, whereas Jannah et al. (2020) and Jufri et al. (2019) found mean scores below 50%. Koedsri and Ngudgratoke (2018) reported that students did not master the three scientific literacy attributes assessed. Soobard and Rannikmäe (2011) reported that 54% of students had a functional level of scientific literacy.

In general, most studies showed positive results in the assessment of respondents' scientific literacy. For the TBSL and its versions, this means that more than half of the students assessed reached the minimum score required to be considered scientifically literate. For the other instruments, it means that respondents obtained more than 50% correct answers on the items. With regard to level of education, most studies of students in the 2nd and 3rd cycles of Basic Education and in Higher Education showed positive results. By contrast, most of those that evaluated students in Secondary and Technical Education showed negative results.

However, these results should be interpreted with caution. As explained above, several studies applied instruments to target populations other than those for which the instruments were developed. In addition, many studies applied the instruments in other countries by merely translating them into the local language and, in some cases, shortening them. They did so without taking into account differences in context between the places of application, that is, without validating the instrument for that particular target population. Furthermore, most of the higher education students evaluated were enrolled in undergraduate courses in scientific disciplines such as Biology, Chemistry, Physics, and Natural Sciences, which could explain the positive results observed.

Study results: performance on the dimensions of scientific literacy

Regarding the dimensions of scientific literacy assessed by the instruments, only 28 studies reported which aspects yielded the best and worst results. All the studies that applied the TBSL and its versions found the best results on the science content knowledge subtest. The worst results varied between two subtests. They appeared on the nature of science subtest in Coppi and Sousa (2019b), Nascimento-Schulze et al. (2006), Özdem et al. (2010), Rivas et al. (2017), and Vizzotto and Mackedanz (2018). They appeared on the impact of science and technology on society subtest in Coppi and Sousa (2019a), Lima and Garcia (2015), Vizzotto (2019), and Vizzotto and Del Pino (2020a). Camargo et al. (2011) found that first-year students and teachers scored worst on the nature of science subtest. Final-year students scored worst on both the nature of science and the impact of science and technology on society subtests. Vizzotto and Del Pino (2020b) observed that first-year students had the fewest correct answers on the impact of science and technology on society subtest, and final-year students on the nature of science subtest.

Among the studies that used the TOSLS, no single skill consistently emerged as the strongest or the weakest. Regarding the best-performing skills:

- Santiago et al. (2020) and Čipková et al. (2020) identified the ability to evaluate the uses and misuses of scientific information.
- Souza (2019) identified the ability to identify a valid scientific argument.
- Utami and Hariastuti (2019) identified the ability to solve problems using quantitative skills, including basic statistical analysis.
- Waldo (2014) identified category 1 skills, related to recognizing and analyzing the use of research methods that lead to scientific knowledge.
- Gomes and Almeida (2016) identified several abilities: to identify a valid scientific argument, to evaluate the uses and misuses of scientific information, to understand the elements of a research design and how they impact scientific findings/conclusions, to create an appropriate graph from data, and to justify inferences, predictions, and conclusions based on quantitative data.

As for the weakest skills:

- Čipková et al. (2020) indicated the ability to understand the elements of a research design and how they impact scientific findings/conclusions.
- Santiago et al. (2020) indicated the ability to evaluate the validity of sources.
- Utami and Hariastuti (2019) indicated the ability to create an appropriate graph from data.
- Souza (2019) indicated the ability to read and interpret graphical representations of data.
- Gomes and Almeida (2016) indicated several abilities: to evaluate the validity of sources, to read and interpret graphical representations of data, to solve problems using quantitative skills including basic statistical analysis, and to understand and interpret basic statistics.
- Waldo (2014) indicated category 2 skills, related to organizing, analyzing, and quantitatively interpreting scientific data and information.

Rachmatullah et al. (2016) was the only study to perform this analysis for the SLA. The authors found that, in the SLA-D, the highest number of correct answers was in the dimension of understanding the role of science. The lowest was in the dimension of mathematics in science. All studies that applied the GSLQ found the best results in the dimension of science as a human activity. The worst results, however, were found in different dimensions. Mun et al. (2013) and Mun et al. (2015) found them in metacognition and self-management, while Pramuda et al. (2019) found them in character and values. Using the ScInqLiT, Innatesari et al. (2019) found that students were most successful on items assessing the ability to plan experimental procedures. They were least successful on items assessing the ability to identify and control variables.

As for the studies that applied the other instruments, Rundgren et al. (2010) found that the SLiM items on physics had the highest number of correct answers and those on chemistry the lowest. Jufri et al. (2019), using the SToLiC, and Jannah et al. (2020), using the Scientific Literacy Test, found that the skills of interpreting data and evaluating scientific evidence scored highest. The skills of evaluating and developing scientific research scored lowest. Soobard and Rannikmäe (2011) reported only that items requiring the application of scientific knowledge to new and everyday situations scored lowest.

The different item formats, categorization processes, and target audiences make it difficult to compare the studies. The diversity of dimensions assessed by each instrument further hinders any comparison of survey results. Nevertheless, a broader analysis reveals that no dimension of scientific literacy predominated in either the positive or the negative results.

Although this review focused on instruments for assessing scientific literacy in general, there was considerable interest in developing and validating instruments for specific topics within particular scientific disciplines. This is evident in the number of publications excluded because they addressed dimensions of scientific literacy specific to certain areas of knowledge (n = 42), particularly physics and chemistry.

This reflects the breadth of scientific literacy and the lack of comprehensive instruments to assess it fully, which has led some researchers to develop area-specific instruments. This scenario is also consistent with DeBoer (2000), who argues that

Science literacy should be conceptualized broadly enough for local school districts and individual teachers to pursue the goals best suited to their particular situations and the content and methodologies most appropriate for them and their students. (p. 582)

Study results: significant differences between individuals/groups

Regarding the comparison of scientific literacy results between the different groups of individuals analyzed, 16 of the 43 studies identified statistically significant differences for several variables:

- ethnicity (Fives et al., 2014; Laugksch & Spargo, 1999; Mun et al., 2013; Shaffer et al., 2019);
- education level (Laugksch & Spargo, 1999; McKeown, 2017; Mun et al., 2015; Rundgren et al., 2010);
- year/grade (Özdem et al., 2010);
- gender (Laugksch & Spargo, 1999; Mun et al., 2013, 2015; Shaffer et al., 2019);
- number of science subjects taken (Čipková et al., 2020; Laugksch & Spargo, 1999);
- type of institution, public or private (Gormally et al., 2012; Nascimento-Schulze, 2006; Vizzotto, 2019);
- pre-test/post-test (Cartwright et al., 2020; Gormally et al., 2012);
- socioeconomic level (Fives et al., 2014);
- initial versus continuing teacher training (Gomes & Almeida, 2016);
- number of failures (Vizzotto, 2019);
- combining work and study (Vizzotto, 2019);
- post-secondary studies (Vizzotto, 2019);
- continuation of studies (Vizzotto, 2019);
- first-year versus final-year higher education students (Vizzotto & Del Pino, 2020b).

The analysis shows that significant differences recurred mainly for ethnicity, education level, and gender. This further supports the argument that many instruments have been applied in target populations different from those for which they were designed. It also reinforces the need to validate instruments for the context in which they will be applied.

CONCLUSION

The SLR identified 13 scientific literacy assessment instruments used in 43 studies from 14 countries, most of them from Brazil, Indonesia, and the United States. Most researchers used instruments already validated in the literature, and the TBSL, the TOSLS, and their respective versions were the most widely adopted. Most instruments were found to assess different scientific literacy skills. Even so, some instruments assess characteristics of the affective domain, such as values, beliefs, habits, and motivations related to scientific literacy. This highlights the importance of such attributes in the development of a scientifically literate citizen.

Respondents were classified on the basis of the descriptive frequencies of responses to the items, and there was no standardization in the processes used to categorize the results.

Most of the instruments were developed to assess secondary school students, and most studies assessed the scientific literacy of secondary and higher education students. Of these, higher education students showed the best performance on the dimensions of scientific literacy analyzed. However, caution is needed in such comparisons, since the instruments were applied at levels of education and in contexts different from those for which they were developed.

This SLR enriches the field of research on scientific literacy assessment, filling a previously existing gap and producing results that are useful to researchers in the area. Although the subject has been widely studied, very few instruments assess scientific literacy, and these are mainly aimed at elementary school students. The instruments converge in some respects, such as the assessment of scientific literacy skills and the target audience. They diverge in others, such as the type of instrument, the item format, and the way individuals are scored and classified.

The results revealed studies that fully or partially omitted the presentation and identification of the instruments' validation processes. A considerable number of investigations applied instruments to target audiences whose contexts differ from those for which the instruments were developed, without adapting and validating them for the respective samples. In addition, many authors modified the categorization processes of the original instruments or created new ones. These circumstances call for great caution when using the results of these studies, and when using these instruments in the decision-making for which they were, or will be, applied.

Future research on this topic should therefore develop and properly validate new instruments for assessing scientific literacy that cover the different levels of education. These instruments should also establish clear and precise classification and categorization criteria, so that results can be compared and the field can advance.

ACKNOWLEDGMENTS

This work was funded by national funds through FCT — Fundação para a Ciência e a Tecnologia, I.P., under the scope of the PhD Research Grant in Education Sciences with reference UI/BD/151034/2021.

REFERENCES

Atta, H. B., & Aras, I. (2020). Developing an instrument for students scientific literacy. Journal of Physics: Conference Series, 1422(1), 012019. https://doi.org/10.1088/1742-6596/1422/1/012019

Briner, R., & Denyer, D. (2012). Systematic review and evidence synthesis as a practice and scholarship tool. In D. Rousseau (Ed.), Handbook of evidence-based management: companies, classrooms, and research (pp. 328-374). Oxford University Press.

Brossard, D., & Shanahan, J. (2006). Do they know what they read? Building a scientific literacy measurement instrument based on science media coverage. Science Communication, 28(1), 47-63.

Camargo, A. N. B. de, Pilar, F. D., Ribeiro, M. E. M., Fantinel, M., & Ramos, M. G. (2011). Alfabetização científica: a evolução ao longo da formação de licenciandos ingressantes, concluintes e de professores de química. Momento - Diálogos Em Educação, 20(2), 19-29.

Cartwright, N. M., Liddle, D. M., Arceneaux, B., Newton, G., & Monk, J. M. (2020). Assessing scientific literacy skill perceptions and practical capabilities in fourth year undergraduate biological science students. International Journal of Higher Education, 9(6), 64-76. https://doi.org/10.5430/ijhe.v9n6p64

Čipková, E., Karolčík, Š., & Scholzová, L. (2020). Are secondary school graduates prepared for the studies of natural sciences? Evaluation and analysis of the result of scientific literacy levels achieved by secondary school graduates. Research in Science and Technological Education, 38(2), 146-167. https://doi.org/10.1080/02635143.2019.1599846

Coppi, M. A., & Sousa, C. P. (2019a). Estudo da alfabetização científica de alunos do ensino médio de um colégio de São Paulo. Revista Eletrônica Científica Ensino Interdisciplinar, 5(15), 537-544. https://doi.org/10.21920/recei72019515537544

Coppi, M. A., & Sousa, C. P. de. (2019b). Estudo da alfabetização científica dos alunos do 9° ano do ensino fundamental de um Colégio Particular de São Paulo. Debates Em Educação, 11(23), 169-185. https://doi.org/10.28998/2175-6600.2019v11n23p169-185

DeBoer, G. E. (2000). Scientific literacy: another look at its historical and contemporary meanings and its relationship to science education reform. Journal of Research in Science Teaching, 37(6), 582-601. https://doi.org/10.1002/1098-2736(200008)37:6<582::AID-TEA5>3.0.CO;2-L

Fives, H., Huebner, W., Birnbaum, A. S., & Nicolich, M. (2014). Developing a measure of scientific literacy for middle school students. Science Education, 98(4), 549-580.

Galvão, T. F., & Pereira, M. G. (2014). Revisões sistemáticas da literatura: passos para sua elaboração. Epidemiologia e Serviços de Saúde, 23(1), 183-184. https://doi.org/10.5123/s1679-49742014000100018

Gomes, A. S. A., & Almeida, A. C. P. C. de. (2016). Letramento científico e consciência metacognitiva de grupos de professores em formação inicial e continuada: um estudo exploratório. Amazônia: Revista de Educação Em Ciências e Matemáticas, 12(24), 53-73. https://doi.org/10.18542/amazrecm.v12i24.3442

Gormally, C., Brickman, P., & Lutz, M. (2012). Developing a test of scientific literacy skills (TOSLS): measuring undergraduates’ evaluation of scientific information and arguments. CBE Life Sciences Education, 11(4), 364-377.

Gresczysczyn, M. C. C., Monteiro, E. L., & Filho, P. S. C. (2018). Determinação do nível de alfabetização científica de estudantes da etapa final do ensino médio e etapa inicial do ensino superior. Revista Brasileira de Ensino de Ciência e Tecnologia, 11(1), 192-208. https://doi.org/10.3895/rbect.v11n1.5631

Innatesari, D. K., Sajidan, S., & Sukarmin, S. (2019). The profile of students’ scientific inquiry literacy based on Scientific Inquiry Literacy Test (ScInqLiT). The 2nd Annual International Conference on Mathematics and Science Education, 1227, 012040. https://doi.org/10.1088/1742-6596/1227/1/012040

Jannah, A. M., Suwono, H., & Tenzer, A. (2020). Profile and factors affecting students’ scientific literacy of senior high schools. AIP Conference Proceedings, 2215, 070021. https://doi.org/10.1063/5.0000568

Jufri, A. W., Hakim, A., & Ramdani, A. (2019). Instrument development in measuring the scientific literacy integrated character level of junior high school students. International Seminar on Science Education, 1233, 012100. https://doi.org/10.1088/1742-6596/1233/1/012100

Koedsri, A., & Ngudgratoke, S. (2018). Diagnostic assessment of scientific literacy of lower secondary school students using G-DINA model. Re-Thinking Teacher Professional Education: Using Research Findings for Better Learning - 61st World Assembly ICET 2017, 268-273.

Kola, A. J., Opeyemi, A. A., & Olu, A. M. (2020). Assessment of scientific literacy skills of college of education students in Nigeria. American Journal of Social Sciences and Humanities, 5(1), 207-220. https://doi.org/10.20448/801.51.207.220

Laugksch, R. C., & Spargo, P. E. (1996a). Construction of a paper-and-pencil test of basic scientific literacy based on selected literacy goals recommended by the American Association for the Advancement of Science. Public Understanding of Science, 5(4), 331-359. https://doi.org/10.1088/0963-6625/5/4/003

Laugksch, R. C., & Spargo, P. E. (1996b). Development of a pool of scientific literacy test-items based on selected AAAS literacy goals. Science Education, 80(2), 121-143. https://doi.org/10.1002/(SICI)1098-237X(199604)80:2<121::AID-SCE1>3.0.CO;2-I

Laugksch, R. C., & Spargo, P. E. (1999). Scientific literacy of selected South African matriculants entering tertiary education: A baseline survey. South African Journal of Science, 95(10), 427-432.

Lima, A. M. D. L., & Garcia, R. N. (2015). A alfabetização científica de estudantes de licenciatura em ciências biológicas: um estudo de caso no contexto da formação inicial de professores. X Encontro Nacional de Pesquisa Em Educação Em Ciências - X ENPEC, 1-8.

Lima, J. S., & Garcia, R. N. (2013). Uma investigação sobre alfabetização científica no ensino médio no colégio de aplicação - UFRGS. Salão UFRGS 2013: SIC - XXV Salão de Iniciação Científica Da UFRGS.

McKeown, T. R. (2017). Validation study of the science literacy assessment: a measure to assess middle school students’ attitudes toward science and ability to think scientifically [Doctoral dissertation, Virginia Commonwealth University, Virginia, United States of America]. http://ovidsp.ovid.com/ovidweb.cgi?T=JS&PAGE=reference&D=psyc15&NEWS=N&AN=2018-09130-030

Miller, J. D. (1983). Scientific literacy: a conceptual and empirical review. Daedalus, 112(2), 29-48.

Mun, K., Lee, H., Kim, S. W., Choi, K., Choi, S. Y., & Krajcik, J. S. (2013). Cross-cultural comparison of perceptions on the global scientific literacy with Australian, Chinese and Korean middle school students. International Journal of Science and Mathematics Education, 13(2), 437-465. https://doi.org/10.1007/s10763-013-9492-y

Mun, K., Shin, N., Lee, H., Kim, S. W., Choi, K., Choi, S. Y., & Krajcik, J. S. (2015). Korean secondary students’ perception of scientific literacy as global citizens: using Global Scientific Literacy Questionnaire. International Journal of Science Education, 37(11), 1739-1766. https://doi.org/10.1080/09500693.2015.1045956

Nascimento-Schulze, C. M. (2006). Um estudo sobre alfabetização científica com jovens catarinenses. Psicologia - Teoria e Prática, 8(1), 95-106. https://www.redalyc.org/articulo.oa?id=1938/193818626006

Nascimento-Schulze, C. M., Camargo, B., & Wachelke, J. (2006). Alfabetização científica e representações sociais de estudantes de ensino médio sobre ciência e tecnologia. Arquivos Brasileiros de Psicologia, 58(2), 24-37.

Oliveira, W. F. A., & Silva-Forsberg, M. C. (2012). Níveis de alfabetização científica de estudantes da última série do ensino fundamental. Atas Do VIII Encontro Nacional de Pesquisa Em Educação Em Ciências - VIII ENPEC, 00-11. http://www.nutes.ufrj.br/abrapec/viiienpec/resumos/R0671-1.pdf

Özdem, Y., Çavaş, P., Çavaş, B., Çakıroğlu, J., & Ertepınar, H. (2010). An investigation of elementary students’ scientific literacy levels. Journal of Baltic Science Education, 9(1), 6-19.

Pramuda, A., Mundilarto, Kuswanto, H., & Hadiati, S. (2019). Effect of real-time physics organizer based smartphone and indigenous technology to students’ scientific literacy viewed from gender differences. International Journal of Instruction, 12(3), 253-270. https://doi.org/10.29333/iji.2019.12316a

Rachmatullah, A., Diana, S., & Rustaman, N. Y. (2016). Profile of middle school students on scientific literacy achievements by using scientific literacy assessments (SLA). AIP Conference Proceedings, 1708-08000(February 2016), 1-5. https://doi.org/10.1063/1.4941194

Rivas, M. I. E., Moço, M. C. de Q., & Junqueira, H. (2017). Avaliação do nível de alfabetização científica assessment of the level of scientific literacy. Revista Acadêmica Licencia&acturas, 5(2), 58-65.

Rundgren, C. J., Rundgren, S. N. C., Tseng, Y. H., Lin, P. L., & Chang, C. Y. (2010). Are you SLiM? Developing an instrument for civic scientific literacy measurement (SLiM) based on media coverage. Public Understanding of Science, 21(6), 759-773. https://doi.org/10.1177/0963662510377562

Santiago, D. D. da S. A., Nunes, A. O., & Alves, L. A. (2020). Letramento científico e crenças CTSA em estudantes de pedagogia. REPPE: Revista Do Programa de Pós-Graduação Em Ensino, 4(2), 210-236.

Shaffer, J. F., Ferguson, J., & Denaro, K. (2019). Use of the test of scientific literacy skills reveals that fundamental literacy is an important contributor to scientific literacy. CBE-Life Sciences Education, 18(3), 1-10. https://doi.org/10.1187/cbe.18-12-0238

Shamos, M. H. (1995). The myth of scientific literacy. Rutgers University Press.

Soobard, R., & Rannikmäe, M. (2011). Assessing student’s level of scientific literacy using interdisciplinary scenarios. Science Education International, 22(2), 133-144. https://eric.ed.gov/?id=EJ941672

Souza, K. J. P. (2019). Letramento científico: uma análise do uso social dos conhecimentos construídos nas ciências naturais e matemática. Master's dissertation, Universidade do Estado do Rio Grande do Norte, Mossoró, Brazil.

Utami, A. U., & Hariastuti, R. M. (2019). Analysis of science literacy capabilities through development Test of Scientific Literacy Skills (TOSLS) integrated Internet of Things (IOT) technology. Science Education and Application Journal (SEAJ) Pendidikan IPA Universitas Islam Lamongan, 1(1), 68-72.

Vizzotto, P. A. (2019). A proficiência científica de egressos do ensino médio ao utilizar a física para interpretar o cotidiano do trânsito. Doctoral thesis, Universidade Federal do Rio Grande do Sul, Porto Alegre, Brazil.

Vizzotto, P. A., & Del Pino, J. C. (2020a). Avaliação do nível de alfabetização científica de acadêmicos ingressantes e concluintes de cursos de licenciatura. Research, Society and Development, 9(5), e140953349. https://doi.org/10.33448/rsd-v9i5.3349

Vizzotto, P. A., & Del Pino, J. C. (2020b). Medida del nivel de alfabetización científica en alumnos recién ingresados y del último año de los cursos de física. Revista de Enseñanza de la Física, 32(1), 21-30.

Vizzotto, P. A., & Mackedanz, L. F. (2018). Teste de alfabetização científica básica: processo de redução e validação do instrumento na língua portuguesa. Revista Prática Docente, 3(2), 575-594. https://doi.org/10.23926/rpd.2526-2149.2018.v3.n2.p575-594.id251

Vizzotto, P. A., Rosa, L. S., Duarte, V. de M., & Mackedanz, L. F. (2020). O uso do Teste de Alfabetização Científica Básica em estudantes do ensino fundamental: análise da confiabilidade de medida nesse grupo. Research, Society and Development, 9(3), e79932447. https://doi.org/10.33448/rsd-v9i3.2447

Waldo, J. (2014). Application of the Test of Scientific Literacy Skills in the assessment of a general education natural science program. The Journal of General Education, 63(1), 1-14.

Wenning, C. J. (2007). Assessing inquiry skills as a component of scientific literacy. J. Phys. Tchr. Educ. Online, 4(2), 21-24.

DECLARATION OF CONFLICT OF INTEREST

Received: December 16, 2021; Accepted: September 15, 2022
