Estudos em Avaliação Educacional

Print version ISSN 0103-6831; online version ISSN 1984-932X

Est. Aval. Educ. vol. 34 São Paulo 2023 Epub Dec 29, 2023

https://doi.org/10.18222/eae.v34.9219_port

ARTICLES

ASSESSMENT PRACTICES IN HIGHER EDUCATION: A STUDY WITH PORTUGUESE FACULTY

I Centro de Investigação em Estudos da Criança da Universidade do Minho, Braga, Portugal; evalopesfernandes@ie.uminho.pt

II Centro de Investigação em Estudos da Criança da Universidade do Minho, Braga, Portugal; aflores@ie.uminho.pt

III Centro de Investigação em Estudos da Criança da Universidade do Minho, Braga, Portugal; irenecadime@ie.uminho.pt

IV Centro de Investigação em Estudos da Criança da Universidade do Minho, Braga, Portugal; ccoutinho@ie.uminho.pt

This paper examines the assessment practices and the influence of the Bologna Process in the process of changing practices of faculty from five Portuguese public universities (n = 185). Data were collected through a questionnaire comprising closed and open-ended questions. Findings indicate that Portuguese faculty use a diversity of assessment practices determined more by themselves than by external factors. Differences in assessment practices were found as a result of study cycles and fields of knowledge. This small-scale study suggests that Portuguese faculty have changed their assessment practices, but the impact of the Bologna Process is unclear.

KEYWORDS: ASSESSMENT PRACTICES; BOLOGNA PROCESS; FACULTY; HIGHER EDUCATION

INTRODUCTION

The past two decades have been especially fruitful in terms of goal setting and legislative change in European higher education (HE). In the European context, in addition to worldwide globalization trends and challenges, the Bologna Process (BP) brought about a series of specific changes aimed at creating a European Higher Education Area (EHEA), thus promoting the comparability of the standards and quality of higher-education qualifications.

In Portugal, the Decreto-Lei [Decree-Law] n. 74 (2006) (later amended by Decreto-Lei n. 107, 2008) provided for the application of Bologna Principles in HE institutions. Formally, Portugal has followed structural changes in study cycles according to European standardized criteria, and has implemented a quality improvement system. The changes involve a transition from a system based on “knowledge transmission” to one based on “student competence development”, with an emphasis on experimental work, project work, and transversal skills (Decreto-Lei n. 107, 2008). The goal of these changes is to foster more competitive learning environments, as well as conceptual changes regarding teaching (Reimann & Wilson, 2012), recognizing the central role of the student within a logic of autonomy, teamwork and active learning (Segers & Dochy, 2001). The Bologna Process has implied change in various dimensions, especially concerning curriculum flexibility and teaching and student work (Pereira & Flores, 2016).

Studies undertaken in the Portuguese HE context have shown the commitment of HE institutions to BP, as well as increased cooperation between Portugal and the European HE institutions (David & Abreu, 2017). However, some contradictions were also identified, such as: the apparent destruction of the binary system (university and polytechnic education) and the complex preservation of the national culture, language, education systems and institutional autonomy (David & Abreu, 2007); the successful realization of more formal aspects of the BP (Veiga & Amaral, 2008) and the maintenance of traditional assessment practices (Pires et al., 2013); and the prevalence of practices not aligned with the Bologna purposes (Pires et al., 2013; European Students’ Union [ESU], 2015; Barreira et al., 2015; Pereira et al., 2017; European Commission, 2018). Other findings concern mixed assessment methods, including more traditional methods alongside more innovative practices (Pires et al., 2013; Pereira et al., 2017; Flores & Pereira, 2019).

Despite the positive results, adapting HE programs to the Bologna teaching and learning perspective is still a challenge. In some cases, new and innovative assessment practices have been introduced towards a more student-centered approach. However, in most cases, assessment is still about “making statements about students’ weaknesses and strengths” (Earl & Katz, 2006, p. 4).

Traditionally, classroom assessment has focused on its summative function, on the assessment and measuring of learning, using assessment information to make and report judgements about learners’ performance which may encourage comparison and competition (Black & Wiliam, 1998). On the other hand, the formative function of assessment, which refers to how student learning can be improved (Black & Wiliam, 1998), has also been highlighted.

Although more traditional forms of assessment may be effective in specific contexts and purposes, they are not suitable for all assessment purposes and may encourage reproduction and memorization (Perrenoud, 1999; Biggs, 2003). In turn, the so-called student-centered assessment methods (Webber & Tschepikow, 2013; Myers & Myers, 2015) enable the development of technical and problem-solving skills, e.g., fostering greater student involvement in the learning process (Myers & Myers, 2015). These methods imply more global tasks that have to be developed over time. They can simultaneously include the process and product and the individual and collective dimensions. In addition, they stimulate autonomy, collaboration, responsibility, constructive feedback, interaction with stakeholders and peers, knowledge building (Webber & Tschepikow, 2013), skills development, and the deepening of learning (Brew et al., 2009). These methods include, for example, experimental hands-on work, project work or reflections (Webber & Tschepikow, 2013; Struyven et al., 2005).

Regardless of the assessment approach chosen, it is important to deal “with all aspects of assessment in an integrated way” (Black & Wiliam, 2018, p. 552). The selection of assessment strategies represents a key component of the teaching and learning process. This selection should result in a goal-oriented questioning process in the program or course (Hadji, 2001), defining which questions should be answered through the assessment. Therefore, it is essential to use a variety of assessment methods based on their suitability for teaching and learning goals and the nature of courses and curricular units (Earl & Katz, 2006; Black & Wiliam, 2018).

Effective and successful questioning within the EHEA context implies proper goal formulation and the recognition of assessment as a cornerstone of the teaching and learning process. With the Bologna Process, there is an expectation of more diverse assessment methods (i.e., alternative methods, including those that involve students) and the adoption of other approaches to assessment, such as assessment for learning (McDowell et al., 2011), recognizing the role of feedback in the assessment and learning process (Black & Wiliam, 1998; Hattie & Timperley, 2007; Carless et al., 2011). Within the context of learner-centered practices, the literature also recognizes the role of the classroom in the organization of innovative and facilitated learning environments (Black & Wiliam, 1998) and in the development of innovative ways of structuring teaching and assessment (Fernandes, 2015).

This view has gained prominence in the context of the BP (Flores & Simão, 2007), challenging faculty to promote more questioning, innovative and creative learning opportunities (Zabalza, 2008). However, it is not clear whether faculty have fully embraced this (Webber & Tschepikow, 2013).

This paper¹ reports on research aimed at understanding HE assessment practices after the BP, particularly concerning possible changes and the meaning of these changes, taking into account the context of university teaching.

METHODS

This paper draws upon a broader Ph.D. research project in educational sciences, funded by the Fundação para a Ciência e a Tecnologia [National Foundation for Science and Technology] (Ref. SFRH/BD/103291/2014), which is itself part of a broader three-year research project entitled “Assessment in higher education: The potential of alternative methods”, funded by Fundação Nacional para a Ciência e a Tecnologia [National Foundation for Science and Technology] (Ref. PTDC/MHC-CED/2703/2014). The study reported in this paper aimed to identify the assessment practices of faculty and to investigate the influence of the BP on those practices. In particular, the following research questions are addressed:

1) What kind of assessment practices are used by Portuguese faculty after the implementation of the BP?

2) What effect do faculty characteristics and teaching contexts have on their responses to the scale on assessment practices?

3) What was the influence of the BP on the process of change of faculty’s assessment practices?

A survey was carried out in five Portuguese public universities, combining closed and open-ended questions.

Participants

The questionnaire was administered between February and July 2017 to a convenience sample of faculty from five Portuguese public universities. The sample consisted of 185 faculty from various teaching cycles (i.e., undergraduate, master’s, integrated master’s, and Ph.D. levels) in five different scientific areas (i.e., exact sciences, engineering and technology sciences, medical and health sciences, social sciences, and humanities). A little more than four-fifths of the sample (83.8%) taught in undergraduate degree programs; 77.3% taught in master’s degree programs; 41.5% taught in integrated master’s degree programs; 55.8% taught in Ph.D. courses; and 1.7% in other programs (e.g., non-degree awarding courses, professional courses, among others). Female participants (47.0%) outnumbered male participants (40.0%), and more than half of the sample were over 45 years old (55.7%). Most were associate/assistant professors (71.3%), held a Ph.D. (74.6%) and had pedagogical training (63.2%). Regarding their experience as academics, most had more than 15 years of experience (70.8%) (see Table 1).

TABLE 1 Demographic characteristics of participants

| DEMOGRAPHIC CHARACTERISTICS | N | % |
|---|---|---|
| University | | |
| A | 36 | 19.5 |
| B | 34 | 18.4 |
| C | 60 | 32.4 |
| D | 36 | 19.5 |
| E | 19 | 10.2 |
| Gender | | |
| Male | 74 | 40.0 |
| Female | 87 | 47.0 |
| Missing | 24 | 13.0 |
| Age | | |
| Under 45 years old | 82 | 44.3 |
| Over 45 years old | 103 | 55.7 |
| Cycle of studies | | |
| Undergraduate degree | | |
| Yes | 155 | 83.8 |
| No | 30 | 16.2 |
| Integrated master’s degree | | |
| Yes | 73 | 39.4 |
| No | 103 | 55.7 |
| Missing | 9 | 4.9 |
| Master’s degree | | |
| Yes | 143 | 77.3 |
| No | 42 | 22.7 |
| Ph.D. | | |
| Yes | 101 | 54.6 |
| No | 80 | 43.2 |
| Missing | 4 | 2.2 |
| Field of knowledge | | |
| Medical and health sciences | 21 | 11.4 |
| Exact sciences | 16 | 8.6 |
| Engineering and technology sciences | 50 | 27.0 |
| Social sciences | 77 | 41.6 |
| Humanities | 21 | 11.4 |
| Professional category | | |
| Full professor | 10 | 5.4 |
| Associate/assistant professor with habilitation | 19 | 10.3 |
| Associate/assistant professor | 132 | 71.3 |
| Other | 24 | 13.0 |
| Teaching experience | | |
| Less than 15 years | 54 | 29.2 |
| More than 15 years | 131 | 70.8 |
| Pedagogical training | | |
| Yes | 117 | 63.2 |
| No | 63 | 34.1 |
| Missing | 5 | 2.7 |
| Total | 185 | 100.0 |

Source: Authors’ elaboration.
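Some percentages in the Participants paragraph (e.g., 55.8% teaching in Ph.D. courses) differ slightly from those in Table 1 (54.6%). The arithmetic suggests that the text may report percentages over valid responses only, while the table uses the full sample of 185; this is an inference from the counts, not something the source states. A minimal Python sketch of the two calculations follows; the function name `percentages` is hypothetical.

```python
def percentages(count, total_n, missing=0):
    """Return (percentage of full sample, percentage of valid responses).

    count    -- number of respondents in the category
    total_n  -- full sample size
    missing  -- respondents who did not answer the question
    """
    valid_n = total_n - missing
    if valid_n <= 0:
        raise ValueError("no valid responses")
    total_pct = round(100 * count / total_n, 1)
    valid_pct = round(100 * count / valid_n, 1)
    return total_pct, valid_pct


# Ph.D. teaching: 101 "yes" answers, 185 respondents, 4 missing (Table 1).
phd_total, phd_valid = percentages(101, 185, missing=4)
print(phd_total, phd_valid)  # 54.6 55.8

# Integrated master's: 73 "yes" answers, 9 missing (Table 1).
_, integrated_valid = percentages(73, 185, missing=9)
print(integrated_valid)  # 41.5
```

Under this reading, both the in-text figures and the table figures are consistent with the same underlying counts.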

Instrument

The scale “assessment practices” consists of 30 items (see Table A1). The version used in this study is an adaptation of a section of the socio-professional questionnaire from the Conceptions of Assessment Inventory (CAI), originally used in the clinical context of nursing (Gonçalves, 2016), adapted for use with faculty across the spectrum of university subjects. A group of educational science experts evaluated the items, ensuring their alignment with the different knowledge areas, and some contextual adjustments were made (adapting the original text to university teaching in general). The data were complemented with two (closed and open-ended) questions about possible changes in assessment practices: 1) Have you changed the way you assess your students over your career? 2) In your opinion, has the implementation of the BP contributed to change assessment practices in HE? For each question, the following answer choices were available: yes, no, and maybe, and the participants were encouraged to justify their answers (open-ended questions).

The project was approved by the Committee of Ethics for Research on Social Sciences and Humanities at the Universidade do Minho (Ref. SECSH 035/2016 and SECSH 036/2016). All participants were fully informed of the project’s goals. A signed written consent and permission document was obtained from the participants.

ANALYSIS

Statistical analyses were performed using the software IBM SPSS Statistics 24. Factor analysis is a technique that can be used not only to understand the structure of a measure, but also to reduce data to a more manageable set of variables (factors or dimensions), while retaining as much original information as possible (Field, 2009). Therefore, exploratory factor analysis (EFA) was performed, using the principal component analysis method with varimax rotation (Field, 2009). The assumptions of sample size adequacy and sphericity were verified. In this study, this analysis served two purposes: (a) to obtain empirical evidence for the underlying structure of the scale on assessment practices, by identifying core dimensions, based on the intercorrelations between the items; and (b) to reduce the data obtained through the 30 items to more manageable scores that are interpretable and represent the core dimensions of the assessment practices. The number of factors and items to be retained was based on two criteria: factors with eigenvalues greater than 1; and items with factor loadings greater than 0.35. The reliability of the subscales was tested with Cronbach’s alpha. Because this is an exploratory study, alpha values greater than 0.60 were considered acceptable (Hair et al., 2009).
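The retention criteria and the reliability check described above can be illustrated with a short Python sketch. It is not the authors' SPSS procedure: the function names, the eigenvalues, the loadings and the toy item scores are all hypothetical values invented for illustration. Only the thresholds (eigenvalue > 1, loading > 0.35, alpha > 0.60) come from the text.

```python
from statistics import variance


def retained_factors(eigenvalues, threshold=1.0):
    """Return 1-based indices of factors whose eigenvalue exceeds the threshold."""
    return [i for i, ev in enumerate(eigenvalues, start=1) if ev > threshold]


def assign_items(loadings, cutoff=0.35):
    """Assign each item to the factor with its largest absolute loading.

    Items whose largest absolute loading does not exceed the cutoff get None.
    """
    assignment = {}
    for item, row in loadings.items():
        best = max(range(len(row)), key=lambda j: abs(row[j]))
        assignment[item] = best + 1 if abs(row[best]) > cutoff else None
    return assignment


def cronbach_alpha(items):
    """Cronbach's alpha; `items` is a list of columns (one score list per item)."""
    k = len(items)
    if k < 2:
        raise ValueError("Cronbach's alpha needs at least two items")
    totals = [sum(row) for row in zip(*items)]  # total score per respondent
    item_variance_sum = sum(variance(col) for col in items)
    return k / (k - 1) * (1 - item_variance_sum / variance(totals))


# Hypothetical eigenvalues: only the first three exceed 1.
print(retained_factors([4.2, 2.1, 1.3, 0.9, 0.5]))  # [1, 2, 3]

# Hypothetical rotated loadings for two items on three factors.
print(assign_items({"item_a": [0.72, 0.10, 0.05],
                    "item_b": [0.20, 0.30, 0.15]}))

# Toy scores for three items answered by four respondents.
toy_items = [[1, 2, 3, 4], [2, 3, 4, 5], [1, 3, 3, 5]]
alpha = cronbach_alpha(toy_items)
print(round(alpha, 3), alpha > 0.60)
```

In practice the eigenvalues and loadings would come from the factor extraction itself; the sketch only shows how the two retention rules and the alpha threshold are applied once those numbers are available.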

The mean score of the items was calculated for each dimension, and the relationship between these dimensions and participants’ demographic and professional characteristics was explored. To investigate the effects of age, gender, years of experience, professional category, field of knowledge, cycles of studies taught, and pedagogical training on the assessment practices, multivariate analysis of variance (MANOVA) was used. The assumptions of independence of observations, univariate normality and homogeneity of the variance-covariance matrices were verified (Field, 2009). Partial eta-squared (ηp²) values were calculated as a measure of effect size and interpreted using the following guidelines: small effect, ηp² > 0.01; medium effect, ηp² > 0.06; large effect, ηp² > 0.14 (Cohen, 1988). For the age variable, two groups were considered: 1) faculty under 45 years of age; 2) faculty over 45 years of age. For professional experience, length of service was grouped as follows: 1) faculty with less than 15 years of experience; 2) faculty with more than 15 years of experience. The cut-off points for the variables “age” and “professional experience” were defined based on the date of implementation of the Bologna Process in Portugal. Therefore, older faculty and faculty with more years of experience most likely had experience in the two periods (before and after the Bologna Process), whereas the younger and less experienced faculty most likely did not. The professional categories “associate professor with habilitation” and “assistant professor with habilitation” were merged, as were “associate professor” and “assistant professor”; the fields of knowledge of exact sciences and engineering and technology sciences were likewise grouped, in order to obtain more adequate units of analysis.
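The effect-size guidelines above can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' SPSS output: it recovers ηp² for a univariate test from the F statistic and its degrees of freedom through the standard identity ηp² = F·df1 / (F·df1 + df2), and then applies the cited thresholds. The function names and the label "negligible" for values at or below 0.01 are assumptions introduced here.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared of a univariate F test: F*df1 / (F*df1 + df2)."""
    if f_value < 0 or df_effect <= 0 or df_error <= 0:
        raise ValueError("F must be non-negative and both df positive")
    return (f_value * df_effect) / (f_value * df_effect + df_error)


def effect_size_label(eta_p2):
    """Label an effect using the thresholds cited in the text (Cohen, 1988)."""
    if eta_p2 > 0.14:
        return "large"
    if eta_p2 > 0.06:
        return "medium"
    if eta_p2 > 0.01:
        return "small"
    return "negligible"  # label assumed here; the text gives no name for this range


# Univariate test from Table 3 (master's level, dimension 1): F(1, 160) = 6.725.
eta = partial_eta_squared(6.725, 1, 160)
print(round(eta, 3), effect_size_label(eta))  # 0.04 small
```

Applying the same function to the other univariate tests in Table 3 gives values that match the reported ηp² column to three decimals.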

Data collected through the open-ended questions of the questionnaire were analyzed through content analysis guided by the principles of completeness, representativeness, consistency, exclusivity and relevance (Bardin, 2009), and by recognizing the interactive nature of the data analysis (Miles & Huberman, 1994). A mixed approach and the definition of more general categories were favored (Bardin, 2009; Esteves, 2006), combining an inductive perspective (emergent character of the data) (Cho & Lee, 2014) with a deductive perspective, through the definition of categories of analysis in line with the research goals and the theoretical framework (Ezzy, 2002). The categories of analysis were semantic (Miles & Huberman, 1994).

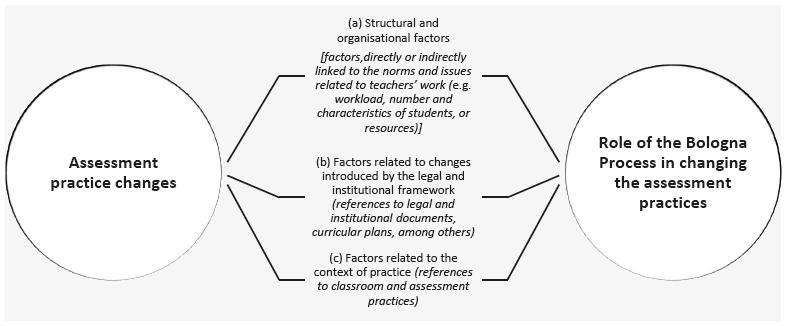

The analysis of the participants’ discourse, combined with a critical review of the literature, supported the following central dimensions: 1) Changes in assessment practices; 2) Influence of the BP on changes in assessment practices. Each dimension gave rise to a set of themes and categories that mirrored the questionnaire protocol, organized by the answers “Yes”, “No” and “Maybe”: (a) structural and organizational factors; (b) factors related to the changes introduced by the legal and institutional framework; and (c) factors related to the context of practice. The first category covers references to factors linked, directly or indirectly, to the (more or less challenging) circumstances in which faculty work is carried out (e.g., workload, number and characteristics of students, and resources). The second category covers references to legal and institutional documents, as well as curricular plans, among others. Finally, the third category, the context of practice, covers references to the classroom and to assessment practices.

RESULTS

The results of the EFA suggested that component 1 represented the “engagement and participation of students in the assessment”,² component 2 represented the “use of assessment by the faculty in the teaching and learning process”,³ and component 3 represented the “assessment as a process determined by external factors”⁴ (see Table A1 for detailed results). These three components reflect, on the one hand, the importance of student involvement in the assessment process (European Commission, 2013). On the other hand, they reflect how faculty use assessment, recognizing that the way they assess students (Brown, 2005) and their use of assessment information (Earl & Katz, 2006) have serious implications for student learning. Nevertheless, the assessment is not immune to the context, rules and procedures outside the classroom as “faculty’s decisions on assessment strategies are closely related to the opportunities and limitations offered by normative and institutional decisions” (Ion & Cano, 2011, p. 167). The three components explained 46.57% of the variance. All items had high factor loadings in the respective component. The items of each factor revealed an adequate internal consistency, supporting the reliability of the obtained scores. Cronbach’s alpha was higher than 0.60 in all three components (see Table A1).

Table 2 presents the descriptive statistics for faculty’s assessment practices. The assessment practices associated with the use of assessment in the teaching and learning process were the most used, while assessment practices determined by external factors were the least used (see Table 2). The descriptive statistics also reveal a positive trend in the use of practices related to the involvement and participation of students in the assessment process.

TABLE 2 Descriptive statistics of the faculty’s assessment practices

| | N | % | MINIMUM | MAXIMUM | MEAN | SD | MEDIAN | Q1 | Q3 |
|---|---|---|---|---|---|---|---|---|---|
| 1. Students’ engagement and participation in assessment | 172 | 93 | 1.29 | 3.93 | 2.71 | 0.49 | 2.71 | 2.43 | 3.00 |
| 2. Use of assessment by faculty in the teaching and learning process | 172 | 93 | 2.00 | 4.00 | 3.13 | 0.38 | 3.10 | 2.90 | 3.40 |
| 3. Assessment as a process determined by external factors | 179 | 96.8 | 1.00 | 3.67 | 2.69 | 0.57 | 2.67 | 2.33 | 3.00 |

Source: Authors’ elaboration.

Note: Q1 (first quartile); Q3 (third quartile); SD (standard deviation).

The MANOVA results indicate that there is no significant effect of age, gender, years of experience as faculty, professional category or participation in pedagogical training on the different assessment practices. Concerning the influence of study cycles on the use of different assessment practices, multivariate tests revealed no statistically significant differences between faculty who teach in undergraduate, integrated master’s and Ph.D. programs and those who do not (see Table A2 for descriptive statistics).

However, the results of the MANOVA indicated statistically significant differences, with a medium effect size, between faculty who teach in master’s degree programs and those who do not (Wilks’ Λ = .931, F(3, 158) = 3.876, p = .010, ηp² = .069). The univariate tests indicated that the faculty who teach in this cycle of studies attached more importance to the practices associated with the involvement and participation of students in the assessment, and to the practices associated with the use of assessment by the faculty in the teaching and learning process. They attached less importance to practices determined by external factors (see Table 3).

With regard to the field of knowledge, multivariate tests revealed statistically significant differences, although with a small effect size, in the use of different assessment practices (Wilks’ Λ = 0.876, F(9, 379.814) = 2.366, p = 0.013, ηp² = 0.043). The results of the univariate tests (see Table 3) and the corresponding pairwise comparisons suggested a greater use of practices associated with the involvement and participation of students in the assessment process by faculty who teach in the social sciences compared to those who teach in exact sciences and engineering and technology sciences (p = 0.031). It was also possible to identify a greater use of assessment practices determined by external factors among faculty who teach in exact sciences and engineering and technology sciences compared to social sciences faculty (p = 0.029).
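The two multivariate effect sizes reported above are numerically consistent with one common conversion from Wilks' lambda (Λ), ηp² = 1 − Λ^(1/s), where s is the smaller of the number of dependent variables and the degrees of freedom of the effect. The sketch below assumes three dependent variables (the three components), an effect df of 1 for the master's comparison (two groups) and 3 for field of knowledge (four groups after merging exact sciences with engineering and technology sciences). The text does not state which formula the software applied, so this is a plausibility check under those assumptions, and the function name is hypothetical.

```python
def wilks_to_partial_eta_squared(wilks_lambda, n_dependent, df_effect):
    """Convert Wilks' lambda to a multivariate partial eta squared.

    Uses eta_p^2 = 1 - lambda ** (1 / s), with s = min(n_dependent, df_effect).
    """
    if not 0 < wilks_lambda <= 1:
        raise ValueError("Wilks' lambda must lie in (0, 1]")
    s = min(n_dependent, df_effect)
    if s < 1:
        raise ValueError("n_dependent and df_effect must be at least 1")
    return 1 - wilks_lambda ** (1 / s)


# Master's level (teaches vs. does not teach): lambda = .931, 3 DVs, df = 1.
print(round(wilks_to_partial_eta_squared(0.931, 3, 1), 3))  # 0.069

# Field of knowledge (four groups): lambda = 0.876, 3 DVs, df = 3.
print(round(wilks_to_partial_eta_squared(0.876, 3, 3), 3))  # 0.043
```

Both results agree with the values reported in the text, and with the "medium" and "small" labels under the thresholds given in the Analysis section.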

TABLE 3 Results of the univariate tests applied to the effect of study cycle (master’s level) and field of knowledge on assessment practices

| | STUDY CYCLE: MASTER’S LEVEL | | | | | FIELD OF KNOWLEDGE | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | Yes M (SD) | No M (SD) | F (df1, df2) | p | ηp² | ES & ETS M (SD) | MHS M (SD) | SS M (SD) | H M (SD) | F (df1, df2) | p | ηp² |
| 1. Student engagement and participation in the assessment | 2.76 (0.476) | 2.52 (0.513) | 6.725 (1, 160) | 0.010 | 0.040 | 2.56 (0.459) | 2.72 (0.484) | 2.81 (0.547) | 2.83 (0.233) | 3.080 (3, 158) | 0.029 | 0.055 |
| 2. Assessment use by faculty in the teaching and learning process | 3.17 (0.373) | 3.01 (0.427) | 4.484 (1, 160) | 0.036 | 0.027 | 3.06 (0.391) | 3.10 (0.419) | 3.16 (0.393) | 3.34 (0.257) | 2.548 (3, 158) | 0.058 | 0.046 |
| 3. Assessment as a process determined by external factors | 2.62 (0.588) | 2.90 (0.482) | 6.519 (1, 160) | 0.012 | 0.039 | 2.82 (0.500) | 2.82 (0.556) | 2.53 (0.631) | 2.67 (0.511) | 3.158 (3, 158) | 0.026 | 0.057 |

Source: Authors’ elaboration.

Note: M (mean); SD (standard deviation); df (degrees of freedom); p (p-value); ηp² (partial eta squared); ES (exact sciences); ETS (engineering and technology sciences); MHS (medical and health sciences); SS (social sciences); H (humanities).

ASSESSMENT PRACTICES CHANGES AND THE ROLE OF THE BOLOGNA PROCESS

Assessment is a strategic asset for higher education organizations. Faculty’s choices of assessment strategies are a key factor in students’ academic success. Good assessment can be motivating and productive for students, helping them know “how well they are doing and what else they need to do” (Brown, 1999, p. 3). On the other hand, poor assessment may be “tedious, meaningless, grueling and counterproductive” (Brown, 1999, p. 4).

Faculty were also asked about possible assessment changes after the BP was implemented. Most participants (85.9%) said that they have changed the way they assess their students over their career; 9.8% reported no change at all, and 4.3% responded “maybe” (see Table 4). When asked whether the implementation of the BP contributed to changing their assessment practices, 47.8% responded positively, 29.1% said “no”, and 23.1% responded “maybe” (see Table 4).

TABLE 4 Assessment practice changes and the contribution of BP

| | YES | | NO | | MAYBE | | MISSING | TOTAL |
|---|---|---|---|---|---|---|---|---|
| | n | % | n | % | n | % | n | n |
| I have changed the way I assess my students. | 158 | 85.9 | 18 | 9.8 | 8 | 4.3 | 1 | 185 |
| The implementation of the BP has contributed to change my assessment practices. | 87 | 47.8 | 53 | 29.1 | 42 | 23.1 | 3 | 185 |

Source: Authors’ elaboration.

Assessment practices changes

Factors related to the context of practice are prevalent when it comes to assessment practices changes. Structural and organizational factors are considered less influential (see Figure 1). The characteristics and number of students, the type and content of courses, the technical and material conditions, and collaborative work were the main factors related to the teaching conditions identified by faculty who said that they had changed their assessment practices:

The decrease in the number of students per class allowed me to provide a more individualized service (whether in individual or group work) and more regular and personalized feedback. Collaborative work with my colleagues and the joint teaching of specific courses also brought me into contact with other assessment practices I have been adopting. (Q59).

The participants also identified changes introduced by the legal and institutional framework, e.g., the introduction of the BP, changes imposed by institutions, and assessment processes:

The BP fostered other forms of assessment, besides traditional examinations. (Q18).

As a result of teaching policies, HE funding and the enhancement of my academic career, I was forced to abandon laboratory and/or practical classes. (Q89).

Issues such as the importance of training experience, self-learning, experience as faculty, experimentation and the introduction of new assessment models were also highlighted:

Through the teaching-learning process, I felt the need, because of the results, to change the assessment criteria, processes and methodologies in the following years in order to obtain better results. (Q157).

I have diversified and adapted my assessment strategies to students’ profiles. I have also intensified self-assessment practices and student assessment in my practice. (Q168).

On the other hand, the faculty who have not changed their assessment practices stress their lack of power to introduce changes in the curricular units and the absence of changes in the institutional context. As for context-related factors, interestingly, the belief that faculty assess students in a suitable way prevails. Some responses, however, show a willingness and a need to implement changes in assessment processes, as illustrated in the quotes below:

I believe that the way I assess continues to be more reliable. (Q75).

So far, I have not modified my assessment practices but I am convinced that in small classes it is necessary to implement new forms of assessment that can help students develop more skills. (Q119).

The context’s influence, students’ characteristics, the study cycle, and the contents to be taught were highlighted by the faculty who responded “maybe” when asked whether they had changed their assessment practices. Changes related to institutional regulations, the focus on the student and on the teaching and learning process, as well as the coexistence of a diversity of assessment methods, were also emphasized:

I continue to attach great importance to written tests because they give me the most objective and fair way of assessing the student’s work as a whole. Because I teach an engineering course, I also attach great relevance to practical and experimental works, which enhance more appealing and proactive learning. Such work tends to be developed in groups because in engineering it is also important to enhance the student’s ability to interact and work with others. (Q84).

The role of the Bologna Process in faculty’s assessment practices

Teaching and learning practice emerges as a core factor in the BP’s contribution to changing assessment practices. However, structural and organizational conditions assume particular relevance for the participants who do not recognize the BP’s contribution to changing assessment practices (see Figure 1).

The receptivity of institutions to innovative assessment practices, the changes in students’ profiles, and the existence of smaller classes were the main aspects highlighted by the participants who recognized the impact of the BP on assessment practice changes. However, a deterioration of teaching conditions was also identified:

Because the kind of students we have forces us to take action to update our teaching and assessment skills. Bologna has created or questioned what we were doing, and I think reality is changing. (Q119).

At first, I believe that Bologna drew attention to the need to diversify the forms of assessment and adapt to the “new” student-centered learning paradigm. In practice, with the reduction of contact hours and with huge classes, this initial effect ended up fading in some courses. (Q184).

The formal changes imposed by Bologna (e.g., six-month courses, ECTS, among others) and the standardization of assessment criteria and programs were emphasized by the participants. Nevertheless, these changes have had both positive and negative implications:

The institutions allowed faculty to use less conventional methods, classroom simulations, debates, presentations using technology, action research, projects, etc. (Q25).

I believe the changes are mostly in formal processes (e.g., documents/course files...) than eventually in the conceptions/practices. Continuous assessment is, for example, more common after Bologna, but more often than not, it just leads to a greater number of assessment criteria (e.g., increasing the number of tests). (Q177).

This ambivalence was prevalent in their responses. The positive changes highlighted by the participants included the introduction of innovative assessment methods with a greater focus on student participation, the use of formative and continuous assessment to the detriment of traditional assessment methods, the development of projects outside the classroom and the university itself, competency-based assessment, and feedback. A more negative perspective coexisted with this view, pointing to the reduction in training hours and program length, the uniformity of teaching practices, and the lack of depth and effectiveness in teaching and assessment practices.

I fully agree, assessment became more directed to the acquisition of (practical) competencies and, whenever necessary, to the use of continuous assessment, with feedback in every assessment. (Q8).

Though rhetorical in many cases, it introduced the need to think about learning and to focus on working with students in the development of activities, not in the old scheme of theoretical classes that nowadays (even due to technological change) students will not bear. (Q172).

It has contributed in a very negative way, with disastrous consequences. Course length is less and less limited. In law school, many students finish the masters’ course with less quality and less knowledge than in the previous programs. (Q31).

In addition, the large number of students per class, the characteristics of students (e.g., lack of autonomy), the resistance on the part of universities to the change process, the lack of resources, and the deficient preparation of the system itself were highlighted by the participants who consider that Bologna had no impact on assessment practices:

In my opinion, students did not assimilate the spirit of the reform implemented by the BP. I think students do not have the autonomy in study and research required by the BP. (Q91).

My experience is that the university resisted change, either by the natural resistance of its actors (faculty, students, and formal leaders), or by the lack of material and human resources needed for an effective implementation of the BP. (Q165).

Additionally, some participants consider Bologna an “administrative formality”, a formal transition that modifies official documents (e.g., curricular plans) but lacks the desired impact on assessment practices:

There was a change in terminology (e.g., the degrees became known as master’s degrees), but in practical terms, the changes in assessment seem insignificant. (Q75).

As far as the teaching and learning practice is concerned, the changes were also “cosmetic”, the discourse changed, but practice did not, and in some cases the changes were made to suit the convenience of the different actors. Often, when these changes do exist, they are “more rhetorical than practical” and have at times resulted from the individual and collective efforts of faculty who tend to follow a more formative logic, though not necessarily motivated by the BP:

The changes in assessment practices were more rhetorical than practical. The changes are essentially a result of faculty’s individual and/or collective work (discipline groups, teams, etc.). (Q174).

The number of students per class, the “closed logic” of some courses, and the resources available were pointed out as structural and organizational factors by the faculty who answered “maybe”. These participants also highlighted the “semesterization” and condensation of courses (changes introduced by the legal and institutional framework), the lack of preparation of faculty and students, the absence of structural changes in terms of assessment, and the prevalence of summative training (the practice’s context). The emergence of more formative practices, the focus on the student and the teaching-learning process, greater concern with assessment, and greater student autonomy were also identified:

Perhaps it has contributed to an increased variety of students and to continuous assessment, with more assessments over the semester/year. But essentially, I think the logic of the written exam/test and written works with oral presentations was kept. (Q74).

It should have contributed to changing assessment practices, but this has not been the case (except for a few cases), just as with teaching methods. There was an adaptation of teaching to the BP, but not a real implementation of it. (Q163).

DISCUSSION

The Bologna Process and the creation of the EHEA challenged European countries, including Portugal, to change their teaching-learning practices, focusing on student-centered pedagogies, problem-solving initiatives, and innovative assessment practices. This requires adjusting traditional teaching and learning standards based on knowledge transmission, as well as moving towards more innovative and creative forms of teaching, learning and assessment. Assessment must be differentiated to respond positively to students’ diverse backgrounds and abilities (Scott et al., 2014). This study aimed to examine faculty’s assessment practices and the effects that the implementation of the BP had on those practices. A scale on the faculty’s assessment practices was used. Findings suggest that the measure presents adequate psychometric properties and might therefore be a useful tool to measure faculty’s assessment practices in HE. Findings also indicate the existence of diverse assessment practices, with a prevalence of practices determined by the faculty themselves and a lower incidence of practices determined by external factors. The influence of the study cycle and knowledge area on the use of different assessment practices was also identified. There were also indications that faculty who teach in master’s programs make greater use of practices associated with student involvement and participation in assessment, as well as of practices determined more by the faculty than by external factors.

In addition, assessment practices associated with student involvement and participation in assessment were found to be used more by social sciences faculty than by exact sciences and engineering and technology sciences faculty. The study also identified a greater use of assessment practices determined by external factors among exact sciences and engineering and technology sciences faculty compared with social sciences faculty.

Faculty were also asked whether their assessment practices had changed and whether the BP had contributed to that change. Most of the faculty reported having changed the way they assess students over their careers; interestingly, however, fewer than half of the participants recognized the BP’s importance in changing assessment practices. Factors related to structural and organizational aspects (e.g., number of students per class, material conditions, semesterization), to changes introduced by the legal and institutional framework (e.g., legal and institutional documents, curricular plans, among others), and to the context of practice (e.g., assessment conceptions and use) explain differences between optimistic and skeptical faculty regarding the BP’s contribution to changing assessment practices. Many participants consider that Bologna may not have been a key moment in changing - or at least challenging - their assessment practices. However, its contribution to the discussion of, and attention dedicated to, teaching and learning and, especially, assessment issues seems undeniable. These results highlight the complexity of higher education scenarios (Zabalza, 2004) and assessment (Brown & Knight, 1994), as well as the complexity of adapting academic practices to the Bologna principles (European Commission, 2018).

The findings reinforce the importance of faculty’s use of assessment in the teaching and learning process - for example, to gauge students’ degree of understanding of an issue or subject, or to plan future learning activities. In this context, feedback assumes particular relevance for improving students’ learning. Findings also corroborate the crucial contribution of assessment, and of faculty’s choices and strategies, to the success of students’ learning (Brown, 1999). The role of student engagement and participation in the assessment process is another relevant finding. From a perspective of improvement rather than measurement, the participative construction of assessment criteria contributes to an understanding of what is expected and to students’ self-regulation (Bonniol & Vial, 1997). The role of curricular plans and the need to measure and attribute a classification or grade also emerge, though with less relevance, in faculty’s assessment practices.

Additionally, the participants’ voices reflect the different international perspectives in the field of assessment, sometimes within a summative and verification logic (Perrenoud, 1999) and others within a student-centered assessment logic (Webber, 2012), recognizing the connection between assessment conceptions and practices, as well as their influence on students’ learning (Gibbs, 1999; Light & Cox, 2003).

The BP represented an opportunity for the modernization and improvement of HE. However, there are two mutually opposing views: “the opinion that Bologna was an evolution towards creating better learning conditions, with curricula more adapted to reality” and the argument that Bologna represented “a simple reduction in the length of undergraduate and master’s programs that by no means altered teaching programs or methodologies” (Justino et al., 2017, p. 1).

This study’s findings also point to a number of tensions regarding the impact of Bologna on assessment practices at various levels: factors related to structural and organizational characteristics, to changes introduced by the legal and institutional framework, and to the context of practice. Bologna may be seen as an opportunity to introduce some necessary changes to respond to these tensions, but the literature and the results of this research project show the need for a more systematic evaluation of, and a deeper reflection on, the changes that have occurred in curriculum, pedagogy and assessment. The success of the BP in terms of how assessment is experienced by faculty is not evident in the data arising from this study. Nevertheless, the ambition of the BP is a positive one, and further efforts by universities are needed to mitigate a purely summative assessment approach. This research provides evidence of the role that faculty’s working conditions and workload, as well as their conceptions of teaching, learning and assessment, play in their assessment practices.

ACKNOWLEDGMENTS/FUNDING

This work was supported by Portuguese national funds through the Fundação para a Ciência e a Tecnologia [Foundation for Science and Technology] and co-financed by European Regional Development Fund (ERDF) through the Competitiveness and Internationalization Operational Program (POCI) with the reference POCI-01-0145-FEDER-007562, under grant number PTDC/MHCCED/2703/2014 (project: “Assessment in higher education: The potential of alternative methods”) and grant number SFRH/BD/103291/2014 (Ph.D. scholarship); and within the framework of the projects of Centro de Investigação em Estudos da Criança [Research Centre for Child Studies] (CIEC), of the Universidade do Minho, under the references UIDB/00317/2020 and UIDP/00317/2020.

REFERENCES

Bardin, L. (2009). Análise de conteúdo. Edições 70.

Barreira, C., Bidarra, M. G., Vaz-Rebelo, P., Monteiro, F., & Alferes, V. (2015). Perceções dos professores e estudantes de quatro universidades portuguesas acerca do ensino e avaliação das aprendizagens. In D. Fernandes, A. Borralho, C. Barreira, A. Monteiro, D. Catani, E. Cunha, & P. Alves (Orgs.), Avaliação, ensino e aprendizagem em Portugal e no Brasil: Realidades e perspetivas (pp. 309-326). Educa.

Biggs, J. B. (2003). Teaching for quality learning at university. SRHE and Open University Press.

Black, P., & Wiliam, D. (1998). Assessment and classroom learning. Assessment in Education: Principles, Policy & Practice, 5(1), 7-74. https://doi.org/10.1080/0969595980050102

Black, P., & Wiliam, D. (2018). Classroom assessment and pedagogy. Assessment in Education: Principles, Policy & Practice, 25(6), 551-575. https://doi.org/10.1080/0969594X.2018.1441807

Bonniol, J., & Vial, M. (1997). Les modèles de l’évaluation. De Boeck & Larcier S.A.

Brew, C., Riley, P., & Walta, C. (2009). Education students and their teachers: Comparing views on participative assessment practices. Assessment & Evaluation in Higher Education, 34(6), 641-657. https://doi.org/10.1080/02602930802468567

Brown, S. (1999). Institutional strategies for assessment. In S. Brown, & A. Glasner (Eds.), Assessment matters in higher education: Choosing and using diverse approaches (pp. 3-13). SRHE & Open University Press.

Brown, S. (2005). Assessment for learning. Learning and Teaching in Higher Education, (1), 81-89. https://eprints.glos.ac.uk/id/eprint/3607

Brown, S., & Knight, P. (1994). Assessing learners in higher education. Kogan Page.

Carless, D., Salter, D., Yang, M., & Lam, J. (2011). Developing sustainable feedback practices. Studies in Higher Education, 36(4), 395-407. https://doi.org/10.1080/03075071003642449

Cho, J. Y., & Lee, E. (2014). Reducing confusion about grounded theory and qualitative content analysis: Similarities and differences. The Qualitative Report, 19(32), Article 64. https://doi.org/10.46743/2160-3715/2014.1028

Cohen, J. (1998). Statistical power analysis for the behavioral sciences (2nd ed.). Lawrence Erlbaum Associates.

David, F., & Abreu, R. (2017). Portuguese higher education system and Bologna Process implementation. In Edulearn17 Proceedings, 9th International Conference on Education and New Learning Technologies (pp. 4224-4231). IATED.

David, F., & Abreu, R. (2007). The Bologna Process: Implementation and developments in Portugal. Social Responsibility Journal, 3(2), 59-67. https://doi.org/10.1108/17471110710829731

Decreto-Lei n. 74, de 24 de março de 2006. (2006). Aprova o regime jurídico dos graus e diplomas do ensino superior. Diário da República, (60), série I-A, 2242-2257. https://dre.pt/dre/detalhe/decreto-lei/74-2006-671387

Decreto-Lei n. 107, de 25 de junho de 2008. (2008). Altera os Decretos-Leis n.os 74/2006, de 24 de Março, 316/76, de 29 de Abril, 42/2005, de 22 de Fevereiro, e 67/2005, de 15 de Março, promovendo o aprofundamento do Processo de Bolonha no ensino superior. Diário da República, (121), série I, 3835-853. https://dre.pt/dre/detalhe/decreto-lei/107-2008-456200

Earl, L., & Katz, S. (2006). Rethinking classroom assessment with purpose in mind. The Crown in Right of Manitoba. https://www.edu.gov.mb.ca/k12/assess/wncp/full_doc.pdf

Esteves, M. (2006). Análise de conteúdo. In L. Lima, & J. A. Pacheco (Orgs.), Fazer investigação: Contributos para a elaboração de dissertação e teses (pp. 105-126). Porto Editora.

European Commission. (2013). Report to the European Commission on improving the quality of teaching and learning in Europe’s higher education institutions. EU Publications.

European Commission. (2018). The EHEA in 2018: Bologna Process Implementation Report. Publications Office of the European Union. https://doi.org/10.2797/091435

European Students’ Union (ESU). (2015). Overview on student-centered learning in higher education in Europe: Research study. Peer Assessment of Student-Centred Learning.

Ezzy, D. (2002). Qualitative analysis: Practice and innovation. Allen & Unwin.

Fernandes, D. (2015). Pesquisa de percepções e práticas de avaliação no ensino universitário português. Estudos em Avaliação Educacional, 26(63), 596-629. https://doi.org/10.18222/eae.v26i63.3687

Fernandes, E. L. (2020). Conceptions and practices of assessment in higher education: A study of university teachers [Tese de doutorado, Universidade do Minho]. RepositóriUM. http://hdl.handle.net/1822/76411

Field, A. (2009). Discovering statistics using SPSS (3rd ed.). Sage Publications.

Flores, M. A., & Pereira, D. (2019). Capítulo I: Revisão da literatura. In M. A. Flores (Coord.), Avaliação no ensino superior: Conceções e práticas (pp. 23-48). De Facto Editores.

Flores, M. A., & Simão, A. M. (2007). Competências desenvolvidas no contexto do ensino superior: A perspetiva dos diplomados. In Atas das V Jornadas de Redes de Investigación en Docencia Universitaria. Universidad de Alicante.

Gibbs, G. (1999). Using assessment strategically to change the way students learn. In S. Brown, & A. Glasner (Eds.), Assessment matters in higher education: Choosing and using diverse approaches (pp. 41-53). SRHE & Open University Press.

Gonçalves, R. (2016). Conceções de avaliação em contexto de ensino clínico de enfermagem: Um estudo na Escola Superior de Enfermagem de Coimbra [Tese de doutorado, Universidade de Aveiro]. RIA. http://hdl.handle.net/10773/17179

Hadji, C. (2001). A avaliação desmistificada. Artmed.

Hair, J. F., Black, W. C., Babin, B. J., & Anderson, R. E. (2009). Multivariate data analysis (7th ed.). Pearson.

Hattie, J., & Timperley, H. (2007). The power of feedback. Review of Educational Research, 77(1), 81-112. https://doi.org/10.3102/003465430298487

Ion, G., & Cano, E. (2011). Assessment practices at Spanish universities: From a learning to a competencies approach. Evaluation & Research in Education, 24(3), 167-181. https://doi.org/10.1080/09500790.2011.610503

Justino, D., Machado, A., & Oliveira, T. (2017). Processo de Bolonha; EEES. Declaração da Sorbonne: Processo de Bolonha. Euroogle Dicionários. http://euroogle.com/dicionario.asp?definition=761&parent=1141

Light, G., & Cox, R. (2003). Learning & teaching in higher education: The reflective professional. Sage Publications.

McDowell, L., Wakelin, D., Montgomery, C., & King, S. (2011). Does assessment for learning make a difference? The development of a questionnaire to explore the student response. Assessment & Evaluation in Higher Education, 36(7), 749-765. https://doi.org/10.1080/02602938.2010.488792

Miles, M. B., & Huberman, A. M. (1994). Qualitative data analysis: An expanded source book (2nd ed.). Sage.

Myers, C. B., & Myers, S. M. (2015). The use of learner-centered assessment practices in the United States: The influence of individual and institutional contexts. Studies in Higher Education, 40(10), 1904-1918. https://doi.org/10.1080/03075079.2014.914164

Pereira, D. R., & Flores, M. A. (2016). Conceptions and practices of assessment in higher education: A study of Portuguese university teachers. Revista Iberoamericana de Evaluación Educativa, 9(1), 9-29. http://dx.doi.org/10.15366/riee2016.9.1.001

Pereira, D. R., Flores, M. A., & Barros, A. (2017). Perceptions of Portuguese undergraduate students about assessment: A study in five public universities. Educational Studies, 43(4), 442-463. http://dx.doi.org/10.1080/03055698.2017.1293505

Perrenoud, P. (1999). Avaliação: Da excelência à regulação das aprendizagens - Entre duas lógicas. Artmed.

Pires, A. R., Saraiva, M., Gonçalves, H., & Duarte, J. (2013). Bologna Paradigm in a Portuguese Polytechnic Institute - The case of the first cycle. In L. Gómez Chova, D. Martí Belenguer, & I. Candel Torres (Eds.), Proceedings of the 7th International Technology, Education and Development Conference (INTED2013 Conference) (pp. 4896-4903). IATED.

Reimann, N., & Wilson, A. (2012). Academic development in ‘assessment for learning’: The value of a concept and communities of assessment practice. International Journal for Academic Development, 17(1), 71-83. https://doi.org/10.1080/1360144X.2011.586460

Scott, S., Webber, C. F., Lupart, J. L., Aitken, N., & Scott, D. E. (2014). Fair and equitable assessment practices for all students. Assessment in Education: Principles, Policy & Practice, 21(1), 52-70. https://doi.org/10.1080/0969594X.2013.776943

Segers, M., & Dochy, F. (2001). New assessment forms in problem-based learning: The value-added of the students’ perspective. Studies in Higher Education, 26(3), 327-343. https://doi.org/10.1080/03075070120076291

Struyven, K., Dochy, F., & Janssens, S. (2005). Students’ perceptions about evaluation and assessment in higher education: A review. Assessment & Evaluation in Higher Education, 30(4), 331-347. https://doi.org/10.1080/02602930500099102

Veiga, A., & Amaral, A. (2008). Survey on the implementation of the Bologna process in Portugal. Higher Education, 57(1), 57-69. https://link.springer.com/article/10.1007/s10734-008-9132-6

Webber, K. L. (2012). The use of learner-centered assessment in US colleges and universities. Research in Higher Education, 53(2), 201-228. https://www.jstor.org/stable/41349005

Webber, K. L., & Tschepikow, K. (2013). The role of learner-centred assessment in postsecondary organisational change. Assessment in Education: Principles, Policy & Practice, 20(2), 187-204. https://doi.org/10.1080/0969594X.2012.717064

Zabalza, M. A. (2004). La enseñanza universitaria: El escenario y sus protagonistas (2a ed.). Narcea.

Zabalza, M. A. (2008). Competencias docentes del profesorado universitario: Calidad y desarrollo profesional. Narcea.

1 This article is derived from the work carried out as part of the first author’s doctoral thesis (Fernandes, 2020), under the supervision of Maria Assunção Flores (Universidade do Minho).

2Component 1, “Engagement and participation of students in assessment”, included 14 items: 20 - I provide guidance that helps students assess the learning of others; 14 - I provide guidance that helps students assess their own performance; 25 - I provide guidance that helps students assess their own learning; 16 - I help students identify their learning needs; 15 - I identify students’ strengths and advise them on how they should be strengthened; 7 - I give students the opportunity to decide on their own learning goals; 26 - Students are assisted in planning the next steps in their learning; 29 - I give students the opportunity to assess other students’ performance; 30 - I regularly discuss ways of promoting learning with students; 18 - Students are assisted in thinking about how they learn best; 17 - Students are encouraged to identify their mistakes as precious learning opportunities; 21 - Students’ mistakes are valued by providing evidence on their way of thinking; 19 - I use questioning to identify the explanations/justifications of students concerning their performance; and 11 - I provide students with information about their performance compared to their previous performance.

3Component 2, “Use of assessment by the faculty in the teaching and learning process”, included 10 items: 2 - I use information from assessments of my students’ learning to plan future activities; 1 - Assessing student learning provides me with useful information about students’ understanding of what has been taught; 8 - I use questioning to identify students’ knowledge; 12 - Students’ learning goals are discussed with them so that they can understand those goals; 28 - Learning assessment criteria are discussed with students so that they can understand them; 9 - I take into account the best practices that faculty can use to assess learning; 10 - My assessment of learning practices helps students learn independently; 22 - Students are assisted in understanding the learning goals of each activity or set of activities; 27 - Students’ efforts are important for assessing their learning; and 5 - The feedback that students receive helps them improve.

4Component 3, “Assessment as a process determined by external factors”, included 3 items: 24 - Students’ learning goals are mainly determined by the curriculum; 13 - Student performance assessment consists primarily in assigning grades; and 3 - The learning assessment practices that I use are determined more by curricular plans than by analyzing what students have been developing in the program or classes.

APPENDIX

TABLE A1 Measure “Assessment practices” - Items’ components and factor loadings

| ITEMS | Factor 1. Students’ engagement and participation in assessment | Factor 2. Use of assessment by faculty in the teaching and learning process | Factor 3. Assessment as a process determined by external factors |
|---|---|---|---|
| 20 - I provide guidance that helps students assess the learning of others. | 0.705 | | |
| 14 - I provide guidance that helps students assess their own performance. | 0.679 | | |
| 25 - I provide guidance that helps students assess their own learning. | 0.670 | | |
| 16 - I help students identify their learning needs. | 0.654 | | |
| 15 - I identify students’ strengths and advise them on how they should be strengthened. | 0.633 | | |
| 7 - I give students the opportunity to decide on their own learning goals. | 0.631 | | |
| 26 - Students are assisted in planning the next steps in their learning. | 0.616 | | |
| 29 - I give students the opportunity to assess other students’ performance. | 0.602 | | |
| 30 - I regularly discuss ways of promoting learning with students. | 0.571 | | |
| 18 - Students are assisted in thinking about how they learn best. | 0.567 | | |
| 17 - Students are encouraged to identify their mistakes as precious learning opportunities. | 0.559 | | |
| 21 - Students’ mistakes are valued by providing evidence on their way of thinking. | 0.554 | | |
| 19 - I use questioning to identify the explanations/justifications of students concerning their performance. | 0.541 | | |
| 11 - I provide students with information about their performance compared to their previous performance. | 0.499 | | |
| 2 - I use information from assessments of my students’ learning to plan future activities. | | 0.736 | |
| 1 - Assessing student learning provides me with useful information about students’ understanding of what has been taught. | | 0.675 | |
| 8 - I use questioning to identify students’ knowledge. | | 0.587 | |
| 12 - Students’ learning goals are discussed with them so that they can understand those goals. | | 0.582 | |
| 28 - Learning assessment criteria are discussed with students so that they can understand them. | | 0.559 | |
| 9 - I take into account the best practices that faculty can use to assess learning. | | 0.511 | |
| 10 - My assessment of learning practices helps students learn independently. | | 0.486 | |
| 22 - Students are assisted in understanding the learning goals of each activity or set of activities. | | 0.475 | |
| 27 - Students’ efforts are important for assessing their learning. | | 0.464 | |
| 5 - The feedback that students receive helps them improve. | | 0.457 | |
| 24 - Students’ learning goals are mainly determined by the curriculum. | | | 0.780 |
| 13 - Student performance assessment consists primarily in assigning grades. | | | 0.680 |
| 3 - The learning assessment practices that I use are determined more by curricular plans than by analyzing what students have been developing in the program or classes. | | | 0.645 |
| Eigenvalues | 8.46 | 2.34 | 1.78 |
| % variance | 31.32 | 8.66 | 6.58 |
| Cronbach’s alpha | 0.894 | 0.796 | 0.619 |

Source: Authors’ elaboration.

Note: Items 2 and 4 (“The assessment that I do focuses on what students know, understand and use of the curricular goals.”) have a very low factor loading (< 0.35); item 23 (“I help students identify their learning needs.”) is highly loaded simultaneously on components 1 and 2. These items were eliminated and the PCA was rerun. Kaiser-Meyer-Olkin = 0.87; Bartlett’s test of sphericity: χ²(435) = 1825.21, p < 0.001.
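As a quick arithmetic check on Table A1: in a PCA of standardized items, the percentage of variance explained by a component equals its eigenvalue divided by the number of items analyzed. The sketch below assumes that 27 items were retained (14 + 10 + 3, the item counts listed for the three components) and uses only the eigenvalues reported in the table. The variable names are illustrative and are not part of the original analysis.

```python
# Eigenvalues and % variance as reported in Table A1 (rounded in the source).
eigenvalues = [8.46, 2.34, 1.78]
reported_pct = [31.32, 8.66, 6.58]

# Assumption: 27 items retained in the final PCA (14 + 10 + 3 per component).
n_items = 14 + 10 + 3

# % variance explained = eigenvalue / number of items * 100.
pct_variance = [100 * ev / n_items for ev in eigenvalues]
cumulative_pct = sum(pct_variance)

for ev, pct, rep in zip(eigenvalues, pct_variance, reported_pct):
    print(f"eigenvalue {ev:.2f}: computed {pct:.2f}%, reported {rep:.2f}%")
print(f"cumulative variance explained (computed): {cumulative_pct:.2f}%")
```

Under this assumption the computed percentages agree with the reported ones to within rounding of the eigenvalues, and the three components together account for a little under half of the total variance.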

TABLE A2 Descriptive statistics for the assessment practices as a function of age, gender, years of experience, professional category, cycle of studies taught, and pedagogical training

| | 1. STUDENTS’ ENGAGEMENT AND PARTICIPATION IN ASSESSMENT | 2. USE OF ASSESSMENT BY FACULTY IN THE TEACHING AND LEARNING PROCESS | 3. ASSESSMENT AS A PROCESS DETERMINED BY EXTERNAL FACTORS |
|---|---|---|---|
| | M (SD) | M (SD) | M (SD) |
| Gender | | | |
| Male | 2.68 (0.52) | 3.09 (0.40) | 2.77 (0.57) |
| Female | 2.73 (0.48) | 3.18 (0.39) | 2.60 (0.61) |
| Age | | | |
| Under 45 years old | 2.70 (0.50) | 3.11 (0.36) | 2.73 (0.53) |
| Over 45 years old | 2.72 (0.49) | 3.16 (0.41) | 2.64 (0.61) |
| Professional category | | | |
| Full professor | 2.83 (0.60) | 3.17 (0.49) | 2.78 (0.75) |
| Associate/assistant professor with aggregation/qualification | 2.96 (0.47) | 3.19 (0.33) | 2.67 (0.60) |
| Associate/assistant professor | 2.66 (0.50) | 3.15 (0.40) | 2.65 (0.58) |
| Other | 2.73 (0.38) | 3.17 (0.49) | 2.79 (0.50) |
| Cycle of studies | | | |
| Undergraduate | | | |
| Yes | 2.72 (0.49) | 3.15 (0.40) | 2.64 (0.58) |
| No | 2.68 (0.50) | 3.07 (0.34) | 2.87 (0.52) |
| Integrated master’s | | | |
| Yes | 2.63 (0.47) | 3.07 (0.38) | 2.76 (0.59) |
| No | 2.75 (0.50) | 3.18 (0.39) | 2.63 (0.56) |
| Ph.D. | | | |
| Yes | 2.74 (0.49) | 3.18 (0.38) | 2.59 (0.59) |
| No | 2.66 (0.50) | 3.09 (0.40) | 2.78 (0.55) |
| Pedagogical training | | | |
| Yes | 2.76 (0.48) | 3.17 (0.35) | 2.67 (0.56) |
| No | 2.60 (0.50) | 3.09 (0.45) | 2.71 (0.60) |

Source: Authors’ elaboration.

Note: M (mean); SD (standard deviation).
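To give a rough sense of the size of the differences in Table A2, a standardized mean difference (Cohen’s d) can be computed from two reported means and standard deviations. The table does not report group sizes, so the sketch below assumes equal group sizes and pools the two standard deviations by averaging their variances. The function name and this simplification are illustrative assumptions, and the result is only an approximation, not a value reported by the authors.

```python
import math


def cohens_d_equal_n(mean_a: float, sd_a: float, mean_b: float, sd_b: float) -> float:
    """Cohen's d under the ASSUMPTION of equal group sizes.

    With equal n, the pooled SD is the square root of the mean of the two variances.
    """
    pooled_sd = math.sqrt((sd_a ** 2 + sd_b ** 2) / 2)
    return (mean_a - mean_b) / pooled_sd


# Component 1 (students' engagement and participation in assessment),
# pedagogical training: Yes = 2.76 (0.48), No = 2.60 (0.50), from Table A2.
d_training = cohens_d_equal_n(2.76, 0.48, 2.60, 0.50)
print(f"approximate Cohen's d (equal-n assumption): {d_training:.2f}")
```

If the two groups differ considerably in size, the pooled standard deviation should be weighted by each group’s degrees of freedom, and the value would change accordingly.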

Received: December 16, 2021; Accepted: July 03, 2023
