Revista Brasileira de Educação

Print version ISSN 1413-2478; online version ISSN 1809-449X

Rev. Bras. Educ. vol.24 Rio de Janeiro 2019 Epub 26-Feb-2019

https://doi.org/10.1590/s1413-24782019240002

Article

Quality indicators in education: discriminant analysis of the performances in Prova Brasil

I Instituto Federal do Espírito Santo, Viana, ES, Brazil.

II Universidade Federal do Espírito Santo, Vitória, ES, Brazil.

III Universidade Estadual de Maringá, Maringá, PR, Brazil.

Using discriminant analysis on a sample of 124 schools, the present study identifies the contextual variables that best differentiate the performance of students in the final grades of primary education at state schools in Espírito Santo in the 2013 Prova Brasil. The results showed that age-series distortion, the teacher regularity index and the dropout rate formed an optimal set of variables for discriminating between schools with “better” and “worse” performance. The technique contributes to the work of researchers and managers, who can use it to trace the profile (description), differentiation (inference) or classification (prediction) of schools. Moreover, researchers and managers can reorganize their actions and investments around priority contextual variables, with broad potential to change the performance of the schools in their regions in Prova Brasil.

KEYWORDS: educational policies; educational indicators; educational management; school performance; discriminant analysis


INTRODUCTION

From the second half of the twentieth century onwards, instruments for assessing educational quality, applied to schools by external institutions, began to be used on a global scale. Today they are part of the set of regulatory frameworks recommended by multilateral organizations and agencies and have been adopted by public administrations in several countries (Akkari, 2011). In Brazil, as in much of Latin America and the Caribbean (Beech, 2009), adherence to international evaluation models proceeded slowly through the 1980s and 1990s, until the creation of the Sistema Nacional de Avaliação da Educação Básica (SAEB). Over its twenty-seven years, SAEB has become not only the main instrument for assessing educational performance in Brazil but also a powerful analytical tool that guides public policy and sets the official parameters defining what “quality” means in primary education.

Beginning in 2005, with the creation of the nationwide exam Prova Brasil, and in 2007, with the formulation of the Índice de Desenvolvimento da Educação Básica (IDEB), SAEB was expanded and scaled to fit the international prerogatives of “competitiveness” and “efficiency” in school education. Since then, the Brazilian system has examined schools and students of public and private networks, in rural and urban areas, in the early and final grades of primary education and at the end of High School. Thus, in agreement with Coelho (2008, p. 231), “the evaluation is increasingly established as an element of the regulation and of the managerial and competitive management of the ‘Evaluator-State’ in Brazil”; that is, as an instrument that promotes increased interference in, and control over, education.

In this process, student performance has become synonymous with educational quality, and official rates have encouraged academic studies that seek to discover why certain schools achieve “better” results than others. The growing body of research on school effectiveness indicates that contextual, organizational, monitoring and pedagogical aspects contribute to student development, making certain schools more or less able to offer quality education equitably (Karino and Laros, 2017). A considerable portion of these studies employ quantitative approaches to analyse primary education data and to estimate the “school effect” produced by each institution or educational system, signalling to public education managers which contextual factors and school practices are relevant to the country's educational development.

Many of the discussions in the school effectiveness area, however, have been guided by the search for the set of variables whose variation best explains the variation in schools' and students' grades. Despite the contribution of these studies to understanding teaching conditions in the country, there is an analytical gap: many investigations in the area fail to analyse the characteristics that distinguish schools with different levels of performance.

In general, the predominant studies in the area apply regression models in order to:

(i) understand how each independent variable (e.g., complexity of school management) influences the dependent variable (e.g., grade obtained in Prova Brasil);

(ii) assess, at a given confidence level, whether the relationship between the independent and dependent variables can be attributed to chance; and

(iii) develop a model to predict the value of the dependent variable for observations outside the sample used to estimate the predictive equation.
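The three aims listed above can be illustrated with a minimal ordinary least squares sketch in Python, assuming NumPy and SciPy are available. The helper names `ols_fit` and `ols_predict` and the synthetic "management complexity" predictor are hypothetical; this is not the model of any study cited here, and work in the area often relies on hierarchical rather than single-level regression.

```python
import numpy as np
from scipy import stats


def ols_fit(X, y):
    """Ordinary least squares with an intercept (hypothetical helper).

    Returns the coefficients, their t-statistics and two-sided p-values.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    X1 = np.column_stack([np.ones(len(y)), X])      # prepend an intercept column
    beta, *_ = np.linalg.lstsq(X1, y, rcond=None)   # aim (i): effect of each variable
    resid = y - X1 @ beta
    dof = len(y) - X1.shape[1]
    sigma2 = resid @ resid / dof                    # residual variance
    se = np.sqrt(np.diag(sigma2 * np.linalg.inv(X1.T @ X1)))
    t = beta / se
    p = 2 * stats.t.sf(np.abs(t), dof)              # aim (ii): could the effect be chance?
    return beta, t, p


def ols_predict(beta, X_new):
    """Aim (iii): predict the dependent variable for out-of-sample observations."""
    X_new = np.asarray(X_new, dtype=float)
    return np.column_stack([np.ones(len(X_new)), X_new]) @ beta


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    complexity = rng.uniform(1, 6, 200)                     # synthetic predictor
    grade = 6 - 0.3 * complexity + rng.normal(0, 0.2, 200)  # synthetic grades
    beta, t, p = ols_fit(complexity, grade)
    print("slope:", beta[1], "p-value:", p[1])
    print("predicted grades:", ols_predict(beta, [2.0, 4.0]))
```

With synthetic data like this, the estimated slope should be close to the value used to generate the grades, with a p-value far below 0.05.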

However, when the dependent variable varies widely between observations, studies that rely on mean values for the entire data set provide inaccurate measures of effectiveness once the data set is divided into mutually exclusive and collectively exhaustive groups. It is therefore reasonable to investigate, using the contextual indicators usually employed in school evaluation, what distinguishes the schools belonging to groups with different performance.

Given this scenario, the present research aims to contribute to the academic debate on the official metrics adopted by the Brazilian State to evaluate primary education by offering an alternative way to interpret them: discriminant analysis. This technique is applied when the research objectives involve using information from independent variables to achieve the clearest possible separation (discrimination) between groups, in order to map the groups' profile (description), differentiation (inference) or classification (prediction). To this end, discriminant analysis seeks a linear combination of independent variables that, in Fisher's approach, produces “maximally different” discriminant scores for the groups or, in Mahalanobis' approach, finds the locus of points “equidistant” from the group means under a covariance-adjusted distance measure (Eisenbeis and Avery, 1972).
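For two groups sharing a common covariance matrix, the two approaches lead to the same decision rule. The sketch below assumes exactly that setting (two groups, pooled within-group covariance, equal priors) and uses hypothetical function names and synthetic data: Fisher's weights are `w = S_W^-1 (m1 - m0)`, where `S_W` is the pooled within-group covariance matrix and `m0`, `m1` are the group means, and a point is assigned to group 1 when its score exceeds the midpoint of the two mean scores.

```python
import numpy as np


def pooled_covariance(X0, X1):
    """Pooled within-group covariance matrix S_W of two groups (rows = objects)."""
    n0, n1 = len(X0), len(X1)
    S0 = np.atleast_2d(np.cov(X0, rowvar=False))
    S1 = np.atleast_2d(np.cov(X1, rowvar=False))
    return ((n0 - 1) * S0 + (n1 - 1) * S1) / (n0 + n1 - 2)


def fisher_weights(X0, X1):
    """Fisher's direction w = S_W^-1 (m1 - m0): the linear combination whose
    scores separate the group means most relative to within-group spread."""
    return np.linalg.solve(pooled_covariance(X0, X1),
                           X1.mean(axis=0) - X0.mean(axis=0))


def classify_fisher(x, X0, X1):
    """Group 1 if the score w.x lies above the midpoint of the two mean scores."""
    w = fisher_weights(X0, X1)
    cut = w @ (X0.mean(axis=0) + X1.mean(axis=0)) / 2
    return int(w @ x > cut)


def classify_mahalanobis(x, X0, X1):
    """Group whose mean is closer in squared Mahalanobis distance (pooled S_W)."""
    S_inv = np.linalg.inv(pooled_covariance(X0, X1))
    d0 = (x - X0.mean(axis=0)) @ S_inv @ (x - X0.mean(axis=0))
    d1 = (x - X1.mean(axis=0)) @ S_inv @ (x - X1.mean(axis=0))
    return int(d1 < d0)


if __name__ == "__main__":
    rng = np.random.default_rng(3)
    X0 = rng.normal(0.0, 1.0, size=(40, 3))   # synthetic "worst" group
    X1 = rng.normal(1.0, 1.0, size=(40, 3))   # synthetic "best" group
    points = rng.normal(0.5, 1.5, size=(100, 3))
    agree = sum(classify_fisher(x, X0, X1) == classify_mahalanobis(x, X0, X1)
                for x in points)
    print(f"{agree} of {len(points)} points classified identically")
```

Algebraically, the difference between the two squared Mahalanobis distances equals `2 (w·x - cut)`, so the locus of points equidistant from both group means is exactly Fisher's boundary `w·x = cut`.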

The research objective is to identify the variables that best distinguish schools with different performance and to develop discriminant functions that represent these differences, based on the performance criteria currently used in Prova Brasil. To this end, the text first describes how the network of studies on school effectiveness in Brazil was structured and presents some of its major contributions to the analysis of the factors that influence school performance. The following section outlines a historical overview of the construction and improvement of SAEB, the main mechanism for evaluating the quality of primary education in the country. After this contextualization of SAEB, the theoretical-methodological perspective used in data collection and analysis is presented. Lastly, an exploratory analysis is carried out of the contextual variables influencing the scores obtained in the Prova Brasil of 2013 by students in the final grades of primary education in the Espírito Santo state education network.

The intention is not simply to validate the variables currently used by SAEB but to develop alternative interpretations and uses of these indicators, with the aim of contributing to school/educational research and management. A discriminant analysis is thus proposed to indicate which of the factors officially considered relevant in defining the quality of education in the country are of particularly high priority in discriminating between “best” and “worst” performing schools, and thereby to deepen the understanding of how Espírito Santo schools are organized and to support strategic work in that State. This is an unusual analysis in the specialized literature, since most quantitative studies on the subject evaluate only the influence of variables on school performance. Rather than offering a regional view of the impact of a national exam, the analysis evaluates the contextual variables that discriminate schools with different performance in Prova Brasil, under the assumption that comparing groups that are internally homogeneous but different from one another may reveal unfamiliar relationships and provide insights for both educational management and research.

THE FORMATION OF THE STUDY NETWORK ON SCHOOL EFFECTIVENESS

The question of the contexts in which school education develops, and of their influence on human formation, is not new in educational debates. More than half a century has passed since the first studies seeking factors with a positive or negative impact on school performance appeared on the international scene. The Coleman Report, published in 1966, which analysed the causes of performance differences among North American schools (Coleman et al., 1966), is an academic landmark in the specialized literature. It generated enormous controversy in the academic community by pointing out that about 90% of the variation in school outcomes could be attributed to the socioeconomic conditions of students or their families, suggesting that, once extracurricular factors are controlled for, schools would have little influence on the quality of the education they offer. Since then, several discussions (at different levels of analysis) about possible school effects on academic performance have been carried out (e.g., Alves and Soares, 2013; Machado, Alavarse and Oliveira, 2015); in Brazil, these studies have mainly adopted Prova Brasil grades as proxies for school effectiveness (e.g., Palermo, Silva and Novellino, 2014).

In reaction to the Coleman Report's destabilization of the role of schooling, numerous other studies were produced in the following decades to show how schools, regardless of socio-cultural inequalities, could “make a difference” in students' trajectories and effectively contribute to social equity through formal education (e.g., Edmonds, 1979; Mortimore et al., 1988; Rutter et al., 1979). Academic networks of studies on school effectiveness thus began to take shape at the end of the 20th century, gathering diverse sets of quantitative and qualitative research that analysed the effects of educational institutions on the development of key capabilities and skills at different stages of schooling and suggested ways to make pedagogical work more effective (Carvallo-Pontón, 2010).

A theoretical model for school analysis that would become a central reference for subsequent research in the area was proposed by Scheerens (1990), drawing on the studies on school effectiveness of the 1970s and 1980s. The author integrated curricular and extracurricular factors into a single perspective, characterized by inputs, teaching processes and outputs. Scheerens thus sought to demonstrate the deep articulation between aspects of school life over which schools do not have full control (such as location, size, resources, public policies, and the socioeconomic and cultural characteristics of the community) and those over which they can exert strong influence (such as the management of “inputs” to educational processes, school practices and/or the “teaching process”, and the evaluation of training “outputs”).

The popularization of Scheerens' model soon influenced empirical research on student/school performance in systemic evaluations of education in various national contexts, structuring a strong international network of studies on school effectiveness. In Brazil, these studies became an organized field of research only at the beginning of the 21st century, when SAEB was established as the main source of information about education in the country and its results could be widely analysed (Soares, 2007). Along the way, a network of investigations was built to estimate the school effect and identify the factors that contribute to the quality of education (Ferrão and Couto, 2013).

Analysing the intellectual production of this scientific network in Brazil between 2000 and 2013, Karino and Laros (2017) observed a predominance of empirical analyses over theoretical reflection. Accordingly, few studies examine how SAEB indicators are analysed: instead of proposing models or categories for evaluating the data, they systematize information through established intellectual production (e.g., Bonamino et al., 2010; Ferrão and Fernandes, 2003; Franco and Bonamino, 2005; Koslinski and Alves, 2012; Soares, 2007). Indeed, since the 1980s there has been growing criticism of the mismatch between academic investigations on school effectiveness and credible theoretical discussions in other areas of the educational field (Van den Eeden, Hox and Hauer, 1990).

Nowadays, the results of the biennial tests carried out by SAEB are systematized, compared and evaluated in a massive body of publications, despite the controversy over how much the area has learned from itself and reflected on the theoretical perspectives underpinning its own analytical formulations (e.g., Andrade and Laros, 2007; Franco et al., 2007; Laros, Marciano and Andrade, 2012; Rodrigues, Rios-Neto and Pinto, 2011). This research generally uses regression models to understand the heterogeneity of the factors influencing school performance between and within groups of schools (Laros and Marciano, 2008). In addition, hierarchical (multilevel) models are used to control for the impact of socioeconomic inequalities on students' cognitive performance (Ferrão and Fernandes, 2003; Fletcher, 1998).

After almost two decades of intense intellectual production, it is possible to identify general fields of interest among the studies on school effectiveness. On the one hand, there are investigations that analyse the effect of schools on student learning in primary education through descriptive (e.g., Stocco and Almeida, 2011) and longitudinal (e.g., Ferrão and Couto, 2013) designs, focusing on the value added by educational establishments. Another field of interest focuses on understanding inequalities in access to educational resources and in performance. In this case, studies examine external evaluation results at the intersections of gender (e.g., Soares and Alves, 2003), color (e.g., Andrade, Franco and Carvalho, 2003) and class (e.g., Soares and Andrade, 2006), showing to what extent improvements in school conditions do not necessarily coincide with the promotion of equity.

A third area of interest, concerned with the school policies and practices that explain the high educational performance of certain schools, should also be emphasized. It is composed in part of qualitative studies focusing on specific schools, which aim to analyse in detail why particular schools achieve higher indices than the average of other schools (e.g., Silva, Bonamino and Ribeiro, 2012; Teixeira, 2009). In these studies, family-related socioeconomic factors are isolated, and the school/curricular aspects that influence teaching-learning relationships and student performance are analysed. To this end, several research techniques are mobilized: observation of pedagogical practices, interviews with school subjects, documentary/photographic records of material and/or relational aspects of educational life, and the incorporation of data and statistical variables to enable the spatial comparison of case studies with broader scenarios of teaching conditions in the country.

On the other hand, this third area also comprises quantitative investigations that mainly use regression techniques to analyse school/student context, management, monitoring and performance, contributing to improving school/student performance in official exams and parameters (e.g., Albanez, Ferreira and Franco, 2002; Américo and Lacruz, 2017; Barbosa and Fernandes, 2000; Bonamino et al., 2010; Ferrão et al., 2001; Nascimento, 2007; Soares, 2005). As for the extracurricular variables influencing school performance (Scheerens, 1990), the socioeconomic level of the school and family schooling are positively correlated with student performance in external evaluations (e.g., Bonamino et al., 2010). Color and gender inequalities are also confirmed (e.g., Ferrão et al., 2001), although some studies report that girls perform better in Portuguese (e.g., Soares, 2005) and boys in mathematics (e.g., Albanez, Ferreira and Franco, 2002), suggesting that the racialization and gendering of school performance can also be interpreted in terms of symbolic inequalities between areas or disciplines. These variables, however, are usually aggregated into a single factor called “socioeconomic level”, which makes it difficult to analyse their separate effects on primary education.

Curricular variables that affect the school effect, in turn, tend to diversify into multiple categories. However, school delay or dropout, usually represented by the age-series distortion rate, approval rate and dropout rate, appears in virtually every study in the area as a variable with a negative impact on school performance. Other significant variables for analysing effectiveness relate to school management, infrastructure and the state of conservation of school equipment (e.g., Albanez, Ferreira and Franco, 2002), usually combined in the “indicator of school management complexity”. Teachers' working conditions, professional profiles and pedagogical practices are rarely used in studies in the area as factors affecting school performance, although new research is beginning to show that they do have an impact (e.g., Américo and Lacruz, 2017).

In dialogue with the specialized literature on school effectiveness, this study analyses the results obtained by Espírito Santo schools in the Prova Brasil of 2013, one of the main instruments used by SAEB to evaluate primary education in the country. To do so, however, it uses a statistical method that is an alternative to those usually employed by researchers in the area: discriminant analysis. The intention is not to refute the results of other investigations, but to estimate which variables affecting school performance can be prioritized to rank schools by performance and thus to contribute to improving the analyses carried out. Before presenting this methodology, a historical overview of the construction and improvement of SAEB is given.

THE RECENT TRAJECTORY OF LARGE-SCALE NATIONAL EVALUATIONS IN BRAZIL

As explained by Pestana (1992), SAEB was part of Brazil's restructuring and redemocratization process, in which greater transparency was given to the actions and results of public institutions. In this spirit, SAEB was designed to evaluate educational systems at two complementary levels: productivity and efficiency, on the one hand, and working conditions and school infrastructure, on the other. At the start of the project, the aim was to correlate issues related to educational management, teaching competence, costs and student achievement, as well as the management of educational systems, presenting a sample database capable of offering a diagnosis of the quality of primary education in the country.

In 1995, methodological changes were introduced to expand this database and standardize the results obtained at the national level: private schools were included in the sample; all 26 States and the Federal District participated; questionnaires on students' socio-cultural characteristics and study habits were used; Portuguese language and mathematics were prioritized; the 3rd year of high school was added to the 5th and 9th grades of primary education; and Item Response Theory (IRT; TRI in Portuguese) was adopted, which made it possible to produce a unified scale to measure, monitor and compare the performance of regions or localities. These changes follow the metrics of the Programme for International Student Assessment (PISA) and shaped SAEB as it is known today.
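To make the idea of a common scale more concrete: in IRT, each student's proficiency θ and each item's parameters are placed on the same latent scale, and the probability of a correct answer is modelled as a function of θ. The text does not state which IRT model SAEB uses; the sketch below assumes the three-parameter logistic (3PL) model, with discrimination `a`, difficulty `b` and pseudo-guessing `c`, and the item parameters in the usage lines are hypothetical.

```python
import math


def prob_correct_3pl(theta, a, b, c, D=1.7):
    """Three-parameter logistic item characteristic curve (assumed model).

    theta: examinee proficiency on the latent scale
    a: item discrimination, b: item difficulty, c: pseudo-guessing level
    D: scaling constant (1.7 approximates the normal ogive)
    """
    return c + (1.0 - c) / (1.0 + math.exp(-D * a * (theta - b)))


if __name__ == "__main__":
    # Hypothetical easy (b = -1) and hard (b = 1) items, evaluated at three proficiencies
    for b in (-1.0, 1.0):
        print(b, [round(prob_correct_3pl(t, a=1.0, b=b, c=0.2), 2) for t in (-2, 0, 2)])
```

Because items and students share the θ scale, students who answered different sets of items can still be compared once the items have been calibrated on that scale.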

In this course, SAEB converted the cost-per-student discourse that dominated quantitative research in the 1980s into more or less explicit notions of competitiveness, performance, efficiency and productivity of pedagogical action. The adoption of biennial cognitive tests has characterized this way of qualifying basic education. The tests are prepared by reading and mathematics specialists through a synthesis of the elements common to the different Brazilian curricular matrices and to the main textbooks in each area. Over the last decades, they have constituted an official parameter of what should be considered fundamental learning at the end of each schooling cycle.

SAEB enables school networks, public policy planning and decision-making to be monitored. From the point of view of certain public administration sectors, however, SAEB's diagnostic analyses created a political and managerial problem, since its results still represented a tool with little capacity to intervene in school life (Zaponi and Valence, 2009). This generated the perception that forms of evaluation reporting results at a more particular level (e.g., per school) could be an effective strategy for identifying those responsible for school success or failure (Bonamino and Souza, 2012). In parallel with the development of SAEB, several regional evaluation systems were created; in Espírito Santo, the Programa de Avaliação da Educação Básica do Espírito Santo (PAEBES) became the regional parameter.

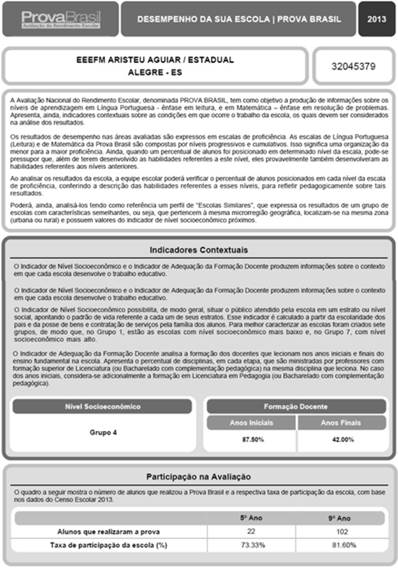

In this context, ministerial ordinance n. 931 of March 21st, 2005, restructured SAEB, which then also included Prova Brasil. In 2009, this new instrument was adjusted to become a census evaluation of primary education covering urban/rural schools and students in public and private educational networks (with more than 20 students enrolled) throughout the country. The results of this biennial test are published for each school, by name, together with indicators on school context variables. The data are contextualized on the basis of two criteria, “adequacy of teacher education” and “socioeconomic level of students”, without explaining to what extent school performance is affected by these variables. Figure 1 shows an example of how these criteria are published by the Instituto Nacional de Estudos e Pesquisas Educacionais Anísio Teixeira (INEP).

Source: INEP. Performance per school in the Prova Brasil of 2013 (2015).

Figure 1 - Presentation format of performance by school in Prova Brasil.

Although Prova Brasil results aggregate different indicators to characterize what is usually called the “school context”, translating its data to facilitate access and communication with non-academic audiences, the test scores do not explain to what extent these indicators contribute to student outcomes. Estimating which educational indicators maximize the discrimination between successful and unsuccessful performance may therefore deepen the analysis and distinguish, within a set of variables, the factors that affect school routine. In other words, understanding the contextual factors that best discriminate school performance enriches the current debate on the efficacy and quality of primary education, supporting educational actions and policies tailored to each context.

RESEARCH TRACKS

The purpose of the investigation is to interpret, through discriminant analysis, the contextual variables that best differentiate the performance of students in the final grades of primary education in the Espírito Santo state education network in the Prova Brasil of 2013. Although unexplored in the academic literature on school effectiveness, discriminant analysis is not a recent technique. The first solution to the problem of discriminating among populations is attributed to Fisher, in a study on the classification of plant species (Fisher, 1936). The technique has also been employed in the educational field; for instance, Ferreira and Hill (2007) used discriminant analysis to identify the cultural patterns that “better” discriminate higher education institutions.

From the results of this research, it was possible to identify not only the criteria mobilized by the external evaluation system to measure what is called quality in Brazilian primary education, but also which of these variables effectively affect student performance and serve as criteria for distinguishing and ranking different levels of school performance.

The population investigated in this research comprises 497 urban and rural state schools in the 78 municipalities of Espírito Santo. Data were collected from the INEP website between September and December 2015 and refer to the year 2013. The public consultation of Prova Brasil scores followed the criteria established by INEP and was limited to schools with at least 20 students enrolled in the assessed grades. The consultation was done manually, using the school codes of the Espírito Santo state Department of Education. To estimate the factors affecting student/school performance, INEP indicators of the quantity/quality of education were used to describe the context in which each of these schools carried out its educational work.

Table 1 gives the variables operational definitions.

Table 1 - Description of model variables

| Type | Description | Abbreviation | Scale |
|---|---|---|---|
| Dependent | Grades of the Prova Brasil of 2013 | GBP | Nominal |
| Independent | Students per class | SPC | Ratio |
| Independent | Class hours per day | CHD | Ratio |
| Independent | Age-series distortion rate | ASD | Ratio |
| Independent | Approval rate | APR | Ratio |
| Independent | Dropout rate | SDR | Ratio |
| Independent | Index of teacher regularity | ITR | Interval (0 - 5) |
| Independent | Index of teacher effort | ITE | Interval (1 - 5) |
| Independent | Indicator of school management complexity | SMC | Interval (1 - 6) |
| Independent | Socioeconomic level of students | SEL | Ratio |
| Independent | Adequacy of teacher education | ATE | Interval (1 - 5) |

Source: Research database. Authors’ elaboration.

The data collected from the INEP website revealed that only 244 schools (49%), located in 70 of the 78 municipalities, had data for all the variables considered in this study (Table 1). No missing-value treatment was applied, since the sample size was large enough for the statistical technique employed. The schools were then categorized by performance, using the lower and upper quartiles as cut-off points: worst performing schools (grade ≤ Q1) and best performing schools (grade ≥ Q3). Effectiveness is therefore defined relative to the results obtained by the schools analysed. An ANOVA test verified whether the “best” and “worst” performing groups in the Prova Brasil of 2013 differed statistically.
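The quartile rule described above can be sketched as follows, assuming a one-dimensional array of school grades and NumPy. The function name is hypothetical, and quartile definitions differ between packages (NumPy's default linear interpolation is not necessarily the definition SPSS applies), so schools lying exactly at the borders could be allocated differently.

```python
import numpy as np


def quartile_groups(grades):
    """Indices of 'worst' (grade <= Q1) and 'best' (grade >= Q3) schools.

    Schools strictly between the two quartiles are left out of the analysis.
    Uses NumPy's default (linear interpolation) quartiles.
    """
    grades = np.asarray(grades, dtype=float)
    q1, q3 = np.percentile(grades, [25, 75])
    worst = np.flatnonzero(grades <= q1)
    best = np.flatnonzero(grades >= q3)
    return worst, best, q1, q3


if __name__ == "__main__":
    grades = [4.2, 5.9, 4.8, 3.9, 5.1, 6.1, 4.4, 5.4]   # hypothetical grades
    worst, best, q1, q3 = quartile_groups(grades)
    print("Q1 =", q1, "Q3 =", q3, "worst:", worst, "best:", best)
```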

The grades in Prova Brasil were thus taken as the study's dependent variable. Each group comprised 62 schools, for a total sample of 124 observations. To validate the discriminant analysis, the sample was randomly divided into two subsamples: one to estimate the discriminant function (estimation sample = 74 observations) and one for validation (test sample = 50 observations). According to the criteria suggested by Hair et al. (2009), both subsamples meet the minimum considered adequate for overall sample size (at least 5 observations per independent variable considered in the analysis, even if the variable does not enter the discriminant function) and for size per group (at least 20 observations per group).
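The sample-size rules above can be checked mechanically. The sketch below (hypothetical helper names, assuming NumPy) draws a random 74/50 split of the 124 schools and tests the two rules of thumb. Table 1 lists nine independent variables, so the first rule requires at least 9 × 5 = 45 observations, which both subsamples satisfy; how many schools of each group land in each subsample depends on the random draw, and the text does not say whether the split was stratified by group.

```python
import numpy as np


def split_sample(n_obs, n_estimation, seed=None):
    """Randomly split observation indices into an estimation sample
    and a hold-out (test) sample."""
    idx = np.random.default_rng(seed).permutation(n_obs)
    return idx[:n_estimation], idx[n_estimation:]


def meets_minimums(n_sample, n_independent, group_sizes,
                   per_variable=5, per_group=20):
    """Rules of thumb attributed in the text to Hair et al. (2009):
    at least 5 observations per independent variable and 20 per group."""
    return bool(n_sample >= per_variable * n_independent
                and min(group_sizes) >= per_group)


if __name__ == "__main__":
    labels = np.array([0] * 62 + [1] * 62)   # 62 worst + 62 best schools
    est, test = split_sample(len(labels), 74, seed=1)
    for name, idx in (("estimation", est), ("test", test)):
        sizes = np.bincount(labels[idx], minlength=2)
        print(name, len(idx), sizes, meets_minimums(len(idx), 9, sizes))
```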

Discriminant analysis was used to investigate the contextual variables that best discriminate the “best” and “worst” performing schools in Prova Brasil 2013. It allowed the identification of the variables most relevant to explaining the differences between groups that are internally homogeneous but heterogeneous with respect to one another. According to Hair et al. (2009), the aim is to obtain a function, a linear combination of two or more independent variables, that maximizes the discrimination between the groups defined a priori by the categories of the dependent variable (Equation 1).

$$Z_{jk} = \alpha + W_1 X_{1k} + W_2 X_{2k} + \dots + W_n X_{nk} \qquad (1)$$

Where:

$Z_{jk}$ = discriminant Z score of discriminant function $j$ for object $k$;

$\alpha$ = intercept;

$W_i$ = discriminant weight for independent variable $i$;

$X_{ik}$ = independent variable $i$ for object $k$;

$i$ = index of the independent variables, $i = 1, \dots, n$, where $n$ is the number of independent variables.
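Once weights are estimated, Equation 1 (Z_k = α + Σ_i W_i X_ik) turns each school's indicators into a discriminant score. The sketch below, with hypothetical names and made-up weights, computes these scores, places a cutting score halfway between the two group centroids (the rule Hair et al. describe for groups of equal size), and computes the hit ratio, i.e., the share of correctly classified schools, as one would on the 50-observation test sample. The text does not report its weights or cutting score, so nothing here reproduces the study's results.

```python
import numpy as np


def discriminant_scores(X, alpha, W):
    """Equation 1: Z_k = alpha + sum_i W_i * X_ik for every object k (row of X)."""
    return alpha + np.asarray(X, dtype=float) @ np.asarray(W, dtype=float)


def cutting_score(z_a, z_b):
    """Cutting score for two groups of equal size: midpoint of the centroids."""
    return (np.mean(z_a) + np.mean(z_b)) / 2


def hit_ratio(z, labels, cut):
    """Share of objects classified correctly when Z > cut means group 1 and
    Z <= cut means group 0 (labels coded 0/1; group 1 has the higher centroid)."""
    predicted = (np.asarray(z) > cut).astype(int)
    return float(np.mean(predicted == np.asarray(labels)))


if __name__ == "__main__":
    W = [1.0, -1.0]                        # made-up weights for two variables
    X = [[3, 0], [2, 1], [0, 2], [1, 3]]   # made-up indicator values
    labels = np.array([1, 1, 0, 0])
    z = discriminant_scores(X, 0.0, W)
    cut = cutting_score(z[labels == 1], z[labels == 0])
    print(z, cut, hit_ratio(z, labels, cut))
```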

The stepwise method was used to determine the discriminant function and to identify the variables with the greatest discriminating power and parsimony. Following a suggestion by Hair et al. (2009) for stepwise estimation procedures, the criterion for evaluating the statistical significance of the overall model was the Mahalanobis D² measure.
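A simplified version of Mahalanobis-based stepwise selection can be sketched as follows (assuming NumPy; the function names and the `min_gain` threshold are hypothetical). At each step it adds the variable that most increases D² between the two group centroids, computed with the pooled within-group covariance matrix. SPSS instead applies F-based entry and removal criteria and can drop variables at later steps, so this greedy loop only illustrates the idea and will not necessarily reproduce the software's selection.

```python
import numpy as np


def pooled_cov(A, B):
    """Pooled within-group covariance of two groups (always returned as 2-D)."""
    S_a = np.atleast_2d(np.cov(A, rowvar=False))
    S_b = np.atleast_2d(np.cov(B, rowvar=False))
    return ((len(A) - 1) * S_a + (len(B) - 1) * S_b) / (len(A) + len(B) - 2)


def mahalanobis_d2(X0, X1, cols):
    """Mahalanobis D^2 between the two group centroids using only `cols`."""
    A, B = X0[:, cols], X1[:, cols]
    diff = B.mean(axis=0) - A.mean(axis=0)
    return float(diff @ np.linalg.solve(pooled_cov(A, B), diff))


def forward_stepwise_d2(X0, X1, min_gain=0.1):
    """Greedy forward selection: at each step add the variable giving the
    largest increase in D^2; stop when no variable adds at least `min_gain`.
    (Simplified: no F-test and no removal step.)"""
    p = X0.shape[1]
    selected, d2 = [], 0.0
    while len(selected) < p:
        candidates = [j for j in range(p) if j not in selected]
        gains = {j: mahalanobis_d2(X0, X1, selected + [j]) - d2 for j in candidates}
        best = max(gains, key=gains.get)
        if gains[best] < min_gain:
            break
        selected.append(best)
        d2 += gains[best]
    return selected, d2


if __name__ == "__main__":
    rng = np.random.default_rng(42)
    X0 = rng.normal(size=(60, 3))   # synthetic group 0
    X1 = rng.normal(size=(60, 3))   # synthetic group 1
    X1[:, 1] += 3.0                 # only variable 1 separates the groups
    print(forward_stepwise_d2(X0, X1))
```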

The assumptions of discriminant analysis were verified: multivariate normality of the explanatory variables (Mardia's test), linearity of the relationship between dependent and independent variables (residual plots), absence of multicollinearity among the independent variables (tolerance), homogeneity of the variance-covariance matrices (Box's M) and absence of outliers (Cook's distance). SPSS 20 software was used for data processing.
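Two of these checks can be sketched directly (assuming NumPy and SciPy; the function names are hypothetical): tolerance, computed as 1 - R² from regressing each independent variable on the others, and Box's M with its usual chi-square approximation. Mardia's test, residual plots and Cook's distance are not shown. Cut-offs vary by author; tolerance values close to 0 (often-quoted thresholds are around 0.10) point to multicollinearity, and a small Box's M p-value points to unequal covariance matrices.

```python
import numpy as np
from scipy import stats


def tolerance(X):
    """Tolerance of each column: 1 - R^2 from regressing it on the other columns."""
    X = np.asarray(X, dtype=float)
    n, p = X.shape
    tol = np.empty(p)
    for j in range(p):
        y = X[:, j]
        others = np.column_stack([np.ones(n), np.delete(X, j, axis=1)])
        beta, *_ = np.linalg.lstsq(others, y, rcond=None)
        resid = y - others @ beta
        r2 = 1 - resid @ resid / np.sum((y - y.mean()) ** 2)
        tol[j] = 1 - r2
    return tol


def box_m(groups):
    """Box's M test for equal covariance matrices (chi-square approximation).

    `groups` is a list of (n_i x p) arrays. Returns (M, chi2, df, p_value).
    """
    g = len(groups)
    p = groups[0].shape[1]
    ns = np.array([len(G) for G in groups])
    N = ns.sum()
    covs = [np.atleast_2d(np.cov(G, rowvar=False)) for G in groups]
    S_pooled = sum((n - 1) * S for n, S in zip(ns, covs)) / (N - g)
    # slogdet returns (sign, log|det|); covariance matrices are positive definite
    M = (N - g) * np.linalg.slogdet(S_pooled)[1] - sum(
        (n - 1) * np.linalg.slogdet(S)[1] for n, S in zip(ns, covs))
    c = ((np.sum(1.0 / (ns - 1)) - 1.0 / (N - g))
         * (2 * p**2 + 3 * p - 1) / (6 * (p + 1) * (g - 1)))
    chi2 = M * (1 - c)
    df = p * (p + 1) * (g - 1) / 2
    return M, chi2, df, stats.chi2.sf(chi2, df)
```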

SCHOOLS’ CONTEXTS AND PERFORMANCE IN PROVA BRASIL

Before the discriminant analysis was carried out, an ANOVA showed that the hypothesis of equal Prova Brasil performance between the two groups of schools could be rejected (p-value < 0.05). This finding supported the use of discriminant analysis to differentiate the groups of schools with the best and worst scores in the 2013 assessment. The ANOVA assumptions were met: the hypotheses of data normality (Kolmogorov-Smirnov test; p-value ≥ 0.05) and of homogeneity of variances (Levene test; p-value ≥ 0.05) were not rejected.
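Two of these preliminary checks can be reproduced with the standard library alone. The sketch below computes the one-way ANOVA F statistic and Levene’s statistic (an ANOVA on absolute deviations from each group mean). The scores are hypothetical, and p-values would additionally require the F distribution (for instance `scipy.stats.f.sf`). With two groups, the ANOVA F equals the square of the pooled-variance t statistic.

```python
from statistics import mean


def one_way_anova_f(*groups):
    """One-way ANOVA: return (F, df_between, df_within)."""
    all_obs = [x for g in groups for x in g]
    grand = mean(all_obs)
    k, n = len(groups), len(all_obs)
    ss_between = sum(len(g) * (mean(g) - grand) ** 2 for g in groups)
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    return (ss_between / (k - 1)) / (ss_within / (n - k)), k - 1, n - k


def levene_w(*groups):
    """Levene's test statistic: ANOVA F on |x - group mean|."""
    return one_way_anova_f(*[[abs(x - mean(g)) for x in g] for g in groups])


# Hypothetical school scores on the 0-10 Prova Brasil scale.
worst = [3.9, 4.2, 4.4, 4.0, 4.3]
best = [5.5, 5.8, 5.6, 6.0, 5.7]
print(one_way_anova_f(worst, best))
print(levene_w(worst, best))
```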

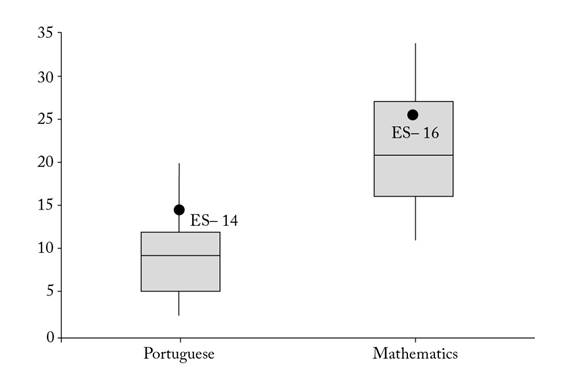

On the 0 to 10 scale (Table 1), the scores of the worst performing schools ranged from 3.39 to 4.51, with a mean of 4.15 and a coefficient of variation of 7%. The scores of the best performing schools ranged from 5.34 to 6.66, with a mean of 5.70 and a coefficient of variation of 5%. In the 2013 Prova Brasil, the average performance of the Espírito Santo state network in the final grades of primary education was above the average of the other Brazilian state networks at the same level: its schools ranked 4th in mathematics and 8th in Portuguese among the 26 states and the Federal District.¹ Figure 2 places this performance among the other states in a boxplot that highlights Espírito Santo.

Source: Research database. Authors’ elaboration.

Figure 2 - Percentage of students proficient in Prova Brasil.

From this perspective, the Espírito Santo network offers an interesting case for interpreting state education systems. On the one hand, its geographical delimitation, with only 497 state schools, makes it possible to build a manageable and representative sample of a regional context. On the other hand, its relative alignment with the Ministério da Educação (MEC) guidelines for evaluating school performance makes it a pertinent setting for analysing the differential impact of INEP variables on school evaluation.

To avoid problems in estimating the discriminant function, the assumptions of discriminant analysis were checked: multivariate normality of the explanatory variables (Mardia’s test; p-value ≥ 0.05), linearity of the relationship between dependent and independent variables (plot of standardized residuals against standardized predicted values), homogeneity of the variance-covariance matrices (Box’s M; p-value ≥ 0.05) and absence of outliers (Cook’s distance < 1). The absence of multicollinearity among the independent variables was verified after the variables had been selected for the discriminant model, as shown below.
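Box’s M, used to test the homogeneity of the variance-covariance matrices, can be sketched with NumPy using the usual chi-square approximation. This is an illustrative implementation under that assumption: SPSS reports an F approximation instead, and the p-value step (for example `scipy.stats.chi2.sf`) is left out to keep the sketch light on dependencies. The data in the usage lines are synthetic.

```python
import numpy as np


def _logdet(S):
    """Natural log of the determinant of a positive-definite matrix."""
    _sign, logabsdet = np.linalg.slogdet(S)
    return logabsdet


def box_m(*groups):
    """Box's M test of equal covariance matrices: return (M, chi2, df)."""
    mats = [np.asarray(g, float) for g in groups]
    k, p = len(mats), mats[0].shape[1]
    ns = np.array([len(m) for m in mats])
    N = ns.sum()
    covs = [np.atleast_2d(np.cov(m, rowvar=False)) for m in mats]
    pooled = sum((n - 1) * S for n, S in zip(ns, covs)) / (N - k)
    M = (N - k) * _logdet(pooled) - sum((n - 1) * _logdet(S) for n, S in zip(ns, covs))
    # Box's small-sample correction factor for the chi-square approximation.
    c1 = ((np.sum(1.0 / (ns - 1)) - 1.0 / (N - k))
          * (2 * p**2 + 3 * p - 1) / (6.0 * (p + 1) * (k - 1)))
    df = p * (p + 1) * (k - 1) // 2
    return float(M), float(M * (1 - c1)), df


# Synthetic groups with clearly different spreads.
rng = np.random.default_rng(2)
A = rng.normal(size=(30, 3))
B = rng.normal(size=(30, 3)) * np.array([1.0, 4.0, 0.25])
print(box_m(A, B))
```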

By testing the statistical significance (p-value < 0.05) of the differences between the two groups’ means on each independent variable, four variables were identified as promising candidates for the discriminant analysis:

age-series distortion rate;

index of teacher regularity;

student’s dropout rate; and,

approval rate.

The considerable reduction from 10 to 4 candidate variables reinforced the decision to use the stepwise estimation procedure.

As indicated by Wilks’ lambdas (which range from 0 to 1, with values near 1 indicating nearly equal group means), the worst and best performing schools do not differ significantly, on average, on the other variables, especially class hours per day and students’ socioeconomic level (Λ > 0.99). Hence, factors traditionally emphasized in the specialized literature were not decisive in making Espírito Santo’s schools better or worse in 2013. This does not mean that these factors had no impact on Prova Brasil scores; from the standpoint of the criteria used by SAEB, the results were affected by all of these variables. The analysis only shows that students’ socioeconomic level, class hours per day, students per class, adequacy of teacher education, the teacher effort indicator and the school management complexity indicator did not differentiate the performance of the best and worst performing groups. In this case, the variables identified as priorities in the official definition of quality of education in Espírito Santo relate to four main factors: approval rate, student dropout rate, age-series distortion and the index of teacher regularity.
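For a single variable, Wilks’ lambda is the ratio of the within-group sum of squares to the total sum of squares, so a value close to 1 means that the group means barely differ. The standard-library sketch below also converts Λ into the equivalent ANOVA F. The class-hour values are hypothetical.

```python
from statistics import mean


def univariate_wilks_lambda(*groups):
    """Within-group sum of squares divided by total sum of squares for one variable."""
    all_obs = [x for g in groups for x in g]
    grand = mean(all_obs)
    ss_total = sum((x - grand) ** 2 for x in all_obs)
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    return ss_within / ss_total


def lambda_to_f(lam, n_obs, n_groups):
    """Equivalent F statistic with df = (n_groups - 1, n_obs - n_groups)."""
    return (1 - lam) / lam * (n_obs - n_groups) / (n_groups - 1)


# Hypothetical class hours per day in the two groups: very similar means.
worst_hours = [4.5, 4.6, 4.4, 4.5, 4.7, 4.3]
best_hours = [4.5, 4.4, 4.6, 4.5, 4.4, 4.7]
lam = univariate_wilks_lambda(worst_hours, best_hours)
print(round(lam, 3), round(lambda_to_f(lam, 12, 2), 3))
```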

Applying the stepwise method (Table 2), however, shows that only three of these four candidate variables effectively contributed to discriminating the groups defined by Prova Brasil scores.

Table 2 - Discriminant analyses: stepwise method.

| Step | Variable entered a | Minimum D² (statistic) | Exact F (statistic) | df1 | df2 | Sig. |
|---|---|---|---|---|---|---|
| 1 | ITR | 1.315 | 24.335 | 1 | 72 | 5.039E-006 |
| 2 | SDR | 2.180 | 19.885 | 2 | 71 | 1.389E-007 |
| 3 | ASD | 2.621 | 15.713 | 3 | 70 | 6.518E-008 |

Note: Maximum significance of F for entry is 0.05 and for removal is 0.10. aASD: age-series distortion rate; ITR: index of teacher regularity; SDR: dropout rate. Source: Research database. Authors’ elaboration.
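The exact F column of Table 2 can be recovered from the D² column. For two groups, Hotelling’s T² test gives F = [(N - p - 1) / (p(N - 2))] · [n₁n₂ / N] · D², with df = (p, N - p - 1). The sketch assumes 37 schools per group in the estimate sample. This even split is not stated in the text, but it is consistent with the reported df2 values (N = 74) and with the F statistics, which it reproduces up to the rounding of D².

```python
def d2_to_exact_f(d2, n1, n2, p):
    """Convert a two-group Mahalanobis D^2 on p variables into Hotelling's exact F."""
    N = n1 + n2
    f = (N - p - 1) / (p * (N - 2)) * (n1 * n2 / N) * d2
    return f, p, N - p - 1


# D^2 values from Table 2; 37 schools per group is an assumption.
for step, d2 in [(1, 1.315), (2, 2.180), (3, 2.621)]:
    f, df1, df2 = d2_to_exact_f(d2, 37, 37, step)
    print(f"step {step}: F = {f:.2f}, df = ({df1}, {df2})")
```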

The absence of multicollinearity among the independent variables can now be verified. Multicollinearity means that an independent variable is largely explained by one or more other variables, so that it adds little explanatory power to the set of variables in the model. Its impact on discriminant analysis is measured by tolerance, the proportion of a variable’s variation not explained by the variables already in the model. The tolerance values indicate absence of multicollinearity (tolerance ≥ 0.19), as suggested by Hair et al. (2009) for small samples. In addition, the structure correlations (simple linear correlations between each independent variable and the discriminant function) show that none of the variables excluded by the stepwise procedure (which prevents variables without statistical significance from entering the function) has a substantial loading (±0.4 or more, following Hair et al., 2009), which reinforces the proposed discriminant function.
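Tolerance can be computed from the pooled within-group correlation matrix R as 1 / (R⁻¹)ⱼⱼ, which equals 1 - R²ⱼ for variable j regressed on the others. The NumPy sketch below assumes this within-group definition, which we take to be the relevant one for discriminant analysis. It flags variables under the 0.19 cut-off quoted from Hair et al. (2009). The data are synthetic.

```python
import numpy as np


def within_group_tolerances(*groups):
    """Tolerance of each variable from the pooled within-group correlation matrix."""
    mats = [np.asarray(g, float) for g in groups]
    N, k = sum(len(m) for m in mats), len(mats)
    S = sum((len(m) - 1) * np.cov(m, rowvar=False) for m in mats) / (N - k)
    sd = np.sqrt(np.diag(S))
    R = S / np.outer(sd, sd)                   # pooled within-group correlations
    return 1.0 / np.diag(np.linalg.inv(R))    # 1 - R^2 of each variable on the others


# Synthetic data: the third variable is almost the sum of the first two.
rng = np.random.default_rng(3)
groups = []
for shift in (0.0, 1.0):
    X = rng.normal(shift, 1.0, size=(60, 2))
    third = X[:, 0] + X[:, 1] + rng.normal(0.0, 0.01, size=60)
    groups.append(np.column_stack([X, third]))
tol = within_group_tolerances(*groups)
print(np.round(tol, 3), tol >= 0.19)
```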

Thus, although testing the differences between group means had made the approval rate a candidate for the discriminant analysis, it did not enter the function, and its discriminant loading (-0.242) falls short of the ±0.4 threshold. In addition, the canonical correlation (rc = 0.634), which measures the association between the discriminant scores and the groups, indicates that the function accounts for 40.2% of the discrimination between the groups (r²c = 0.402). Therefore, at this stage of the analysis, the variables age-series distortion, index of teacher regularity and student dropout rate together explain 40.2% of the discrimination between Espírito Santo schools with the worst and best performances in Prova Brasil 2013. Each of these variables, however, points to a different dimension of teaching.

Formally, the significance test of the discriminant function (Wilks’ lambda) provides evidence to reject the null hypothesis that the population means of the two groups are equal (p-value < 0.05); that is, the discriminant function is statistically significant. These results indicate a high degree of statistical significance and a moderate fit to the data (rc = 0.634). Values of this order are common in applications of discriminant analysis in the social sciences. For example, Bervian and Corrêa (2015) analysed the relationship between the grades obtained in the 2012 Exame Nacional de Desempenho dos Estudantes (ENADE) for business administration courses, the academic organization of the participating institutions and the number of students enrolled in each, with a sample of 53 higher education institutions in the state of Santa Catarina, and obtained rc = 0.202. Moreover, variables not included in the model may influence the results. The discriminant function obtained is therefore an important element for understanding the research question and for informing future studies.
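With two groups there is a single discriminant function, so Wilks’ lambda follows directly from the canonical correlation: Λ = 1 - r²c. The sketch below applies this to the reported rc = 0.634 and adds Bartlett’s chi-square approximation, χ² = -[N - 1 - (p + g)/2] ln Λ with p(g - 1) degrees of freedom. Only the rounded rc is reported, and N = 74 (the estimate sample, as in Table 2’s degrees of freedom) is an assumption, so the resulting values are approximations rather than the figures computed in SPSS.

```python
import math


def two_group_summary(rc, n_obs, n_vars, n_groups=2):
    """Eigenvalue, r_c^2, Wilks' lambda and Bartlett's chi-square from r_c."""
    rc2 = rc ** 2
    eigenvalue = rc2 / (1 - rc2)
    wilks = 1 - rc2                    # valid because two groups give one function
    chi2 = -(n_obs - 1 - (n_vars + n_groups) / 2) * math.log(wilks)
    df = n_vars * (n_groups - 1)
    return eigenvalue, rc2, wilks, chi2, df


eig, rc2, lam, chi2, df = two_group_summary(0.634, n_obs=74, n_vars=3)
print(f"eigenvalue={eig:.3f}, rc2={rc2:.3f}, lambda={lam:.3f}, chi2={chi2:.2f}, df={df}")
```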

The discriminant weights of the variables in the function (age-series distortion, index of teacher regularity and student dropout rate) also have a substantial impact on the model, as shown in Table 3, which presents the standardized and unstandardized coefficients (discriminant weights) of the canonical discriminant function for the selected variables.

Table 3 - Discriminant coefficients.

| Variables a | Unstandardized coefficients | Standardized coefficients |
|---|---|---|
| (constant) | 1.916 | |
| ASD | 0.051 | 0.401 |
| ITR | -1.488 | -0.687 |
| SDR | 0.159 | 0.453 |

aASD: age-series distortion rate; ITR: index of teacher regularity; SDR: dropout rate. Source: Research database. Authors’ elaboration.

Discriminant weights are one way of evaluating the importance of individual variables to the discriminant function. The average profile of the two school groups (worst and best performing schools in the 2013 Prova Brasil) helps interpret the standardized and unstandardized discriminant coefficients, the discriminant loadings (structure matrix) and their signs. In the present study, positive signs are associated with variables that take higher values in the worst performing group, and negative signs with variables that take higher values in the best performing group. Signs should therefore be interpreted as indicating a pattern that distinguishes the groups. Using the unstandardized coefficients, the discriminant function can be written as (Equation 2):

$$Z = 1.916 + 0.051\,\text{ASD} - 1.488\,\text{ITR} + 0.159\,\text{SDR} \tag{2}$$

where:

Z: discriminant Z score of the discriminant function;

ASD: age-series distortion;

ITR: index of teacher regularity;

SDR: student dropout rate.
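Equation 2 can be applied to a school’s indicators as in the sketch below. The coefficients are those of Table 3. The school values are hypothetical, and the sketch assumes the variables enter in the units used for estimation, which the text does not specify. Under the sign convention described above, higher ASD and SDR push Z towards the side associated with the worst performing group, while higher ITR pulls it the other way.

```python
# Unstandardized coefficients of the canonical discriminant function (Table 3).
INTERCEPT = 1.916
WEIGHTS = {"ASD": 0.051, "ITR": -1.488, "SDR": 0.159}


def discriminant_score(school):
    """Equation 2: Z = 1.916 + 0.051*ASD - 1.488*ITR + 0.159*SDR."""
    return INTERCEPT + sum(w * school[name] for name, w in WEIGHTS.items())


# Two hypothetical schools that differ only in teacher regularity.
school_a = {"ASD": 30.0, "ITR": 2.5, "SDR": 5.0}
school_b = {"ASD": 30.0, "ITR": 3.5, "SDR": 5.0}
za, zb = discriminant_score(school_a), discriminant_score(school_b)
print(round(za, 3), round(zb, 3), round(zb - za, 3))   # 0.521 -0.967 -1.488
```

One extra unit of ITR lowers Z by exactly its unstandardized weight, which is why these weights, unlike the standardized ones, are the ones used to score individual schools.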

Thus, among the many contextual variables used to characterize the country’s schools, it is possible to determine which best discriminated students’ performance in Prova Brasil. As shown here, the worst performing schools in the 2013 Prova Brasil had higher age-series distortion and student dropout rates and a lower index of teacher regularity. In statistical terms, these variables maximized the differences between the best and worst performing schools.

The student dropout rate is closely connected to age-series distortion, since students who drop out may return to school, which raises age-series distortion rates. There is an established literature on school flow and, specifically, on the effects of programs against dropout and age-series distortion (e.g., Fletcher and Castro, 1993; Fletcher and Ribeiro, 1988; Teixeira de Freitas, 1947). For Ferrão, Beltrão and Santos (2002), high age-series distortion rates negatively affect student learning. According to Ferrão, Beltrão and Santos (2002) and Klein and Ribeiro (1995), age-series distortion can be controlled by non-retention policies without loss of learning. For Menezes-Filho (2007), strategies for reducing grade repetition can improve performance, but social programs against dropout can have the opposite effect.

Whatever the solution to the dropout problem, it should be noted that dropout, which leads to students falling behind in school, can undermine the performance of students in the Espírito Santo network. Therefore, while acknowledging dissenting voices in the literature, the present study holds that once dropout and age-series distortion rates are brought under control, students’ performance in Prova Brasil can improve.

Among the three contextual variables, however, the discriminant function attributes the greatest effect to the index of teacher regularity, which has the largest standardized coefficient in absolute value (-0.687). This index refers to the bonds between teachers and schools: the stronger these bonds, the better the students’ chances of higher achievement in Prova Brasil. But how should these relations be interpreted? Are we talking merely about professional ties, or about some kind of rootedness in the social context?

Rather than answering these questions hastily, it is important to note that the teacher effect is not always related to effort indicators (e.g., Hanushek and Raymond, 2005; Hanushek and Rivkin, 2006). On the contrary, in contexts such as the Espírito Santo network, characteristics attributed to bad teachers, such as apathy (e.g., Soares, 2005), disinterest (e.g., Santos, 2002), loss of authority (e.g., Paiva, Junqueira and Muls, 1997) and inadequate initial training (e.g., Albanez, Ferreira and Franco, 2002), have less impact on school performance than the kind of bond these professionals have with the school. After all, as Biondi and Felício (2007) observed when reflecting on primary education students’ performance in mathematics tests, low teacher turnover, working conditions and experience with the teaching context seem to be elements that positively affect learning.

To further support the discriminant function, the results of the classification function (Fisher’s linear discriminant function) were analysed. After the discriminant analysis was applied, 82.4% of the estimate sample and 72% of the test sample were correctly classified. Since the groups have the same size, chance accuracy (without the discriminant function) is 50%, so predictive accuracy exceeds chance by 32.4 and 22 percentage points, respectively. Both values exceed the benchmark suggested by Hair et al. (2009), who recommend a classification accuracy at least one quarter higher than chance accuracy (in this case, above 62.5%). Although these percentages (82.4% and 72%) may seem modest, values of this order are common in practical applications of discriminant analysis in the social sciences.

Moreover, the accuracy on the test sample, made up of schools not used to estimate the discriminant function, shows that the model can classify schools outside the estimation data reasonably well. Among the worst performing schools, 86.5% of the estimate sample and 84% of the test sample were correctly classified; among the best performing schools, 78.4% and 60%, respectively. The function therefore discriminates the worst performing schools better.
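These hit ratios can be reproduced from a classification matrix. The correct-classification counts below are back-calculated from the reported percentages, assuming 37 schools per group in the estimate sample and 25 per group in the test sample. These counts reproduce every reported percentage, but the article does not list them. The benchmark is the proportional chance criterion increased by one quarter, following Hair et al. (2009).

```python
def hit_ratios(correct, totals):
    """Per-group and overall proportion of correctly classified schools."""
    per_group = {g: correct[g] / totals[g] for g in totals}
    overall = sum(correct.values()) / sum(totals.values())
    return per_group, overall


def chance_benchmark(group_sizes):
    """Proportional chance criterion and the 25%-above-chance benchmark."""
    n = sum(group_sizes)
    c_pro = sum((s / n) ** 2 for s in group_sizes)
    return c_pro, 1.25 * c_pro


# Correct counts back-calculated from the reported percentages (an assumption).
samples = {
    "estimate": ({"worst": 32, "best": 29}, {"worst": 37, "best": 37}),
    "test": ({"worst": 21, "best": 15}, {"worst": 25, "best": 25}),
}
for name, (correct, totals) in samples.items():
    per_group, overall = hit_ratios(correct, totals)
    c_pro, benchmark = chance_benchmark(list(totals.values()))
    print(name, {g: round(r, 3) for g, r in per_group.items()},
          round(overall, 3), overall > benchmark)
```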

To verify whether the classification matrix discriminates better than a random model, Press’s Q statistic was calculated. Both results (estimate = 31.14; test = 9.68) exceed the critical value of 6.63 (chi-square with 1 degree of freedom at the 0.01 significance level). Accordingly, the classification of schools into groups was significantly better than a random assignment for both the estimate and the test samples; that is, classification accuracy exceeded chance by a statistically significant margin.
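Press’s Q compares the number of correct classifications with what chance would produce: Q = (N - nK)² / [N(K - 1)], where N is the sample size, n the number of correct classifications and K the number of groups. Using the counts back-calculated from the reported hit ratios (61 of 74 and 36 of 50, an assumption), the sketch reproduces the two reported values.

```python
def press_q(n_total, n_correct, n_groups):
    """Press's Q statistic for a classification matrix."""
    return (n_total - n_correct * n_groups) ** 2 / (n_total * (n_groups - 1))


CRITICAL_CHI2_1DF_001 = 6.63   # chi-square critical value, 1 df, alpha = 0.01
for name, n_total, n_correct in [("estimate", 74, 61), ("test", 50, 36)]:
    q = press_q(n_total, n_correct, n_groups=2)
    print(name, round(q, 2), q > CRITICAL_CHI2_1DF_001)
# estimate 31.14 True
# test 9.68 True
```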

From the standpoint of school effectiveness research, the evidence obtained suggests that discriminant analysis can be particularly revealing for understanding Prova Brasil scores. Whereas other data processing practices common in the field treat schools as equivalent and focus on global averages, discriminant analysis makes it possible to take statistical account of the different school profiles found in the particular contexts where more or less effective performances occur. This study thus produced a parsimonious function that indicates, with reasonable predictive accuracy, which factors should be prioritized in specific contexts to change school performance in large-scale assessments.

In a recent study that also analysed the relationship between school context and performance in the Espírito Santo network, Américo and Lacruz (2017) applied multiple linear regression to a sample similar to the one used here. They observed that, in addition to the student dropout rate and the index of teacher regularity, the teacher effort indicator also affected the performance of Espírito Santo schools in Prova Brasil. Their analysis highlighted the importance of the “teaching effect” for reducing the negative impacts of unfavourable socioeconomic factors on the quality of education in the state. Discriminant analysis, however, identified age-series distortion as an additional factor for understanding performance differences among the state’s schools: although it is not representative in global analyses, it acts as a substantive discriminator when the focus is on the best and worst performing schools.

This finding seems crucial for both educational research and public education management, as the design of educational policies currently lacks analytical tools that allow different schools to be treated differently. In Espírito Santo, actions such as reducing school dropout, so that schools with insufficient results could reach the desired results, could have been the strategic decision taken in light of the 2013 Prova Brasil results. What was witnessed instead was the systematic closure of schools, justified by Espírito Santo’s Department of Education with the argument that “there being fewer students, there should be fewer classes and schools” (Américo and Lacruz, 2017, p. 871), in order to optimize school effectiveness.

FINAL CONSIDERATIONS

Against the contemporary Brazilian managerial and informational background, in which education is increasingly framed in terms of accountability, broadening the debate about the meanings of school performance inequalities in large-scale assessments seems an increasingly crucial issue for any future educational project. After all, this is an arena of disputes with the power to define what is or is not desirable for contemporary schooling.

This study applied a statistical method little explored in the Brazilian educational field: discriminant analysis. It neither essentialized the official Prova Brasil metrics nor disregarded the theoretical contributions of other studies on educational effectiveness. Instead, it presented an alternative, applicable statistical model for analysing educational quality indicators, one that allows the diversity of school contexts and performances to be considered fairly and accurately. It is an alternative way to interpret schools with different contexts and performances, responding to the actual needs of each educational reality.

Our findings suggest that discriminant analysis makes it possible to locate the variables that best distinguish schools with different performances and to build discriminant functions that represent these differences on the basis of INEP effectiveness criteria. The discriminant function thus allows researchers, managers and education policy-makers to plan and reorganize actions and investments based on priority contextual variables, intervening in student and school performance. This form of classification reduces quality indicators in education to a manageable number, which gives the method outlined here analytical, managerial and policy relevance.

Therefore, as an alternative analytical tool for school effectiveness studies in Brazil, discriminant analysis offers complex perspectives on plural, heterogeneous and ever-changing realities. With it, other investigations can trace the profiles of schools and school systems (description), their differentiation (inference) and/or their classification (prediction). It thus becomes possible to understand the role and potential of different primary education institutions in dealing with educational inequalities in our country.

Discriminant analysis makes it possible to describe how groups of schools differ with respect to underlying variables. It would be interesting, for example, to describe the profiles of different school segments to understand how the managers of the best performing schools differ from those of the worst performing schools. Other studies could investigate whether apparent differences between groups of schools are significant, for instance whether schools recognized as innovative perform statistically differently from institutions without that label. In either case, discriminant analysis provides a test of the hypothesis that all group means are equal.

Discriminant analysis also makes it possible to predict group membership, that is, to use the discriminant function to classify schools (observations) for which the value of the dependent variable (Prova Brasil score) is not observed. It is therefore feasible to identify the schools more prone to poor performance. In this case, a school’s discriminant score could serve as a measure of merit, such as a budget score, for allocating resources under budget constraints. Discriminant analysis can thus complement the results obtained through other techniques and improve the analyses performed.
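Predicting group membership for a school whose Prova Brasil score is unknown amounts to computing its Z score with Equation 2 and comparing it with a cutting score between the two group centroids. The article does not report the centroids, so the values below (±0.8) are purely hypothetical, as are the school indicators. The weighted cutting score follows Hair et al. (2009) and reduces to the midpoint when the groups have equal size. Ranking schools by Z illustrates the budget-scoring idea.

```python
def discriminant_score(asd, itr, sdr):
    """Equation 2 with the unstandardized coefficients of Table 3."""
    return 1.916 + 0.051 * asd - 1.488 * itr + 0.159 * sdr


def cutting_score(centroid_a, centroid_b, n_a, n_b):
    """Weighted cutting score; the midpoint of the centroids when n_a == n_b."""
    return (n_a * centroid_b + n_b * centroid_a) / (n_a + n_b)


def predict_group(z, centroid_worst, centroid_best, n_worst, n_best):
    """Assign a school to the group on whose side of the cutting score z lies."""
    cut = cutting_score(centroid_worst, centroid_best, n_worst, n_best)
    worst_is_high = centroid_worst > centroid_best
    return "worst" if (z > cut) == worst_is_high else "best"


# Hypothetical centroids and schools (ASD, ITR, SDR); none come from the article.
CENTROID_WORST, CENTROID_BEST = 0.8, -0.8
schools = {"S1": (30.0, 2.5, 5.0), "S2": (15.0, 3.8, 1.0), "S3": (40.0, 2.0, 9.0)}
scores = {name: discriminant_score(*vals) for name, vals in schools.items()}
# Higher Z lies closer to the (hypothetical) worst centroid, so rank descending.
for name in sorted(scores, key=scores.get, reverse=True):
    print(name, round(scores[name], 3),
          predict_group(scores[name], CENTROID_WORST, CENTROID_BEST, 37, 37))
```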

REFERENCES

Akkari, A. Internacionalização das políticas educacionais. Petrópolis: Vozes, 2011.

Albanez, A.; Ferreira, F.; Franco, F. Qualidade e equidade no ensino fundamental brasileiro. Pesquisa e Planejamento Econômico, Rio de Janeiro: IPEA, v. 32, n. 3, p. 453-475, dez. 2002.

Alves, M. T. G.; Soares, J. F. Contexto escolar e indicadores educacionais: condições desiguais para a efetivação de uma política de avaliação educacional. Educação e Pesquisa, São Paulo: USP, v. 39, n. 1, p. 177-194, jan./mar. 2013. http://dx.doi.org/10.1590/S1517-97022013000100012

Américo, B. L.; Lacruz, A. J. Contexto e desempenho escolar: análise das notas na Prova Brasil das escolas capixabas por meio de regressão linear múltipla. Revista de Administração Pública, Rio de Janeiro: FGV, v. 51, n. 5, p. 854-878, out. 2017. http://dx.doi.org/10.1590/0034-7612160483

Andrade, M.; Franco, C.; Carvalho, J. P. de. Gênero e desempenho em matemática ao final do ensino médio: quais as relações? Estudos em Avaliação Educacional, São Paulo: Fundação Carlos Chagas, v. 14, n. 27, p. 77-96, jan./jun. 2003.

Andrade, J. M.; Laros, J. A. Fatores associados ao desempenho escolar: um estudo multinível com os dados do SAEB/2001. Psicologia: Teoria e Pesquisa, Brasília: UnB, v. 23, n. 1, p. 33-42, jan./mar. 2007. http://dx.doi.org/10.1590/S0102-37722007000100005

Barbosa, M. E.; Fernandes, C. Modelo multinível: uma aplicação a dados de avaliação educacional. Estudos em Avaliação Educacional, São Paulo: Fundação Carlos Chagas, v. 9, n. 22, p. 135-153, jul./dez. 2000. http://dx.doi.org/10.18222/eae02220002220

Beech, J. A internacionalização das políticas educativas na América Latina. Currículo Sem Fronteiras, [s.l.:s.n.], v. 9, n. 2, p. 32-50, jul./dez. 2009.

Bervian, L. M.; Corrêa, M. ENADE: impactos da categoria administrativa, organização acadêmica e número de participantes no desempenho dos estudantes. Revista de Administração Educacional, Recife: UFPE, v. 3, n. 2, p. 6-27, ago. 2015.

Biondi, R. L.; Felício, F. Atributos escolares e o desempenho dos estudantes: uma análise em painel dos dados do SAEB. Brasília, DF: INEP, 2007.

Bonamino, A. et al. Os efeitos das diferentes formas de capital no desempenho escolar: um estudo à luz de Bourdieu e de Coleman. Revista Brasileira de Educação, Rio de Janeiro, ANPEd, v. 15, n. 45, p. 487-594, dez. 2010. http://dx.doi.org/10.1590/S1413-24782010000300007

Bonamino, A.; Souza, S. Z. Três gerações de avaliação da educação básica no Brasil. Educação e Pesquisa, São Paulo: USP, v. 38, n. 2, p. 373-388, jun. 2012. http://dx.doi.org/10.1590/S1517-97022012005000006

Brasil. Portaria ministerial n. 931, de 21 de março de 2005. Institui o Sistema de Avaliação da Educação Básica - SAEB, que será composto por dois processos de avaliação: a Avaliação Nacional da Educação Básica - ANEB, e a Avaliação Nacional do Rendimento Escolar - ANRESC. Diário Oficial da União, Brasília, 22 mar. 2005. Seção 1. p. 16-17.

Carvallo-Pontón, M. Eficacia escolar: antecedentes, hallazgos y futuro. Revista Internacional de Investigación en Educación, Jalisco: Pontificia Universidad Javeriana, v. 3, n. 5, p. 199-214, set. 2010.

Coelho, M. I. M. Vinte anos de avaliação da educação básica no Brasil: aprendizagens e desafios. Ensaio: Avaliação e Políticas Públicas em Educação, Rio de Janeiro: CESGRANRIO, v. 16, n. 59, p. 229-258, jun. 2008. http://dx.doi.org/10.1590/S0104-40362008000200005

Coleman, J. S. et al. Equality of educational opportunity. Washington, DC: US Department of Health, Education & Welfare, 1966.

Edmonds, R. Effective schools for the urban poor. Educational Leadership, Alexandria: ASCD, v. 37, n. 1, p. 15-27, out. 1979.

Eisenbeis, R. A.; Avery, R. B. Discriminant analysis and classification procedures. Lexington: Heath, 1972.

Ferreira, A. I.; Hill, M. M. Diferenças de cultura entre instituições de ensino superior público e privado: um estudo de caso. Psicologia, Lisboa: APP, v. 21, n. 1, p. 7-26, jan./jun. 2007.

Ferrão, M. E.; Couto, A. Indicador de valor acrescentado e tópicos sobre a consistência e estabilidade: uma aplicação ao Brasil. Ensaio: Avaliação de Políticas Públicas Educacionais, Rio de Janeiro: CESGRANRIO, v. 21, n. 78, p. 131-164, mar. 2013. http://dx.doi.org/10.1590/S0104-40362013000100008

Ferrão, M. E.; Fernandes, C. O. O efeito-escola e a mudança: dá para mudar? Evidências da investigação brasileira. REICE, Madrid, v. 1, n. 1, p. 1-13, jan./jun. 2003.

Ferrão, M. E. et al. O SAEB - Sistema Nacional de Avaliação da Educação Básica: objetivos, características e contribuições na investigação da escola eficaz. Revista Brasileira de Estudos de População, Belo Horizonte: ABEP, v. 18, n. 1/2, p. 111-130, jul./dez. 2001.

Ferrão, M. E.; Beltrão, K. I.; Santos, D. P. Políticas de não repetência e a qualidade da educação: evidências obtidas a partir da modelagem dos dados da 4ª série do SAEB-99. Estudos em Avaliação Educacional, São Paulo: Fundação Carlos Chagas, v. 13, n. 26, p. 47-74, jul./dez. 2002. https://doi.org/10.18222/eae02620022185

Fisher, R. A. The use of multiple measurements in taxonomic problems. Annals of Eugenics, United Kingdom: [s.n.], v. 7, p. 179-188, 1936. https://doi.org/10.1111/j.1469-1809.1936.tb02137.x

Fletcher, P. R. À procura do ensino eficaz. Brasília, DF: PNUD; MEC; DAEB, 1998.

Fletcher, P. R.; Castro, C. M. Mitos, estratégias e prioridades para o ensino de 1º grau. Estudos em Avaliação Educacional, São Paulo: Fundação Carlos Chagas, v. 4, n. 8, p. 39-56, jul./dez. 1993. https://doi.org/10.18222/eae00819932340

Fletcher, P. R.; Ribeiro, S. C. A educação na estatística nacional. In: Sawyer, O. D. (Org.). PNADs em foco: anos 80. São Paulo: ABEP, 1988.

Franco, C.; Bonamino, A. A pesquisa sobre característica de escolas eficazes no Brasil: breve revisão dos principais achados e alguns problemas em aberto. Educação on-line, Rio de Janeiro: PUC, v. 1, p. 1-13, 2005.

Franco, C. et al. Qualidade e equidade em educação: reconsiderando o significado de “fatores intraescolares”. Ensaio: Avaliação de Políticas Públicas Educacionais, Rio de Janeiro: CESGRANRIO, v. 15, n. 55, p. 277-298, abr./jun. 2007. http://dx.doi.org/10.1590/S0104-40362007000200007

Hair, J. F. et al. Análise multivariada de dados. Porto Alegre: Bookman, 2009.

Hanushek, E. A.; Raymond, M. E. Does school accountability lead to improved student performance? Journal of Policy Analysis and Management, United States: John Wiley & Sons, v. 24, n. 2, p. 297-327, mar. 2005. https://doi.org/10.1002/pam.20091

Hanushek, E. A.; Rivkin, S. G. Teacher quality. In: Hanushek, E. A.; Welch, F. Handbook of the economics of education. Amsterdam: Elsevier, 2006.

INEP - Instituto Nacional de Estudos e Pesquisas Educacionais Anísio Teixeira. Desempenho por escola na Prova Brasil 2013 - código da escola 32045379. Brasília, DF: 2015. Disponível em: <http://sistemasprovabrasil.inep.gov.br/provaBrasilResultados/view/boletimDesempenho/boletimDesempenho.seam?cid=1293#>. Acesso em: 9 jan. 2018.

Karino, C. A.; Laros, J. A. Estudos brasileiros sobre eficácia escolar: uma revisão de literatura. Revista Examen, Brasília: CEBRASPE, v. 1, n. 1, p. 95-126, jul./dez. 2017.

Klein, R.; Ribeiro, S. C. A pedagogia da repetência ao longo das décadas. Ensaio: Avaliação e Políticas Públicas em Educação, Rio de Janeiro: CESGRANRIO, v. 3, n. 6, p. 55-62, jan./mar. 1995.

Koslinski, M. C.; Alves, F. Novos olhares para as desigualdades de oportunidades educacionais: a segregação residencial e a relação favela-asfalto no contexto carioca. Educação & Sociedade, Campinas: CEDES, v. 33, n. 120, p. 805-831, jul./set. 2012. http://dx.doi.org/10.1590/S0101-73302012000300009

Laros, J. A.; Marciano, J. L. Análise multinível aplicada aos dados do NELS 88. Estudos em Avaliação Educacional, São Paulo: Fundação Carlos Chagas, v. 19, n. 40, p. 263-278, maio/ago. 2008. http://dx.doi.org/10.18222/eae194020082079

Laros, J. A.; Marciano, J. L.; Andrade, J. M. Fatores associados ao desempenho escolar em Português: um estudo multinível por regiões. Ensaio: Avaliação de Políticas Públicas Educacionais, Rio de Janeiro: CESGRANRIO, v. 20, n. 77, p. 623-646, out./dez. 2012. http://dx.doi.org/10.1590/S0104-40362012000400002

Machado, C.; Alavarse, O. M.; Oliveira, A. S. Avaliação da educação básica e qualidade do ensino: estudo sobre os anos finais do ensino fundamental da rede municipal de ensino de São Paulo. Revista Brasileira de Política e Administração da Educação, Goiás: ANPAE, v. 31, n. 2, p. 335-353, maio/ago. 2015. https://doi.org/10.21573/vol31n22015.61731

Menezes-Filho, N. A. Os determinantes do desempenho escolar do Brasil. São Paulo: IFB; IBMEC/SP; FEA/SP, 2007.

Mortimore, P. et al. School matters: the junior years. Shepton Mallet: Open Books, 1988.

Nascimento, P. A. M. M. Desempenho escolar e gastos municipais por aluno em educação: relação observada em municípios baianos para o ano 2000. Ensaio: Avaliação de Políticas Públicas Educacionais, Rio de Janeiro: CESGRANRIO, v. 15, n. 56, p. 393-412, set. 2007. http://dx.doi.org/10.1590/S0104-40362007000300006

Paiva, V.; Junqueira, C.; Muls, L. Prioridade ao ensino básico e pauperização docente. Cadernos de Pesquisa, São Paulo: Fundação Carlos Chagas, v. 100, p. 109-119, mar. 1997.

Palermo, G. A.; Silva, D. B. N.; Novellino, M. S. F. Fatores associados ao desempenho escolar: análise da proficiência em matemática dos alunos do 5º ano do ensino fundamental da rede municipal do Rio de Janeiro. Revista Brasileira de Estudos de População, Belo Horizonte: ABEP, v. 31, n. 2, p. 367-384, dez. 2014. http://dx.doi.org/10.1590/S0102-30982014000200007

Pestana, M. I. G. S. O sistema nacional de avaliação da educação básica. Estudos em Avaliação Educacional, São Paulo: Fundação Carlos Chagas, v. 1, n. 5, p. 81-84, jan./jun. 1992.

Rodrigues, C. G.; Rios-Neto, E. L. G.; Pinto, C. C. X. Diferenças intertemporais na média e distribuição do desempenho escolar no Brasil: o papel do nível socioeconômico, 1997 a 2005. Revista Brasileira de Estudos de População, Belo Horizonte: ABEP, v. 28, n. 1, p. 5-36, jun. 2011. http://dx.doi.org/10.1590/S0102-30982011000100002

Rutter, M. et al. Conclusões, especulações e implicações. In: Brooke, N.; Soares, J. F. (Eds.). Pesquisa em eficácia escolar: origem e trajetórias. Belo Horizonte: Editora UFMG, 1979.

Santos, L. L. C. P. Políticas públicas para o ensino fundamental: Parâmetros Curriculares Nacionais e Sistema Nacional de Avaliação (SAEB). Educação & Sociedade, Campinas: CEDES, v. 23, n. 80, p. 346-367, set. 2002.

Scheerens, J. School effectiveness research and the development of process indicators of school functioning. School Effectiveness and School Improvement, United Kingdom, v. 1, n. 1, p. 61-80, jan. 1990. https://doi.org/10.1080/0924345900010106

Silva, J.; Bonamino, A. M. C.; Ribeiro, V. M. Escolas eficazes na educação de jovens e adultos: estudo de casos na rede municipal do Rio de Janeiro. Educação em Revista, Belo Horizonte: UFMG, v. 28, n. 2, p. 367-392, jun. 2012. http://dx.doi.org/10.1590/S0102-46982012000200017

Soares, J. F. Melhoria do desempenho cognitivo dos alunos no ensino fundamental. Cadernos de Pesquisa, São Paulo: Fundação Carlos Chagas, v. 37, n. 130, p. 135-160, abr. 2007. http://dx.doi.org/10.1590/S0100-15742007000100007

Soares, J. F.; Alves, M. T. G. Desigualdades raciais no sistema brasileiro de educação básica. Educação e Pesquisa, São Paulo: USP, v. 29, n. 1, p. 147-165, jun. 2003. http://dx.doi.org/10.1590/S1517-97022003000100011

Soares, J. F.; Andrade, R. J. Nível socioeconômico, qualidade e equidade das escolas de Belo Horizonte. Ensaio: Avaliação de Políticas Públicas Educacionais, Rio de Janeiro: CESGRANRIO, v. 14, n. 50, p. 107-126, mar. 2006. http://dx.doi.org/10.1590/S0104-40362006000100008

Soares, T. M. Modelo de três níveis hierárquicos para a proficiência dos alunos de 4ª série avaliados no teste de língua portuguesa do SIMAVE/PROEB 2002. Revista Brasileira de Educação, Rio de Janeiro: ANPEd, v. 29, p. 73-88, ago. 2005. http://dx.doi.org/10.1590/S1413-24782005000200007

Stocco, S.; Almeida, L. C. Escolas municipais de Campinas e vulnerabilidade sociodemográfica: primeiras aproximações. Revista Brasileira de Educação, Rio de Janeiro: ANPEd, v. 16, n. 48, p. 663-814, dez. 2011. http://dx.doi.org/10.1590/S1413-24782011000300008

Teixeira, R. A. Espaços, recursos escolares e habilidades de leitura de estudantes da rede pública municipal do Rio de Janeiro: estudo exploratório. Revista Brasileira de Educação, Rio de Janeiro: ANPEd, v. 14, n. 41, p. 232-390, ago. 2009. http://dx.doi.org/10.1590/S1413-24782009000200003

Teixeira de Freitas, M. A. A escolaridade media no ensino primário brasileiro. Revista Brasileira de Estatística, Rio de Janeiro: IBGE, v. 8, n. 30/31, p. 295-474, abr./set. 1947.

Van Den Eeden, P.; Hox, J.; Hauer, J. Theory and model in multilevel research: convergence or divergence? Amsterdam: SISWO, 1990.

Zaponi, M.; Valença, E. Política de responsabilização educacional: a experiência de Pernambuco: ABAVE, 2009.

1 Student performance in Prova Brasil is directly tied to the assessment perspective adopted by INEP and to the content knowledge it selects. Accordingly, how closely students meet the official criteria reflects an individualized testing model that assesses cognitive competences and skills, which are subdivided into topics and descriptors. Each skill is therefore demonstrated through the association among curricular content knowledge, mental operations, and responses that can be placed on a scale to measure students' apparent competencies in Portuguese language, with a focus on reading, and in mathematics, with a focus on problem solving.

Received: January 29, 2018; Accepted: August 24, 2018