INTRODUCTION

Validity is arguably the most important aspect of any kind of assessment (1-4). However, the overwhelming number of different concepts and beliefs about it has led to some confusion about its significance (5). The term derives from the Latin word “validus” (meaning “strong” or “worthy”). In philosophy, validity is a fundamental concept of logic, used to assess deductive arguments about a fact or idea. At the turn of the 20th century, the concept emerged in education and psychology as a way to justify a growing number of tests in the form of structured assessment. These tests were used to support complex and important decisions, from selection processes to educational policies. As a result, the quest for validity unleashed a tremendous empirical effort to demonstrate their intrinsic efficacy. Karl Pearson's introduction of the correlation coefficient in 1896 made it possible to estimate the correlation between different criteria. Not long after that, various psychometric strategies began to be used as validity arguments (6). Soon, different views and constructs fragmented the concept of validity into an increasing number of “types of validity” (7). By 1955, the Joint Committee of the American Psychological Association (APA) was officially using four distinct varieties: “content validity”, “predictive validity”, “concurrent validity” and “construct validity”. This fragmented approach overemphasized the collection of evidence through measurement and neglected the analysis of the consequences and uses of the test. Cronbach and Meehl revised these categories and created what would become the cornerstone of the current concept of validity (8).
They proposed that the validation process should not be limited to gathering evidence. Instead, it should involve an extensive analysis of the findings, based on an explicit statement of the proposed interpretation. In Kane’s words, “the variable of interest is not out there to be estimated; the variable of interest has to be defined or explicated” (9). Because this approach could be applied to any construct and any validation process, it paved the way for a unified view of validity, in which “all validity is construct validity” (1,10).

Messick, one of the leading figures of the unification movement, proposed that validity should be guided by two questions. The first is scientific in nature and concerns the psychometric properties of the test. The second, essential question is ethical in nature and can only be answered by an extensive analysis of the potential utility and consequences of a test in “terms of human values” (11). Cronbach summarized this new idea by stating that “One validates, not a test, but an interpretation of outcomes from measurement procedure” (12).

Recent decades have seen the creation of a large number of tests to assess different aspects of medical training (knowledge, skills and attitudes) (13,14). Medical teachers are now hard-pressed to demonstrate the efficacy of their assessment methods. In this scenario, medical education risks returning to an era characterized, in Cronbach’s words, by “sheer exploratory empiricism” (3), in which measurement and the consistency of its results matter more than the assessment itself. This would progressively reduce the meaning of validity from a philosophical exercise to an attribute of a measure.

The consequence of such a restrictive view is the excessive use of different types of tests. This use is driven not by an educational purpose but by the impulse to follow the latest fad, without much reflection on the tests' utility and educational impact (15).

The objective of this analysis is to determine the predominant concept of validity in medical education assessment studies. For this purpose, a bibliometric analysis was conducted covering the last two decades of published assessment studies. The analysis aimed to construct quantitative indicators of the utility of assessment studies and of the perceived change in the concept of validity over that period.

METHODS

Search strategy

The data for this bibliometric analysis were collected from a search of PubMed-indexed articles on the assessment of knowledge in medical education. The search covered the period from January 2001 to August 2019 and combined the terms “validity”, “medical education” and “assessment” with the Boolean operators AND and OR. A review group was formed to ensure expertise in medical education and research methodology. It consisted of two clinicians with postgraduate training in medical education and one university researcher. The members of the review group independently applied the inclusion and exclusion criteria (described below) and then compiled a combined list. A preliminary search of the Cochrane and BEME (Best Evidence Medical Education) review databases was performed to identify similar searches and reviews.
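For illustration only, a date-limited PubMed search of this kind can be expressed as a request to the NCBI E-utilities `esearch` endpoint. The sketch below assumes one possible AND combination of the three terms, because the exact query string used by the authors is not reported. It also assumes filtering on publication date (`datetype=pdat`). The helper name `build_esearch_url` is hypothetical. The code only builds the request URL and does not contact the server.

```python
from urllib.parse import urlencode

ESEARCH = "https://eutils.ncbi.nlm.nih.gov/entrez/eutils/esearch.fcgi"

def build_esearch_url(term: str, mindate: str, maxdate: str, retmax: int = 5000) -> str:
    """Build a PubMed esearch URL restricted to a publication-date window.

    Hypothetical helper: the term passed in is an assumed combination of the
    search terms, not the authors' verbatim query.
    """
    params = {
        "db": "pubmed",
        "term": term,
        "datetype": "pdat",   # filter on publication date
        "mindate": mindate,   # format YYYY/MM/DD
        "maxdate": maxdate,
        "retmax": retmax,     # maximum number of PMIDs returned per request
        "retmode": "json",
    }
    return f"{ESEARCH}?{urlencode(params)}"

# Assumed query: one of the possible Boolean combinations of the three terms.
query = '"validity" AND "medical education" AND "assessment"'
url = build_esearch_url(query, "2001/01/01", "2019/08/31")
print(url)
```

Running each AND/OR variant through the same helper keeps the date window fixed, so results from different term combinations stay comparable.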

Inclusion and exclusion criteria

The inclusion criteria were as follows: (1) study design: studies on learning assessment that included the validation process in their methodology; (2) population: undergraduate and graduate medical students; (3) educational intervention: studies on learning assessment in the cognitive, skills and attitudinal domains. The exclusion criteria were as follows: (1) study design: systematic reviews, other reviews, and studies published before 2001; (2) population: studies that did not focus exclusively on medical students.

Study selection and classification

A two-stage process was used for selection and classification. First, the studies retrieved by the broad search were screened against the eligibility criteria. The authors then made a secondary selection, excluding studies whose validation process was not explicit in the methods section.

The selected studies were then classified into two categories and three levels according to their approach to validity. Category one comprises studies with a fragmented validity concept and is subdivided into two levels. At “level one”, the interpretation of validity was restricted to reporting test scores. At “level two”, test scores were followed by some kind of inference, but without an explicit statement about the utility and consequences of the test. Category two comprises studies with a unified validity concept and consists entirely of “level three”, in which the utility and consequences of the test are made explicit (Table 1).

Table 1 Classification of studies according to the validity concept

| Category | Level | Criteria |
|---|---|---|
| 1 - Studies with a fragmented validity concept | Level one | Studies that do not make the utility of the test explicit and are limited to reporting results and scores. |
| | Level two | Studies that do not make the utility of the test explicit and report results and scores with some kind of inference. |
| 2 - Studies with a unified validity concept | Level three | Studies that use the results and inferences of the validation process to make useful pedagogical decisions for the school. |
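The decision rule behind Table 1 can be sketched as a small function. This is an illustration only. The boolean fields are hypothetical names for the reviewers' judgments, and in the study these judgments were made by the review panel, not by software. The checks are ordered so that each study is placed at the highest level whose criterion it meets.

```python
from __future__ import annotations

from dataclasses import dataclass

@dataclass
class StudyCoding:
    """Reviewer judgments for one study (hypothetical field names)."""
    makes_inference: bool    # scores are followed by some kind of inference
    explicit_utility: bool   # results/inferences are used for explicit pedagogical decisions

def classify(study: StudyCoding) -> tuple[int, int]:
    """Return (category, level) following the Table 1 criteria."""
    if study.explicit_utility:
        return 2, 3          # unified concept: utility and consequences made explicit
    if study.makes_inference:
        return 1, 2          # fragmented concept: scores plus some inference
    return 1, 1              # fragmented concept: scores only

print(classify(StudyCoding(makes_inference=True, explicit_utility=False)))
```

Encoding the criteria this way makes explicit that the levels are nested: a study reaches level three only by meeting a stricter criterion than the two fragmented levels.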

The authors made a preliminary selection and categorization of studies independently, followed by a consensus process to determine the final classification. Any disagreements about the classification were resolved by subsequent discussion in the panel until consensus was reached. In addition, 20% of the selected studies were randomly chosen for double evaluation, for quality assurance and to reduce bias.
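A reproducible way to draw such a random subset for double evaluation is sketched below. The sketch assumes that the selected studies are identified by hypothetical sequential IDs. The seed value is arbitrary and only makes the draw repeatable for auditing. The paper does not report how its random selection was performed.

```python
import random

def draw_double_review_sample(study_ids, fraction=0.2, seed=2019):
    """Randomly select a fraction of studies for independent double evaluation.

    Hypothetical helper: sampling is without replacement, and the fixed seed
    makes the same subset reproducible.
    """
    ids = list(study_ids)
    k = round(len(ids) * fraction)
    rng = random.Random(seed)
    return rng.sample(ids, k)

# Hypothetical IDs 1..716 standing in for the 716 selected studies.
sample = draw_double_review_sample(range(1, 717))
print(len(sample))
```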

The authors also performed a manual search. Based on the article “The top-cited articles in medical education: A bibliometric analysis” (18), they compiled a list of the three most frequently cited journals as the basis for this manual search. Figure 1 shows the search flowchart, from the broad PubMed search to the final classification of the studies.

After the final selection and classification, the studies were also classified according to Miller’s framework for clinical assessment (13), as an auxiliary element of the validity argument. For this purpose, studies were sorted into two main groups. Group (1), “written, oral or computer-based assessment”, corresponds to the first two levels of Miller’s pyramid (“knows” and “knows how”). Group (2), “performance or hands-on assessment”, corresponds to the top two levels (“shows how” and “does”).

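The association between type of assessment and decade was tested with the chi-square test. The proportions of each validity level in the two decades were compared with an independent-samples t-test (Table 2). Statistical analysis was performed with the free software R, version 3.2.0.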

RESULTS

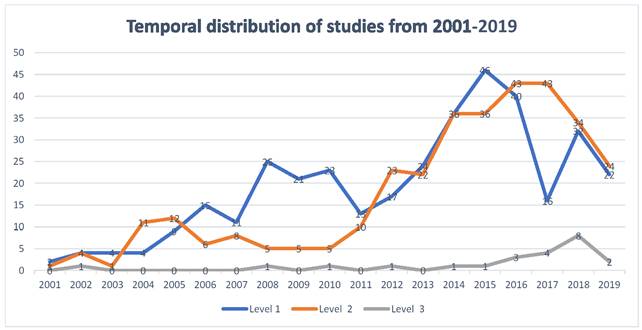

The initial broad search yielded 2823 studies, of which 716 were selected based on the eligibility criteria. Of these, 693 (96.7%) were considered studies with a fragmented concept of validity. A total of 366 studies (50.83%) were classified as “level one”: they limited their validity analysis to the scores resulting from the validation process, without any inference or statement about the consequences or utility of the test. In 327 studies (45.94%), the results were accompanied by some kind of inference, but without any report or discussion of the utility of the test; these studies were classified as “level two”. Only 23 studies (3.2%) met the criteria for “level three”, in which the authors presented the results of the validation process together with an explicit analysis of the consequences and utility of the test. Figure 2 shows the temporal distribution of the selected studies, grouped according to the three levels of the validity concept.

The temporal distribution showed a significant increase in the number of validity studies in the second decade of the present century, from 179 studies in decade I (2001-2010) to 537 studies in decade II (2011-2019) (P<0.001). This increase was not accompanied by a proportional change in the level of validity. The proportion of level 3 studies almost doubled, from 1.78% in the first decade to 3.31% in the second, but this increase was not significant (P=0.356). Thus, 96.27% of the studies in decade II were still considered to have a fragmented validity concept (Table 2).

Table 2 Validity studies in the first two decades of the 21st century

| Articles | Decade I (2001-2010) N | Decade I % (mean) | Decade I SD | Decade II (2011-2019) N | Decade II % (mean) | Decade II SD | Sig.* |
|---|---|---|---|---|---|---|---|
| Level 1 | 118 | 63.07 | 13.82 | 248 | 46.20 | 7.19 | 0.03 |
| Level 2 | 58 | 35.15 | 13.67 | 269 | 50.73 | 5.95 | 0.04 |
| Category 1 (Level 1 + Level 2) | 176 | 98.22 | 2.54 | 517 | 96.94 | 2.69 | 0.444 |
| Category 2 (Level 3) | 3 | 1.78 | 2.54 | 20 | 3.31 | 2.67 | 0.356 |
| Studies per year (mean) | 17.9 | | 6.98 | 59.6 | | 16.41 | 0.001 |

*Independent-samples t-test (95% CI).

Based on Miller’s framework for clinical assessment (13), 415 studies were classified as “written, oral or computer-based assessment” and 301 studies as “performance or hands-on assessment”. The first decade (2001-2010) included 179 studies. Of these, 104 (58.11%) were classified as “written, oral or computer-based assessment” and 75 (41.89%) as “performance or hands-on assessment”. The second decade (2011-2019) included 537 studies. Of these, 311 (57.91%) were classified as “written, oral or computer-based assessment” and 226 (42.09%) as “performance or hands-on assessment”. Although the number of studies increased significantly in the second decade, the proportion of “written, oral or computer-based assessment” to “performance or hands-on assessment” studies did not change significantly (Table 3).
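To make this comparison concrete, the sketch below applies a Pearson chi-square test of independence to the 2×2 table of assessment type by decade, using the counts reported in this paragraph. It is written in plain Python rather than R and is not the authors' script. It omits Yates' continuity correction. R's `chisq.test` applies that correction to 2×2 tables by default (`correct = TRUE`), so its output can differ slightly.

```python
import math

def chi_square_2x2(table):
    """Pearson chi-square test of independence for a 2x2 table (df = 1),
    without Yates' continuity correction. Returns (statistic, p_value)."""
    (a, b), (c, d) = table
    n = a + b + c + d
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected
    # For df = 1, the upper-tail probability of the chi-square distribution
    # equals erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value

# Rows: decade I (2001-2010), decade II (2011-2019)
# Columns: written/oral/computer-based, performance/hands-on
miller_counts = [[104, 75], [311, 226]]
chi2, p = chi_square_2x2(miller_counts)
print(f"chi2 = {chi2:.4f}, p = {p:.3f}")
```

With these counts, the observed values differ from the expected ones by only 0.25 in each cell. The statistic is therefore close to zero (about 0.002), and p is far above 0.05, consistent with the unchanged proportion between the two groups.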

DISCUSSION

There has been an unquestionable surge of assessment research in medical education, but this increase in the number of studies does not necessarily correspond to an equivalent educational impact. Adult learning captivated medical educators in the second half of the 20th century; similarly, in the 21st century, assessment papers account for almost half of the most cited articles (18). Recent trends in medical education give assessment a prominent role, such as outcome-based curricula (19) and the assessment of entrustable professional activities (20). Given the growing interest in these trends, the number of assessment studies can only be expected to keep increasing. In contrast with this growing popularity, validity research adopting a unified view, one that recognizes the consequences of a testing policy, remains scarce. This search showed that a fragmented view of validity still prevails in most study designs. Almost all studies (96.7%) presented a fragmented concept of validity. Half of all articles (50.83%) were limited to the isolated presentation of results from the validation process. These articles left aside the most important aspect of validity: the interpretation of scores and their subsequent use (1). It is also noteworthy that the impressive escalation in the number of assessment studies documented in this work was not matched by an equivalent increase in studies with a unified view of validity. This reveals a renewed interest in assessment, but with the same old fragmented view of validity.

The biological and quantitative heritage of the biomedical sciences strongly influences medical education and contributes to a mechanistic view of assessment, which is frequently described as a tool or instrument. However, assessment should be viewed in a much broader sense than measurement. Royal conducted a general PubMed search covering a five-year period and found the term “reliable instrument” over two thousand times (21). This mechanistic approach is supported by the misconception that learning is linear and independent of context. It also assumes that learning can always be objectively estimated by reliable instruments used by trained assessors (22). Yet reliability, although necessary, is never sufficient for a valid argument supporting an educational decision (23). The medical education literature seems to treat validity as a property of a test when, in fact, validity concerns the meaning of test scores and, especially, their use (4,11,24).

In the mid-1990s, Bloom pointed out an excessive focus on curriculum reform that paid no attention to the “learning environment”, leading to an era of “reform without change” (29). In the same way, excessive experimentation with different assessment methods, without much thought for their educational consequences, may discredit many instruments and render them obsolete. This could inaugurate an era of “useless tests” (24). Assessment is widely viewed as a box of different and disconnected tools, and the validation process of these instruments is often not distinguished from the real meaning of validity. Together, these two problems make medical educators forget that a medical school is a social environment. Its peculiarities can cause even the most reliable test to be poorly received if local idiosyncrasies are not taken into account. One simply cannot make a judgement based on a single measurement. Medical schools must therefore develop a more comprehensive view of assessment, not as a tool but as a program effectively integrated into the curriculum (14).

“From prediction to explanation”

In a considerable number of the studies selected in this search, researchers were clearly satisfied with the consistency of their test scores alone, without any further analysis. The appeal of reliability over validity is easy to understand in a context where the scientific aspects of medical education cast a long shadow over humanistic values (25). Even Cronbach, one of the champions of validity, extended reliability theory into generalizability theory (26), one of his many important contributions. Similarly, for medical teachers with a strong biomedical background, generalization based solely on the consistency of scores encourages the replication of experiments. These replications proceed without much reflection on current developments in learning theory or on the ethical and social impacts of assessment (27).

Several developments point to the limitations of a statistics-based approach to assessing learning in the medical profession: early exposure of learners to practice (28), the growing importance of work-based assessment (29) and the complexity of health care. Together, they herald the dawn of a post-psychometric age (30). A “shift from numbers to words” is necessary (22). It is time for medical teachers to look for the intrinsic value of a test, based not only on the consistency of its scores but also on the utility and consequences of its use. In this sense, educators recognize that learning cannot be assessed solely on the basis of test scores (31,32). Qualitative assessment can overcome many limitations of numerical values (33,34), contributing to the validity argument. This bibliometric analysis showed that the growing interest in performance assessment has not been reflected in a larger proportion of performance assessment studies. An increasing number of medical schools are investing in skills labs and high-fidelity simulation (35). It is therefore urgent to expand the view of validity to a qualitative and ecological dimension, in which behavior observed in the laboratory can be generalized to the workplace (36,37).

The medical education literature is slowly beginning to shift its attention to a unified view of validity (38,39), but the prevalent understanding is still fragmented. Validity is frequently confused with the validation process, and empirical analysis and scores are prioritized to the detriment of the social dimension of a test (40,41).

CONCLUSION

Validity is “the most important criterion” for a test (42), but it is frequently underestimated and placed on a par with subordinate criteria. This systematic search showed that assessment studies in medical education are still far from a unified view of validity. They are also not committed to an extensive analysis of all possible consequences of a testing policy, especially its social and educational impacts. This restrictive view can waste valuable time and resources on assessment methods that have no significant educational consequence. Future studies should prioritize assessment research specifically tailored to the needs of the school and integrated into the educational program. Such research would shift the focus from a culture of replicating assessment methods to one of social evaluation, in which aspects such as utility and educational impact are the primary goals.