INTRODUCTION

Medical residency is specialization par excellence in physician training, and the program is responsible for ensuring that recently graduated doctors reach the desired level of competence1. Because it is in-service training, assessment in this context goes beyond cognitive evaluation and constitutes a daily challenge for teachers and preceptors.

An effective evaluation process requires, besides teacher training, a system that combines several types of evaluation so as to cover all elements of learning (knowledge, skills and attitudes) and to ensure the validity and reliability of the methods used2.

Given the complexity of the teacher’s role in medical education, the development and implementation of teacher development programs (activities that seek to improve the knowledge and skills of health professionals as teachers) should be considered a permanent process3,4. However, according to Steinert4, despite growing interest in the subject in recent years, few studies have focused specifically on teacher development for student evaluation.

The setting of this research, our daily practice as teachers and preceptors in pediatrics, is the Hospital Universitário Professor Alberto Antunes (Hupaa) of the Universidade Federal de Alagoas (Ufal), which has a medical residency program (MRP) in pediatrics that was implemented 30 years ago and has maintained all the credentials required to remain in operation to the present day. The program lasts three years and offers five vacancies annually. From 2015 to the present, the occupancy rate of the pediatric residency was 93.3% (28/30), and 100% of residents completed their training (18/18)5.

However, the evaluation of the program’s residents still follows a traditional, summative approach, even though the National Medical Residency Commission (CNRM, Comissão Nacional de Residência Médica) has defined guidelines for evaluating resident doctors since CNRM Resolution N. 05 of November 12, 1979 (since revoked)6. These guidelines were reiterated in CNRM Resolution N. 02 of May 17, 2006, whose article 13 reads as follows7:

[...] Art. 13. In the periodic evaluation of the resident physician, the modalities of written, oral, practical or performance tests by an attitude scale will be used, which include attributes such as: ethical behavior, relationship with the health team and with the patient, interest in activities and others at the discretion of the institution’s COREME. §1º. The minimum frequency of evaluations will be every three months. §2º. At the institution’s discretion, a monograph and/or presentation or publication of a scientific article may be required at the end of the training. §3º. The criteria and the results of each evaluation must be made known to the resident doctor.

Regarding evaluation, the conceptual model proposed by Miller, known for several decades as Miller’s pyramid, showed teachers that, in professional development, evaluation cannot be restricted to theoretical knowledge: students must also know how to apply this knowledge, demonstrate it in practice in simulated environments and, finally, apply it in real life8.

Miller’s pyramid aligns its levels with educational objectives and with evaluation methods suited to the types of skills and competences to be assessed, rising from the theoretical knowledge at the base (“to know” and “to know how”) to “to show how” and “to perform”. The apex of the pyramid corresponds to the evaluation of professionals in their work environment9.

Based on these dimensions and on the resident doctor’s stage of learning, the observations made by teachers and preceptors should address not only cognitive aspects but also performance, including clinical and psychomotor skills, interaction with the patient, information management, the capacity for judgment, synthesis and decision-making, and the maintenance of ethical attitudes1.

Most clinical skills evaluation methods are based on direct observation of the resident’s performance of clinical tasks in a real or simulated environment. Such methods should allow for feedback, preferably immediate (formative) feedback, which consists of describing and discussing with residents their performance in a given activity10.

A well-designed, periodic evaluation system with continuous feedback is an effective tool for improving the performance of future specialists and guaranteeing their qualification, a goal of indisputable importance in the training process2. To that end, resident evaluation must be systematized and institutionalized with respect to how it is carried out, and teachers must be trained in this important aspect of the teaching-learning process.

This research set out to answer the following question: how are residents being evaluated regarding the skills acquired in the pediatric medical residency program at Hospital Universitário Professor Alberto Antunes? Its objective was therefore to analyze the system used to evaluate pediatric resident doctors at a university hospital, with a view to promoting teacher training in evaluation methods.

METHODOLOGICAL TRAJECTORY

An educational action research study was designed to identify gaps in pedagogical practice in the resident evaluation process and to bring about changes in educational habits, given its potential as an investigative praxis. It used an intentional sample of teachers and preceptors of the pediatric residency program described above.

In the educational field, action research (research-teaching) consists of investigating one’s own practice; it requires participants’ awareness and allows them to be involved in all phases of the methodological trajectory11,12.

In education, it allows the teacher-researcher to identify a problem in their pedagogical activity and, through research, create the conditions to transform it, favoring the personal and professional growth of both the researchers and the participants involved11,12.

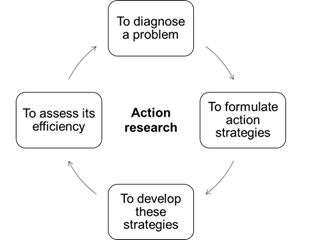

Action research follows a cycle in which practice is improved by moving between acting in the field of the problem and investigating it. The cycle includes identifying the problem and producing data on the effects of a change in practice, before and after the intervention is implemented, using pre- and post-intervention methods to monitor its effects13 (Figure 1).

According to Malheiros14, this methodology is very useful in education because it allows the study of changes in curricula, teaching-learning models and evaluation methods, among other aspects.

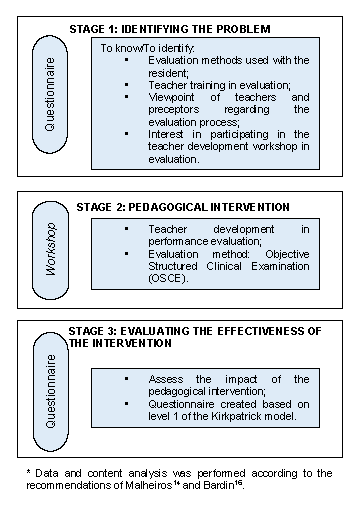

Different data collection procedures were used during the research, divided into three stages in accordance with the action research methodology, in order to understand the reality of resident physician evaluation and to propose strategies for improving it.

In stage 1 of the action research cycle, from January 2018 to April 2019, all teachers and preceptors working in the pediatric medical residency at Hupaa were invited to participate in the research, a total of 22 individuals (16 teachers and 6 preceptors). Of these 22, 16 were directly linked to the University Hospital and 6 to other health services (Hospital Geral do Estado Dr. Oswaldo Brandão Vilela and Hospital Escola Dr. Hélvio Auto), where the Hupaa pediatric residents work in the urgency/emergency and infectious disease sectors, respectively. In this phase, one teacher who was also a researcher was excluded from the study, and the others agreed to take part in the discussion and answer the questionnaire.

A first semi-structured questionnaire was administered to identify deficiencies in the residents’ evaluation process. It covered the participants’ sociodemographic data; their training in assessment; the evaluation methods (cognitive tests or clinical skills assessment) they used with resident physicians; their factual knowledge of performance evaluation methods in real and simulated environments; their views on the evaluation process; and their intention to participate in a teacher development workshop on an evaluation method.

Answers to the open questions were organized according to the ideas that emerged from the guiding questions. During pre-analysis, the material was read repeatedly, and categories that had not been defined in advance were allowed to emerge. A matrix was created in which all statements were transcribed in full. Participants were coded with a letter, T (teacher) or P (preceptor), followed by a number reflecting the order in which the questionnaires were analyzed.

Matrices were built to record the explicit or implicit ideas, the categories created and the recording units linking statements to each topic, so that the text could explain how each result was reached. The focal points and recording units were then interpreted, and a synthesis was developed for each focus14.

Simple descriptive statistics were used for the objective data, and content analysis, following the recommendations of Malheiros14 and Bardin15, was used for the qualitative data.

Stage 2, an action arising from the search for a solution to the diagnosed problem, led to the planning of a pedagogical intervention. According to Malheiros14, in this model of action research, an intervention is made in a given reality so that its results can subsequently be evaluated.

The intervention was planned after the questionnaire responses had been analyzed, and a training workshop on clinical skills assessment was then developed for teachers and preceptors, focusing on the evaluation method chosen by the participants.

To maintain the integrative strategy, the training dates were set by consensus among all those involved (teachers, preceptors, actors and pediatric monitors). The entire workshop was recorded in photographs and video, with the participants’ consent.

Stage 3 of the cycle, three weeks after the workshop, consisted of evaluating the pedagogical intervention through a semi-structured questionnaire sent by e-mail and based on the Kirkpatrick model, an internationally recognized model for evaluating educational actions aimed at professionals. The model comprises four levels of training evaluation: (1) reaction, (2) learning, (3) behavior (transfer) and (4) results16.

The questionnaire was based on level 1 of the Kirkpatrick model, which evaluates participants’ reaction to the training itself and to the learning experience. It asked about the program content, whether expectations were met, infrastructure and logistics (facilities and equipment), the duration and organization of the training, the quality and content of the teaching material, the structure of the scenarios, the speakers (didactics, communication, interaction and knowledge) and the methodology used. It also included open questions about the participants’ motivation to attend the workshop and their willingness to use the OSCE method in their teaching practice with resident physicians.

The trajectories followed in the action research cycle, with the research strategies and data analysis techniques used, are shown in the flowchart in Figure 2.

The project was approved by the Research Ethics Committee (REC) of Universidade Federal de Alagoas, under Opinion n. 2,304,092 (CAAE: 74854717.0.0000.5013), with no conflicts of interest.

RESULTS AND DISCUSSION

Stage 1 - Situational diagnosis

At this stage, all teachers and preceptors who worked in the pediatric medical residency and met the inclusion criteria took part in the diagnosis of the problem, a total of 21 individuals (15 teachers and 6 preceptors). Of these, 76% (16/21) were women, aged 29 to 62 years (mean 46.5 years), with time since graduation of 7 to 38 years (mean 22.3 years) and teaching experience of 1 to 37 years (mean 13.6 years). Regarding academic degrees, 23.8% (5/21) held a doctorate and 33.3% (7/21) a master’s degree.

Of the 21 participants, 10 (48%) reported having no formal training in evaluation and using traditional assessment methods based on their own experience in the service environment. This group included all the preceptors, which is explained by the fact that no pedagogical training is required to work as a preceptor17, even though preceptors are responsible for teaching, supervising daily practice and evaluating physicians in training. In addition to sound knowledge of their field, preceptors need teacher development activities and ongoing institutional support to improve their teaching skills18.

As for evaluation methods, 81% (17/21) of participants reported using more than one for summative purposes, to obtain a more comprehensive and reliable evaluation. According to Norcini et al.19, a good evaluation framework consists of an organized combination of methods forming an evaluation system; however, none of the teachers or preceptors used a systematized evaluation of the resident physician’s clinical, psychomotor or affective skills, nor did they provide feedback. Thus, using a variety of evaluation methods does not by itself guarantee that weaknesses are absent20.

The statements of teachers and preceptors reveal a collective concern about the inadequacy of the evaluation methods:

Great research. I was always bothered by the evaluation without resources. I believe that there are risks of injustice. (T2)

I recognize how poor the traditional methods of assessment are. Perhaps due to accommodation and resistance to changes, we have not yet managed to leave this comfort zone. Open to new experiences. (T6)

This corroborates the findings of Zimmerman et al.20, in which teachers reported difficulty in evaluating and designing tests because of a lack of theoretical grounding and a lack of standardization in the medical course.

Performance evaluation is important at this level of training because it can verify clinical skills (communication, physical examination and procedures), and continuous, formative evaluation allows failures to be corrected and reduces the likelihood of errors.

In a retrospective cohort study, Ross et al.21 analyzed the performance and progression of resident physicians assessed by a traditional summative evaluation system compared with a competency-based system. They showed that the competency-based approach was effective in addressing residents with difficulties, with a focus on correcting their deficiencies. The traditional methods identified the residents’ problems but were not effective in correcting the existing gaps, perhaps because the evaluations were disconnected from daily observation.

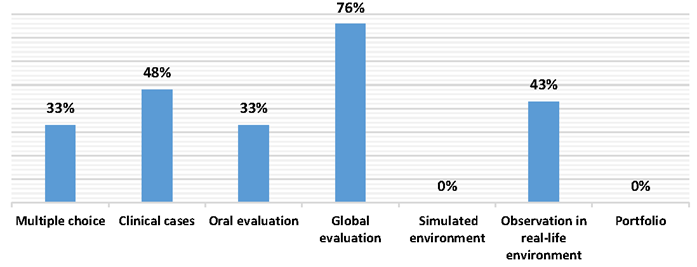

In this study, the most frequently used method was the global evaluation, reported by 76.2% (16/21) of participants, followed by observation of the student in a real environment, used by 42.9% (9/21). As for evaluation in a simulated environment, two participants (9.5%) reported having had some training in this method, although they did not use it with residents. The other instruments mentioned were cognitive evaluation methods, such as clinical case discussion (10/21, 47.6%), multiple-choice tests (7/21, 33.3%) and oral evaluation (7/21, 33.3%) (Chart 1).

Chart 1 Distribution of the evaluation methods used by teachers and preceptors of the pediatric medical residency at the UFAL University Hospital, 2018.

The global evaluation consists of a scale that assesses knowledge, punctuality and attitude. Consistent with the findings of this study, the literature indicates that in the United States, for instance, the global evaluation is the instrument most frequently used across postgraduate programs to evaluate skills22. However, authors have identified two major limitations of the global evaluation. The more important is that a physician with a deficiency in a certain area can achieve a satisfactory global rating by performing well in another competence. The other is that the instrument provides little or no information for constructive feedback, an important component in the development of resident physicians23.

To make the global rating valid, the proposed approach is to construct a matrix of specific items reflecting the combination of attributes necessary for good professional performance, and to have each resident evaluated by several teachers23.

Observing the student in a real environment is a common evaluation method: it is easy to implement, favors immediate feedback and is widely accepted by participants2. However, without systematization, a structured evaluation form (checklist) or feedback, its reliability is low.

Evaluation in a simulated environment allows the use of clinical tasks that represent common situations in medical practice, at a level of difficulty compatible with the stage of training, and ensures that all students are evaluated under similar conditions, with careful observation and a checklist2. However, none of the participants used this method with residents, although two of them were familiar with it. This may reflect the absence of an established evaluation process, the lack of interaction among participants and the difficulty of implementing the method, which requires teamwork, considerable time and more costly logistics (scenarios, actors, evaluation forms).

Using evaluation methods for clinical skills, whether in real or simulated environments, is not easy: it involves choosing the most appropriate method, one that is valid, reliable, feasible and acceptable to everyone involved in the process2. It also requires teacher training in evaluation, teamwork, participation in action research and other strategies, as well as standardization and systematization. Some participants spoke positively about the research and the need to standardize evaluation:

I hope that after this research and the obtained results, there is a standardization of the evaluation methods used in FAMED. It is very important to standardize the assessments. (T1)

(I hope) It becomes a project, registered with the teaching management, including preceptors from all clinics. (T4)

In this regard, 90% of the participants mentioned the need for training in evaluation methods and expressed interest in training on a specific evaluation method in a simulated environment, as in the statement by P2: “I am interested in teaching trainings”. Another participant pointed to the need for a performance evaluation method such as the OSCE: “Include OSCE as an integrated assessment during the pediatric internship in the 9th and 10th semesters (at least 1x/semester)” (T5).

Improving the evaluation skills of teachers and preceptors ensures the quality of evaluation and of the teaching-learning process, since evaluation allows educational planning to be reviewed and teaching practice to be adjusted20.

One statement, by T3, refers to the need for feedback: “Continuous assessment, in daily life, is essential for the learning process, always with feedback for its strengthening”. Here the teacher sums up the entire process of evaluation with a formative purpose.

Feedback is the substrate of formative assessment and an effective tool for improving student performance, especially when given immediately after the clinical task10. It should take place dialogically, with students playing an active role in evaluating their own performance24.

In medical education, feedback is as essential for educators, as a means of promoting learning, as it is for students, since it provides information about their work and its quality with a view to improvement25.

The results showed that concern about the methods used to assess residents was shared collectively by the participants, which can be considered the first step toward institutionalizing changes in the teaching-learning setting.

Stage 2 - Workshop: integrative and interactive dynamics

After the problem was identified, namely the lack of systematization, institutionalization and teacher training in evaluation methods for pediatric residents, an intervention was planned and implemented as a teacher development strategy in evaluation. Most participants chose training in the Objective Structured Clinical Examination (OSCE).

The OSCE is an assessment tool used in a simulated environment and corresponds to the “show how” level of Miller’s pyramid. It is a widely used and well-regarded evaluation method in many parts of the world and, when well prepared, provides important information about the future professional’s performance.

Nine research participants (43%) attended the workshop with simulated activity, 7 teachers and 2 preceptors, just under half of the total sample, despite the previously agreed schedule. Observers and guests also attended, including pediatric residency managers, resident physicians and monitors of the pediatrics course. The activity was held at the School of Medicine of Universidade Federal de Alagoas, next to Hospital Universitário Professor Alberto Antunes. As Steinert4 describes, proximity to the workplace is one of the factors that increase educators’ participation, motivation and access. Of the participants present, 8 were linked to Hupaa; only one of the six participants linked to the other institutions attended. Those who were absent gave reasons for not attending.

The workshop consisted of two sequential parts: in the first, held in the classroom, the researchers presented general information on evaluation and on the OSCE method; the second was devoted to practice and took place in the tutoring rooms of the School of Medicine, with actors and manikins from the Skills Laboratory.

In this part, the research participants rotated through the different scenarios in groups of three or four. In an OSCE it is normally the student being assessed who rotates through the stations; on this occasion, however, the objective was not to evaluate residents but to give the participating teachers and preceptors experience with different scenarios, evaluation forms and feedback.

At the end of the circuit, the participants returned to the classroom, where the OSCE phases they had gone through were reviewed using photographs taken during the workshop.

Stage 3 - Evaluation of the impact of the workshop

The Kirkpatrick model was used in this stage to assess the outcome of the OSCE workshop. Level 1 (reaction), used to evaluate the training, measures participants’ impressions of the content, instructors, materials and resources, environment and facilities. According to Kirkpatrick, all programs should be evaluated at this level so that they can be improved. A positive reaction does not necessarily guarantee learning, but a negative reaction and dissatisfaction certainly reduce the chances of learning26,27. In this respect, responses to the 12 evaluation items were predominantly positive, with 10 items rated good or excellent.

Regarding the item on applying the evaluation method covered in the training (OSCE), all participants were in favor of using it in their teaching practice with resident physicians and with undergraduate students. This is consistent with their comments on what motivated them to attend the workshop, which refer essentially to teacher development in more innovative evaluation methods:

New learning. (T1)

Evaluation of students. (T3)

Improve the evaluation process. (T6)

Improve my performance with residents and students. (P1)

The activity also included students from the professional master’s program in health teaching, enrolled in the course on evaluation in teaching, who learned by observing the method being performed in real time.

A positive team action, once conceived and carried out, generates a new action, in keeping with the cycles of educational action research. Notably, the training reached Kirkpatrick level 3 (behavior), since the participants went on to use the evaluation method in their daily practice, this time with interns. This activity followed the same structure as the workshop, which is consistent with Steinert’s4 view that bringing teacher development activities into the workplace increases participation, motivation and access and that, combined with individual engagement, a sequence of activities and ongoing guidance of participants, it makes training more effective.

Through a butterfly effect28, the intervention (workshop) within this educational action research, and the evaluation method itself, also created a teaching-learning environment for students at other levels of education. The butterfly effect is a scientific metaphor, described by Edward Lorenz in 1972, for the way a small change at the beginning of an event can have far-reaching consequences28. It is hoped that this effect will have positive future consequences and unfold into new interventions in evaluation methods at the institution, across all undergraduate and graduate levels. It is in education, and in the reflections it prompts, that the transformative potential of change lies.

The limitations of the study include the difficulty of bringing together all the participants involved (teachers, preceptors and actors), given the need to reconcile schedules, venues and work commitments, and the focus on a single evaluation method owing to organizational logistics and practicality.

FINAL CONSIDERATIONS

The action research carried out with the teachers and preceptors of the medical residency program made it possible, above all, to identify limitations in the evaluation and feedback system for pediatric resident physicians, such as the inadequacy of the methods used, as well as the perception that teachers and preceptors should be more aligned with formative assessment and feedback. Such evaluation should cover all elements of in-service teaching and learning, such as clinical skills, attitude, ethics, clinical reasoning and professionalism, thus allowing any shortcomings of the physician in training to be identified and corrected.

The methodology used in the research had a unifying effect and helped develop a collaborative and integrative spirit within the group, awakening, by giving participants an active role, an awareness of the need for teacher training. As a result, some positive changes were observed in the participants (teachers and preceptors) regarding assessment at other levels of teaching and learning (undergraduate course and internship).

Although the intervention (workshop) prompted reflection on the inadequacy of the evaluation process, it was not sufficient to bring about short-term improvement in the evaluation of pediatric medical residents.

A well-designed evaluation process requires time, periodicity, planning and organization. Lasting change requires more than continuing teacher training in teaching and learning: it also requires dedication and commitment from the educators involved (teachers and preceptors), student awareness and genuine institutional formalization.

Evaluation is a complex process that remains a challenge; however, as this research shows, creating spaces for debate and reflection can lead to meaningful measures that may advance the educational objectives set for the physician in training.
