INTRODUCTION

The development of a country is closely tied to the quality of its education (ASTAKHOVA et al., 2016). From this perspective, the continuous improvement and evaluation of education are necessary to meet social demands, measure quality, and give visibility to educational institutions in a society undergoing constant transformation. Qualifying knowledge in different areas of education is a challenge faced by Higher Education Institutions (HEIs).

In Brazil, postgraduate education plays a key role in scientific production, as it is the main path for deepening the training of researchers in different areas of knowledge, across different scenarios and an increasingly diverse student body (LEITE et al., 2020). The factors that contribute to the valorization of postgraduate education are related to researchers, research projects, funding, and the Evaluation System developed by the Coordination for the Improvement of Higher Education Personnel (CAPES), which seeks to expand and consolidate stricto sensu postgraduate education (OLIVEIRA; ALMEIDA, 2011).

For stricto sensu postgraduate courses to operate regularly in Brazil, CAPES is responsible for examining proposals for new courses and, through a quadrennial evaluation, for assessing whether Postgraduate Programs (PPGs) should continue to be offered (CAPES, 2021). This evaluation process is broad, considered essential, and recognized in several countries. In HEIs, self-assessment is a constituent part of the academic routine and of institutional evaluation. It presupposes the participation of everyone involved, has a legal, continuous, and transparent character, and offers an opportunity to develop improvements (ANGST, 2017; MYALKINA, 2019).

The evaluation methodology has been reformulated over time, which shows that the evaluation process is complex and changeable and requires critical reflection on practices and organizational objectives in order to identify advances, resistance, difficulties, and strategies that support decision-making (DIAS SOBRINHO, 2008). In the pursuit of continuous improvement, evaluation is essential, especially in educational settings, where its return is socially important because it helps raise the quality of professional education. This is directly related to the development of students’ skills and their employability (JORRE DE ST JORRE; OLIVER, 2018).

In the latest reformulation, implemented for the evaluation of the 2017-2020 quadrennium, the evaluation came to comprise three central questions: the Program, which evaluates the operation, structure, and planning of the Postgraduate Program (PPG) in relation to its characteristics and objectives; Educational Background, which analyzes the quality of the students’ education, taking into account the performance of professors and the production of knowledge associated with the program’s research and training activities; and Impact on Society, which examines the innovative character of the intellectual production, internationalization, and the impact and social relevance of the program (MEC, 2019).

There is no doubt that the evaluation of PPGs is essential, because it generates indicators both for funding agencies and for the coordinators of the PPGs themselves. Self-assessment is also part of the evaluation spectrum, but it has received little attention in academic studies, especially when it involves the faculty. What is the professors’ perception of the self-assessment parameters? How do professors perceive their own performance in the postgraduate program? These research questions remain largely unanswered and constitute the knowledge gap addressed in this paper. Accordingly, the purpose of this research is to identify the perception of the professors of an HEI regarding their participation in the PPG.

The theoretical contribution of this study is the definition of a self-assessment tool for professors, one of the dimensions of the self-assessment model suggested by CAPES. The empirical contribution is a study conducted with the faculty of an HEI, since studies on evaluation are generally conducted with students (AL-THANI et al., 2014; BROOKS et al., 2014; KUMPAS-LENK; EISENSCHMIDT; VEISPAK, 2018). This study also aims to contribute knowledge about how to carry out professor self-assessment, which is a critical part of the PPGs’ self-assessment process and one of CAPES’ evaluation criteria.

In addition, knowing and analyzing the evaluation criteria themselves can support growth in the number of postgraduate programs, or their improvement according to their specificities, which also facilitates the updating and flexibility of the system (OLIVEIRA, 2017). Moreover, a self-assessment covering all PPGs can help identify the central aspects to be addressed in institutional strategies aimed at improving the HEI’s postgraduate courses.

THEORETICAL FRAMEWORK

The term institutional evaluation refers to the evaluation of an institution’s praxis in its respective areas of practice, covering the plans and projects carried out by the institution. This process makes it possible to survey the actions developed in pursuit of better quality, and it can be carried out in two ways: (1) external evaluation, carried out by the regulatory body; or (2) internal assessment, also known as self-assessment, carried out by the members of the institution, in which all processes are analyzed systematically, through internal and external evaluations and supported by indicators, with the aim of identifying needs for improvement (OLIVEIRA, 2010; GAMA; SANTOS, 2020). In the latter, the members of the institution are not only evaluators but also part of the object of evaluation.

Thus, self-assessment is characterized by a process of self-reflection and self-analysis that highlights strengths and the challenges that still need to be addressed in order to improve the quality of academic work, formative concepts, development, and learning, since it is planned, conducted, executed, and explored by the individuals responsible for the actions being evaluated. Moreover, it enables reflection on the context and the policies applied, in addition to organizing data that inform decision-making (CAPES, 2019a). Reflective professionals take responsibility for their own professional development, and their way of understanding and improving the execution of their functions comes from reflecting on their own experience (ZEICHNER; LISTON, 2013).

Self-assessment is a way for a course or institution to know itself: evaluating what it is and reflecting on what it is expected to be, what has already been accomplished, how it is administered, what information is available for analysis and interpretation, and what the institution’s strengths and weaknesses are (ZAINKO; PINTO, 2008). It is a constant process of learning about the institutional reality in order to understand the meaning of the set of proposals developed to enrich educational quality and achieve greater social relevance, identifying potentialities and establishing strategies to overcome problems (FRANCO, 2006). Assessment must, first of all, be a search for self-knowledge (self-assessment), building an assessment culture in the HEI and allowing it to organize itself and create the conditions to face the different external assessments conducted in the institution, especially course assessment.

However, this does not mean that HEIs should simply adapt to the external evaluation model; rather, they should create a culture of self-assessment and of reflection-action-reflection, which is indispensable for the growth and progress of an HEI (POLIDORI; MARINHO-ARAUJO; BARREYRO, 2006).

The self-assessment process in higher education is defined and self-managed by the academic community (LEITE et al., 2020). Lehfeld et al. (2010) highlight the complexity, plurality, and challenges of self-assessment in university institutions, since it involves the perceptions of diverse subjects and is influenced by structural and situational elements. In this perspective, MEC (2019) emphasizes that self-assessment allows HEIs to identify more clearly and accurately the need for, and importance of, structuring postgraduate planning institutionally. This echoes the interest, already described by Watson and Maddison (2005), in self-assessment as a tool for organizational learning.

In Brazil, the assessment of postgraduate courses was implemented in 1976 under the responsibility of CAPES, through the National Postgraduate System (SNPG), in an attempt to expand and consolidate stricto sensu postgraduate education in the country. The Evaluation Directorate (DAV) guides the SNPG’s assessment, with the participation of the academic-scientific community and consultants. The Assessment System is grounded in recognition, reliability, and transparency, and it is organized into three collegiate bodies and 49 assessment areas (CAPES, 2021).

Courses in operation are assessed every four years, and the results are presented in an assessment form. The form was reformulated in 2019 around three assessment criteria: Program, Educational Background, and Impact on Society. The assessment areas are responsible for defining the indicators for each item, according to the modality and specificities of the PPGs (CAPES, 2019b).

The Program item covers the characteristics and objectives of the PPG from different perspectives, including its operation, structure, faculty profile, and the articulation between the strategic planning of the program and that of the HEI (MEC, 2019). It also considers the processes, procedures, and results of the program’s self-assessment. In this sense, it seeks to induce a participatory self-assessment process in the program; this question focuses on items related to the program that influence and contribute to intellectual production and student educational background.

The Educational Background dimension analyzes the quality of the students’ education from different perspectives, taking into account the performance of the professors and the production of knowledge associated with the program’s research and training activities. The Impact on Society dimension addresses the impact and innovative character of the program’s intellectual production, as well as its relevance to society, internationalization, and visibility (MEC, 2019).

In pursuit of a better evaluation process for PPGs, and following the global trend of reference countries, the 2019 update of the assessment form began to assign weight to the processes, procedures, and results of the program’s self-assessment, which became a relevant component of the Program question (CAPES, 2019a). Assessing, reassessing, and self-assessing scenarios, policies, and procedures requires commitment; in this sense, self-assessment as part of an organizational diagnosis needs to be built in a participatory setting (BUSCO; DOONER; D’ALENCON, 2018).

De Oliveira (2017) considers it the professor’s responsibility to define diversified educational strategies committed to effective learning. Professors’ participation in self-assessment tools is significant, as it allows the processes that influence learning to be evaluated, validated, and, if necessary, corrected. From this perspective, professors have great responsibility within the structure of postgraduate courses, and their assessment is crucial for understanding how the programs function and for enabling continuous work to improve academic training in the programs (COSTA et al., 2020).

Taking these aspects into consideration, CAPES (2021) adds that postgraduate assessment is essential to ensure and maintain the quality of PPGs and their foundations, directing resources to foster research. According to Carneiro and Bin (2019), the evaluation of a PPG constitutes, among other things, an analysis of the public policies developed for education, which makes it possible to examine the “unfolding” of an entire cycle of the implemented public policy. Moreover, the results of self-assessment can become a space for reflection on the management and direction of PPGs, which is crucial for enhancing their results and quality.

METHOD

The research uses a quantitative, descriptive approach. The research strategy was a survey of a target population of 1,916 professors of postgraduate courses at the Federal University of Santa Maria (UFSM). The questionnaires were administered online between December 2020 and January 2021 and sent to the professors by e-mail through the institution’s Data Processing Center (CPD); 221 valid answers were obtained. The study was approved by the UFSM Ethics Committee (CAAE: 40529120.0.0000.5346).

A questionnaire with 81 questions, organized in blocks, was used as the data collection instrument. The first block characterized the respondents’ profile and gathered general information, with questions about gender, age, marital status, employment, and length of service in the PPG(s). The rest of the questionnaire was divided into nine blocks, as shown in Table 1. The answers to these blocks were measured on a 5-point Likert-type scale (1 - I strongly disagree; 5 - I strongly agree). For block 02 (Infrastructure of the PPGs), a 5-point scale was also used, but one measuring adequacy (1 - Not at all adequate; 5 - Totally adequate).

Table 1 Description of the data collection tool

| Blocks of the questionnaire | Number of questions | References |
|---|---|---|
| 01 - Respondent profile | 10 | Prepared by the authors. |
| 02 - Infrastructure of the PPGs | 11 | Tetteh (2019); Soares (2018). |
| 03 - Performance of students | 9 | Vitória et al. (2014); Soares (2018). |
| 04 - Rapport with students | 17 | Silva and Vieira (2015). |
| 05 - Support from the secretariat | 10 | Soares (2018); Tetteh (2019). |
| 06 - Performance of the course coordination | 7 | Soares (2018); Vitória et al. (2014). |
| 07 - International performance | 9 | Prepared by the authors.* |
| 08 - Adherence to the research line | 2 | Prepared by the authors.* |
| 09 - Teaching activities | 3 | Prepared by the authors.* |
| 10 - Contribution to the course | 3 | Prepared by the authors.* |

Source: Prepared by the authors.

*Based on the assessment guidelines suggested by CAPES.

The collected data were analyzed using SPSS 23.0 (Statistical Package for the Social Sciences). Descriptive statistics, factor analysis, and regression were used as analysis techniques. Descriptive statistics were used to describe the respondents’ profile and to identify their perceptions and behavior regarding each of the factors assessed.

Next, exploratory factor analyses were performed to analyze the structure of the interrelationships (covariance) among the variables and define common factors (HAIR et al., 2014). Two tests were used to verify the factorability of the data. The first was the Kaiser-Meyer-Olkin (KMO) measure of sampling adequacy, which compares the observed correlations between variables with their partial correlations; the closer the value is to 1, the more suitable the data are for factor analysis. The second was Bartlett’s test of sphericity, which, according to Fávero et al. (2009), assesses whether the correlations between the variables are statistically significant, that is, it tests the null hypothesis that the correlation matrix is an identity matrix. Communality is the proportion of each variable’s variance explained by the extracted factors (FIELD, 2009); an extracted communality of 0.5 or greater was used as the criterion for retaining an item. The varimax rotation method was applied because it seeks to minimize the number of variables with high loadings on each factor, which facilitates the interpretation of the factors (FÁVERO et al., 2009).
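The two factorability checks described above can be illustrated with a short NumPy sketch. It is not the SPSS routine used in the study: it computes the overall KMO from the correlation matrix and the partial (anti-image) correlations, and Bartlett’s statistic as χ² = -(n - 1 - (2p + 5)/6) ln|R| with p(p - 1)/2 degrees of freedom, where n is the number of respondents and p the number of items. The function names and the simulated responses are hypothetical; if SciPy is available, the p-value is `scipy.stats.chi2.sf(chi2, df)`.

```python
import numpy as np


def kmo(data: np.ndarray) -> float:
    """Overall Kaiser-Meyer-Olkin measure for an (n_respondents, n_items) array."""
    r = np.corrcoef(data, rowvar=False)           # item correlation matrix
    r_inv = np.linalg.inv(r)
    # Partial correlations: -r_inv[i, j] / sqrt(r_inv[i, i] * r_inv[j, j])
    scale = np.sqrt(np.outer(np.diag(r_inv), np.diag(r_inv)))
    partial = -r_inv / scale
    off_diag = ~np.eye(r.shape[0], dtype=bool)    # ignore the diagonal
    r2 = np.sum(r[off_diag] ** 2)
    p2 = np.sum(partial[off_diag] ** 2)
    return float(r2 / (r2 + p2))


def bartlett_sphericity(data: np.ndarray) -> tuple[float, int]:
    """Bartlett's chi-square statistic and degrees of freedom (H0: R is the identity)."""
    n, p = data.shape
    r = np.corrcoef(data, rowvar=False)
    _, log_det = np.linalg.slogdet(r)             # ln|R|, numerically stable
    chi2 = -(n - 1 - (2 * p + 5) / 6) * log_det
    df = p * (p - 1) // 2
    return float(chi2), df


# Hypothetical data: 300 respondents, six items driven by one latent factor.
rng = np.random.default_rng(0)
latent = rng.normal(size=(300, 1))
items = latent + 0.5 * rng.normal(size=(300, 6))
print(f"KMO = {kmo(items):.3f}")
chi2, df = bartlett_sphericity(items)
print(f"Bartlett chi2 = {chi2:.1f}, df = {df}")
```

With strongly correlated items like these, KMO approaches 1 and the Bartlett statistic is large relative to its degrees of freedom; with uncorrelated items, the statistic stays close to its degrees of freedom.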

Cronbach’s Alpha was used to measure the reliability of the factors. Reliability indicates the degree of internal consistency among the multiple indicators of a factor, that is, the extent to which the same measuring instrument yields consistent results across measurements; a value of 0.7 or greater was adopted as the threshold (HAIR et al., 2014).
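Cronbach’s Alpha can be computed directly from the item variances and the variance of the total score, α = k/(k - 1) · (1 - Σσ²ᵢ/σ²ₜ), where k is the number of items. The sketch below uses only the Python standard library; the function name and the five hypothetical respondents are illustrative, not data from the study.

```python
from statistics import variance


def cronbach_alpha(responses: list[list[float]]) -> float:
    """Cronbach's alpha for a list of respondents, each a list of k item scores.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    """
    k = len(responses[0])
    if k < 2:
        raise ValueError("Cronbach's alpha needs at least two items")
    items = list(zip(*responses))                        # one tuple of scores per item
    item_var_sum = sum(variance(item) for item in items)
    total_var = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - item_var_sum / total_var)


# Hypothetical 5-point Likert answers from five respondents to a three-item factor.
answers = [
    [5, 4, 5],
    [4, 4, 4],
    [3, 2, 3],
    [5, 5, 4],
    [2, 3, 2],
]
alpha = cronbach_alpha(answers)
print(f"alpha = {alpha:.3f}, meets 0.7 threshold: {alpha >= 0.7}")
```

Sample variances are used for both numerator and denominator; because alpha depends only on their ratio, population variances would give the same result.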

Finally, a multiple linear regression analysis was performed, with the model estimated by ordinary least squares. Normality, homoscedasticity of the errors, and absence of multicollinearity among the variables were tested as model assumptions. Normality was verified using the Kolmogorov-Smirnov (KS) test; multicollinearity was assessed with the variance inflation factor (VIF), for which values between 1 and 10 are considered acceptable; and homoscedasticity was checked with the Pesaran-Pesaran test (CORRAR; DIAS FILHO; PAULO, 2009; MALHOTRA, 2019).
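A minimal NumPy sketch of two of these diagnostics follows, assuming the predictors are held in a respondents × factors array. The VIF of each predictor is 1/(1 - R²) from regressing it on the other predictors. For the Pesaran-Pesaran test, the sketch follows the formulation commonly given in Brazilian statistics textbooks (regressing the squared residuals on the squared fitted values, where a non-significant slope suggests homoscedasticity); implementations differ, for example some use standardized residuals and predicted values, so treat this as an approximation rather than the SPSS procedure. The KS normality check is not reimplemented here; `scipy.stats.kstest` is one option. All function names are hypothetical.

```python
import numpy as np


def _ols(y: np.ndarray, X: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """OLS with an intercept; returns (coefficients, fitted values)."""
    design = np.column_stack([np.ones(len(y)), X])
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    return beta, design @ beta


def vif(X: np.ndarray) -> np.ndarray:
    """VIF of each column: 1 / (1 - R^2 of that column regressed on the others)."""
    X = np.asarray(X, dtype=float)
    out = []
    for j in range(X.shape[1]):
        target = X[:, j]
        _, fitted = _ols(target, np.delete(X, j, axis=1))
        r2 = 1 - np.sum((target - fitted) ** 2) / np.sum((target - target.mean()) ** 2)
        out.append(1 / (1 - r2))
    return np.array(out)


def pesaran_pesaran_t(y: np.ndarray, X: np.ndarray) -> float:
    """t statistic of the slope when squared residuals are regressed on squared fitted values."""
    _, fitted = _ols(y, X)
    resid_sq = (y - fitted) ** 2
    Z = np.column_stack([np.ones(len(y)), fitted ** 2])
    gamma, *_ = np.linalg.lstsq(Z, resid_sq, rcond=None)
    e = resid_sq - Z @ gamma
    sigma2 = e @ e / (len(y) - 2)                  # error variance of the auxiliary regression
    se_slope = np.sqrt(sigma2 * np.linalg.inv(Z.T @ Z)[1, 1])
    return float(gamma[1] / se_slope)


# Hypothetical factor scores and outcome, for illustration only.
rng = np.random.default_rng(0)
factors = rng.normal(size=(221, 3))
y = factors @ np.array([0.3, 0.2, 0.1]) + rng.normal(scale=0.5, size=221)
print("VIF:", np.round(vif(factors), 3))
print("Pesaran-Pesaran t:", round(pesaran_pesaran_t(y, factors), 3))
```

A large absolute t for the auxiliary slope points to heteroscedasticity; VIF values near 1 indicate that a predictor shares little variance with the others.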

DATA ANALYSIS

This section is divided into three parts. In the first, the profile of the sample is presented. The second part seeks to identify the factors of the professor’s self-assessment. Finally, in the third, regression analysis is used to investigate the influence of the factors on the Professor’s Contribution to PPG.

DESCRIPTIVE ANALYSIS OF THE SAMPLE

A descriptive analysis of the percentage data obtained from the questionnaire was carried out to identify the profile of the professors who work in postgraduate programs.

Regarding gender, most respondents (52.49%) are female. Age was divided into four intervals, showing great diversity of age groups: 28.05% are up to 40 years old, 24.89% are between 41 and 49, 25.79% are between 50 and 56, and 21.27% are over 57. As for marital status, 74.66% are married or in a stable relationship. Most do not hold a coordination position (80.09%), have worked at the HEI for more than 10 years (55.66%), and have been in the PPG for more than 5 years (65.61%).

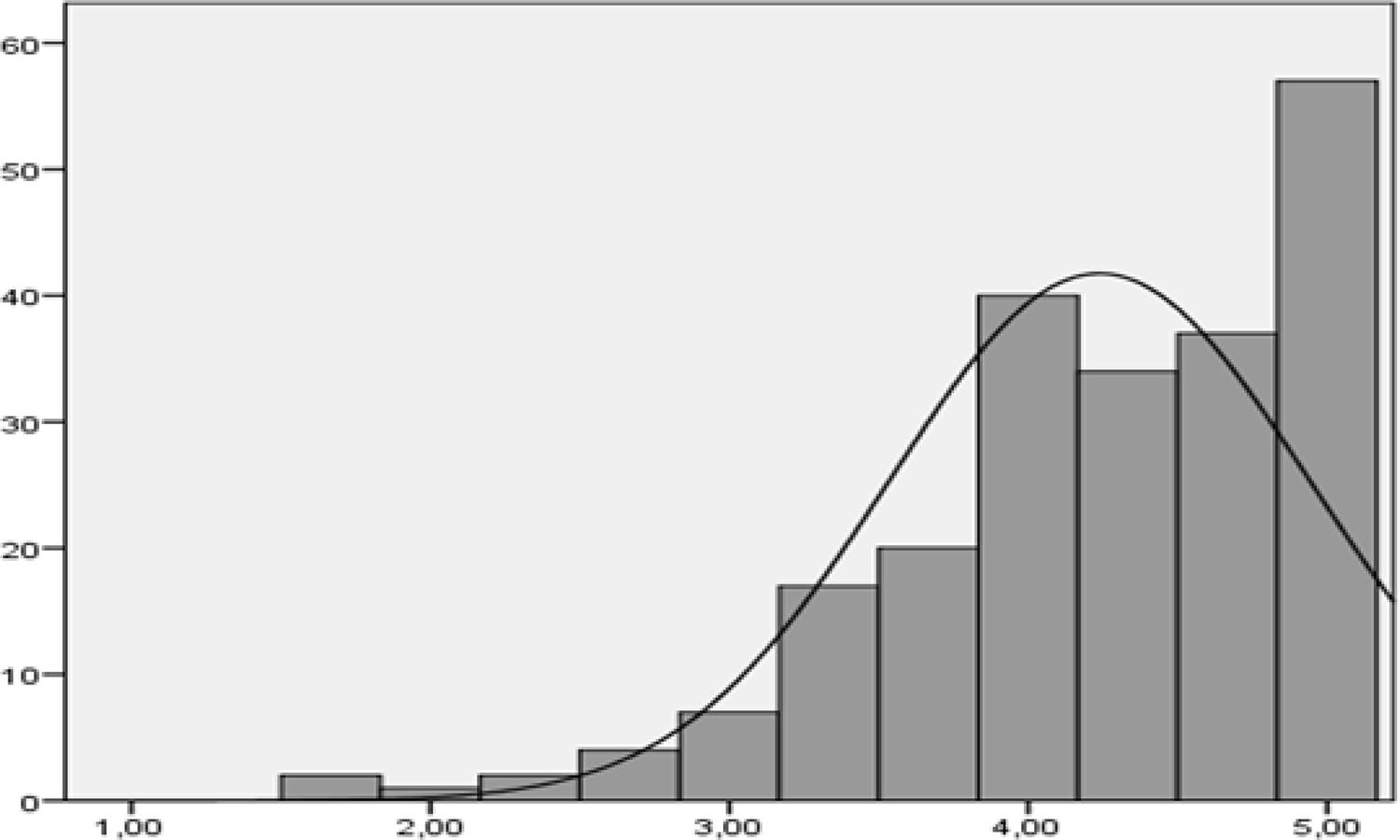

Next, we present the results for the Contribution to the Course factor. Table 2 and Figure 1 show the descriptive statistics of the three items that compose the factor.

Table 2 Descriptive statistics of the Contribution to the Course variables

| Contribution to the course (Cronbach’s Alpha: 0.771) | Mean | 1 (%) | 2 (%) | 3 (%) | 4 (%) | 5 (%) |
|---|---|---|---|---|---|---|
| I can meet the demands made by the course coordinator. | 4.39 | 0.5 | 2.3 | 7.2 | 37.6 | 52.5 |
| I believe I meet what the PPG expects of me. | 4.33 | 0.0 | 2.7 | 7.7 | 43.4 | 46.2 |
| My scientific production is higher than what is necessary for the PPG to maintain its concept. | 3.99 | 2.7 | 6.8 | 19.0 | 31.7 | 39.8 |

Source: Research data (2022).

Note: Frequencies in percent. 1 - Strongly disagree; 2 - Partially disagree; 3 - Neither agree nor disagree; 4 - Partially agree; 5 - Strongly agree.

Table 2 shows that all three variables have means close to four, which indicates that respondents partially agree with the statements. The response frequencies confirm this, since most respondents partially or strongly agree with each statement.

Figure 1 shows the result of “reducing” the three variables in Table 2 to a single variable, computed as their mean. This Contribution to the Course variable has an overall mean of 4.24, which indicates that respondents agree that they contribute to the PPG in which they work.
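The Table 2 figures can be cross-checked with a short Python sketch: each item mean should follow from its frequency distribution, and the composite is each respondent’s mean across the three items. The sketch assumes the composite is an unweighted mean and that every respondent answered all three items (the paper does not state how missing answers were handled); under that assumption, the overall mean equals the mean of the item means. The short item labels are hypothetical abbreviations.

```python
# Reported means and percent frequencies for scale points 1..5, copied from Table 2.
TABLE_2 = [
    ("Meets coordinator demands", 4.39, [0.5, 2.3, 7.2, 37.6, 52.5]),
    ("Meets PPG expectations", 4.33, [0.0, 2.7, 7.7, 43.4, 46.2]),
    ("Production above PPG requirement", 3.99, [2.7, 6.8, 19.0, 31.7, 39.8]),
]


def mean_from_percentages(percentages: list[float]) -> float:
    """Weighted mean of scale points 1..5.

    Divides by the row total because rounded percentages may not sum to exactly 100.
    """
    total = sum(percentages)
    return sum(point * pct for point, pct in enumerate(percentages, start=1)) / total


def composite_scores(responses: list[list[float]]) -> list[float]:
    """Per-respondent factor score: the mean of that respondent's item answers."""
    return [sum(row) / len(row) for row in responses]


for label, reported, pcts in TABLE_2:
    print(f"{label}: reported {reported:.2f}, recomputed {mean_from_percentages(pcts):.2f}")

# With complete responses, the mean of per-respondent composites equals the mean of item means.
overall = sum(reported for _, reported, _ in TABLE_2) / len(TABLE_2)
print(f"overall mean = {overall:.2f}")
```

Because the reported item means are already rounded, the overall mean recovered this way is itself approximate to the second decimal place.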

FACTOR ANALYSIS

Based on the questions that capture the self-assessment of the HEI’s professors, the items were grouped into dimensions that describe their respective characteristics. Because two different five-point scales were used, the factors are presented in two tables: Table 3 contains the questions measured by level of adequacy, and Table 4 those measured by degree of agreement.

Table 3 Composition of the professor self-assessment factors - Block 02

| Variables | Factor loading | Factor mean | Explained variance (%) |
|---|---|---|---|
| Factor 1 - HEI facilities (Cronbach’s Alpha: 0.872) | | 3.25 | 54.95 |
| The infrastructure used by the program. | 0.849 | | |
| The available equipment. | 0.815 | | |
| The laboratories available. | 0.785 | | |
| The infrastructure of the campus. | 0.738 | | |
| The infrastructure available at the Learning Centers (or off-campus campuses) to hold conferences and events. | 0.700 | | |
| Factor 2 - Internet access (Cronbach’s Alpha: 0.847) | | 2.93 | 15.64 |
| Access to the internet in the common areas of the university. | 0.882 | | |
| Internet access in the classrooms used by the program. | 0.879 | | |
| Internet access in my workroom. | 0.757 | | |

Source: Research data (2022).

Table 4 Composition of the Professor Self-Assessment factors - Blocks 03-10

| Variables | Factor loading | Factor mean | Explained variance (%) |
|---|---|---|---|
| Factor 3 - Rapport with students (Cronbach’s Alpha: 0.962) | | 4.28 | 29.86 |
| My students are hardworking. | 0.862 | | |
| My students are good apprentice-researchers. | 0.835 | | |
| My students try hard to do good work. | 0.827 | | |
| My students try to read the material that I give them. | 0.823 | | |
| My students are studious. | 0.798 | | |
| My students make an effort to master the knowledge related to the subject of the dissertation/thesis. | 0.784 | | |
| My students discuss their research with me regularly. | 0.781 | | |
| My students make an effort to master the methodological procedures necessary to develop the dissertation/thesis. | 0.767 | | |
| My students respond quickly to the requests I send them. | 0.762 | | |
| My students make an effort to search for relevant literature for their work. | 0.759 | | |
| My students do what I guide them to do. | 0.754 | | |
| My students are good researchers. | 0.739 | | |
| My students have a good relationship with me. | 0.666 | | |
| My students are easily accessible. | 0.664 | | |
| My students are interested in participating in events. | 0.641 | | |
| My students actively participate in the activities of my research group. | 0.595 | | |
| My students help me publish papers in national journals. | 0.584 | | |
| Factor 4 - Secretariat support (Cronbach’s Alpha: 0.966) | | 4.66 | 11.38 |
| The PPG secretariat is always willing to help. | 0.899 | | |
| The PPG secretariat is accessible during office hours. | 0.898 | | |
| The PPG secretariat answers my questions. | 0.897 | | |
| The PPG secretariat helps me with my demands. | 0.876 | | |
| The PPG secretariat provides correct information. | 0.866 | | |
| The PPG secretariat keeps me informed of academic procedures. | 0.834 | | |
| The PPG secretariat knows the processes and procedures that involve the PPG. | 0.820 | | |
| The PPG secretariat is courteous. | 0.818 | | |
| The PPG secretariat provides the documents for the exams in a timely manner. | 0.811 | | |
| The PPG secretariat treats me with respect. | 0.767 | | |
| Factor 5 - Student involvement (Cronbach’s Alpha: 0.928) | | 3.76 | 7.56 |
| The course students are hardworking. | 0.802 | | |
| The course students are studious. | 0.758 | | |
| The course students participate in class discussions. | 0.729 | | |
| The course students are responsive. | 0.721 | | |
| The course students read the material for the class. | 0.718 | | |
| The course students meet deadlines. | 0.714 | | |
| The course students are productive. | 0.709 | | |
| The course students are accessible. | 0.708 | | |
| The course students do good work in the disciplines. | 0.683 | | |
| Factor 6 - Course coordination (Cronbach’s Alpha: 0.942) | | 4.30 | 7.01 |
| The course coordination considers my suggestions and opinions. | 0.873 | | |
| The course coordination is responsive to my questions and problems. | 0.863 | | |
| The course coordination cares about me. | 0.847 | | |
| The course coordination is fair to all professors. | 0.823 | | |
| The course coordination holds meetings to discuss guidelines and directions for the program. | 0.819 | | |
| The course coordination clearly shows what it expects from the professors. | 0.781 | | |
| The course coordination keeps me informed about changes promoted by agencies (PRPGP, CAPES, etc.). | 0.765 | | |
| Factor 7 - International performance (Cronbach’s Alpha: 0.848) | | 2.36 | 5.33 |
| I coordinate a research project with international funding. | 0.733 | | |
| I maintain international partnerships that effectively contribute to the internationalization of the PPG. | 0.732 | | |
| I supervise/co-supervise PPG students from other countries. | 0.715 | | |
| I have co-authored publications with international researchers. | 0.702 | | |
| I try to include international researchers in my students’ advisory boards. | 0.685 | | |
| I supervise foreign students in the PPG. | 0.594 | | |
| My international scientific production is compatible with the PPG’s internationalization goals. | 0.565 | | |
| Factor 8 - International student productions (Cronbach’s Alpha: 0.727) | | 3.32 | 3.08 |
| My students help me publish papers in international journals. | 0.704 | | |
| My students help me establish international academic contacts. | 0.582 | | |
| Factor 9 - Adherence to the research line (Cronbach’s Alpha: 0.760) | | 4.62 | 2.41 |
| The themes of the dissertations that I supervise are always aligned with the research line of the PPG in which I work. | 0.792 | | |
| My research projects are highly aligned with the research line in which I work in the PPG. | 0.743 | | |

Source: Research data (2022).

To meet the communality criterion, variables with extraction values below 0.5 were excluded: “Resources made available by UFSM libraries” (communality 0.398), “The adequacy of the infrastructure used by the program for PSN (People with Special Needs)” (communality 0.394), and “Safety measures practiced at the University” (communality 0.411). These variables were excluded one at a time, from the lowest communality to the highest, until only variables with extraction values of 0.5 or greater remained.
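The one-at-a-time elimination rule can be sketched as a loop that re-extracts the factors after each removal. Because an orthogonal rotation such as varimax leaves communalities unchanged, pruning can be done on unrotated loadings and the rotation applied at the end. The paper does not report its extraction method, so this sketch assumes principal-component extraction on the correlation matrix (with the eigenvalue-greater-than-one rule unless a number of factors is given) and a varimax rotation without the Kaiser normalization that SPSS applies by default; the function names and simulated items are hypothetical.

```python
import numpy as np


def extract_loadings(data, n_factors=None):
    """Principal-component loadings from the item correlation matrix.

    Keeps n_factors components, or those with eigenvalue > 1 (Kaiser criterion) if None.
    """
    r = np.corrcoef(data, rowvar=False)
    eigvals, eigvecs = np.linalg.eigh(r)             # eigh returns ascending eigenvalues
    order = np.argsort(eigvals)[::-1]
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    k = n_factors if n_factors is not None else int(np.sum(eigvals > 1))
    return eigvecs[:, :k] * np.sqrt(eigvals[:k])


def prune_by_communality(data, names, threshold=0.5, n_factors=None):
    """Drop the lowest-communality item one at a time, re-extracting after each drop."""
    data, names = np.asarray(data, dtype=float), list(names)
    while True:
        if data.shape[1] < 3:
            raise ValueError("too few items left to factor")
        loadings = extract_loadings(data, n_factors)
        communalities = np.sum(loadings ** 2, axis=1)
        worst = int(np.argmin(communalities))
        if communalities[worst] >= threshold:
            return names, loadings, communalities
        data = np.delete(data, worst, axis=1)
        del names[worst]


def varimax(loadings, max_iter=100, tol=1e-6):
    """Orthogonal varimax rotation (without Kaiser normalization)."""
    p, k = loadings.shape
    rotation = np.eye(k)
    criterion = 0.0
    for _ in range(max_iter):
        rotated = loadings @ rotation
        target = rotated ** 3 - rotated @ np.diag(np.sum(rotated ** 2, axis=0)) / p
        u, s, vt = np.linalg.svd(loadings.T @ target)
        rotation = u @ vt
        if criterion != 0 and np.sum(s) < criterion * (1 + tol):
            break
        criterion = np.sum(s)
    return loadings @ rotation


# Hypothetical data: two latent factors with three items each, plus one unrelated item.
rng = np.random.default_rng(1)
n = 400
latent = rng.normal(size=(n, 2))
items = np.column_stack([
    latent[:, [0, 0, 0, 1, 1, 1]] + 0.5 * rng.normal(size=(n, 6)),
    rng.normal(size=n),                                # expected to be dropped
])
names = ["a1", "a2", "a3", "b1", "b2", "b3", "unrelated"]
kept, loadings, h2 = prune_by_communality(items, names, n_factors=2)
rotated = varimax(loadings)
print(kept)
print(np.round(rotated, 2))
```

Re-extracting after every removal matters because dropping one item changes the correlation structure and therefore the communalities of the items that remain.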

The KMO (0.855) and Bartlett’s test (sig. < 0.001) indicated that factor analysis is appropriate. The two factors together explain 70.59% of the total variance and show satisfactory reliability according to Cronbach’s Alpha, with values of 0.872 and 0.847, respectively.

The HEI Facilities factor comprises five variables referring to the infrastructure resources available to the program, and the Internet Access factor comprises three variables referring to connection and Internet access in the different spaces and activities of the HEI. These factors are directly linked to what Sauerssig (2019) discusses: the physical aspects of the environment, which include all the infrastructure, resources, and services offered by the HEI and that are essential for carrying out activities.

In the second factor analysis, the KMO was 0.910 and Bartlett’s test of sphericity was significant (approximate χ² = 10,721.736; p < 0.001), indicating the factorability of the data. The resulting factors explain 68.24% of the variance and showed satisfactory reliability by Cronbach’s Alpha (except for one factor, which was removed from the analyses). The factors are presented in Table 4.

Factor 3, Rapport with Students, is composed of seventeen variables that refer to how willing students are to accept help, collaborate, and seek results together with the group and the supervisor. Alves, Espindola, and Bianchetti (2012) describe respect, availability, care, and receptivity as essential to the relationship between advisor and student, promoting harmonious and democratic dialogue between the parties.

Regarding support for professors and the organization and management of the PPGs, two factors stand out. Factor 4, Secretariat Support, is composed of ten variables that evaluate the cordiality and promptness of the secretariat and how it relates to professors and students when handling demands and processes within the PPGs. Factor 6, Course Coordination, is composed of seven variables that evaluate how much the PPG coordinators attend to issues such as problems, opinions, responsibilities, transparency, and fairness toward professors and students. Similar elements are highlighted by Goldani et al. (2010), who emphasize the need to qualify administrative processes in the PPGs and to provide support for carrying out activities. It should also be noted that course coordination plays an important role in achieving quality in teaching, fulfilling the fundamental attributions of a course, and directing efficient actions (FERREIRA; PAIVA, 2017).

Regarding the students of the courses, Factor 5, Student Involvement, is formed by nine variables that reflect students’ actions regarding studies, readings, productions, participation in events, and compliance with their academic obligations. Students should be considered partners in higher education, in a scenario where students and staff are active collaborators in teaching, research, and learning (MERCER-MAPSTONE et al., 2017; MATTHEWS et al., 2019). In this sense, Vasquez and Ruas (2012) explain that the learner’s engagement in the learning process is vital because it gives rise to new interpretations, the ability to think beyond usual activities, to listen to different opinions, and to reflect on one’s practices, which contributes to new ideas, versatile talent, and specific skills.

Factor 7, International Performance, and Factor 8, International Student Productions, cover variables related to internationalization, which is one of CAPES’ objectives (CAPES, 2019a), for the social insertion of the knowledge produced in PPGs and the impact of programs (ARAÚJO; FERNANDES, 2021). Including these factors in self-assessment is consistent with Alsharari (2019), who argues that internationalization can generate opportunities in several respects and contribute to the overall improvement of the quality of education, the diffusion of new communication technologies, and the formation of a comprehensive “workforce” around the world.

Factor 9, Adherence to the Research Line, is made up of two variables that address the alignment of the professor’s research with the PPG’s research lines. This component is directly related to the requirements evaluated by CAPES (2019a), which call for the compatibility and adequacy of proposals and individual research with the objectives of the program and its lines of research.

It is worth noting that the factor means indicate that professors consider the available infrastructure and Internet access to be moderately adequate (means of 3.25 and 2.93, respectively). For most factors in Table 4, the means are around four, indicating that professors partially agree with the proposed items. The exception is the two dimensions related to internationalization, for which the average was only 1.82 for international relations and 3.09 for productions, indicating that professors perceive themselves as unable to carry out activities that characterize international relationships and are indifferent regarding their ability to generate international productions.

DETERMINANTS OF OVERALL CONTRIBUTION TO THE COURSE

To verify how the self-assessment dimensions contribute to the perceived contribution to the course, a regression analysis was performed with Overall Contribution to the Course as the dependent variable and the self-assessment factors as independent (explanatory) variables. The model was estimated by ordinary least squares using the Enter method, in which all predictors are entered in a single step; the results are presented in Table 5.
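The Enter method simply fits all predictors in a single step. The NumPy sketch below reports each coefficient with its t statistic and the model R²; setting `standardize=True` z-scores the variables first, which yields standardized (beta) coefficients. The paper does not say whether Table 5 reports standardized or unstandardized coefficients, and the function name and simulated factor scores are hypothetical (221 rows only mirrors the sample size).

```python
import numpy as np


def ols_enter(y, X, names, standardize=False):
    """Fit y on all predictors at once, as in SPSS's Enter method.

    Returns ({name: (coefficient, t)}, R^2). With standardize=True, y and X are
    z-scored first, so the slopes are standardized (beta) coefficients.
    """
    y = np.asarray(y, dtype=float)
    X = np.asarray(X, dtype=float).reshape(len(y), -1)
    if standardize:
        y = (y - y.mean()) / y.std(ddof=1)
        X = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)
    design = np.column_stack([np.ones(len(y)), X])
    n, k = design.shape
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    resid = y - design @ beta
    sigma2 = resid @ resid / (n - k)                       # unbiased error variance
    se = np.sqrt(np.diag(sigma2 * np.linalg.inv(design.T @ design)))
    r2 = 1 - (resid @ resid) / np.sum((y - y.mean()) ** 2)
    labels = ["(constant)"] + list(names)
    return {lab: (float(b), float(b / s)) for lab, b, s in zip(labels, beta, se)}, float(r2)


# Hypothetical factor scores and contribution scores, for illustration only.
rng = np.random.default_rng(7)
factors = rng.normal(size=(221, 3))
contribution = 4 + 0.3 * factors[:, 0] + 0.1 * factors[:, 2] + rng.normal(scale=0.5, size=221)
table, r2 = ols_enter(contribution, factors, ["F_a", "F_b", "F_c"], standardize=True)
for name, (coef, t) in table.items():
    print(f"{name:12s} coef={coef:7.3f}  t={t:7.3f}")
print(f"R^2 = {r2:.3f}")
```

Two-sided p-values, as in the Sig. column of SPSS output, can be obtained from the t statistics with n - k degrees of freedom, for example via `scipy.stats.t.sf`.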

Table 5 Estimated regression model

| Model | Coefficient | t | Sig. | VIF |
|---|---|---|---|---|
| Factor 1 - HEI facilities | 0.143 | 2.046 | 0.042 | 1.566 |
| Factor 2 - Internet access | 0.006 | 0.089 | 0.930 | 1.454 |
| Factor 3 - Relationship with students | 0.023 | 0.269 | 0.788 | 2.293 |
| Factor 4 - Secretariat support | -0.059 | -0.935 | 0.351 | 1.278 |
| Factor 5 - Student involvement | -0.022 | -0.304 | 0.761 | 1.653 |
| Factor 6 - Course coordination | 0.152 | 2.405 | 0.017 | 1.284 |
| Factor 7 - International production | 0.200 | 3.047 | 0.003 | 1.379 |
| Factor 8 - International student productions | -0.082 | -1.144 | 0.254 | 1.660 |
| Factor 9 - Adherence to the research line | 0.329 | 4.848 | 0.000 | 1.472 |

Source: Research data (2022).

Note: Dependent variable = Contribution to the course.

The independent variables explain 27.6% of the variation in the professors' perceived contribution to the PPG (R² = 0.276). The VIF values ranged from 1.278 to 2.293, well below the thresholds commonly used to flag multicollinearity (5 or 10), indicating that multicollinearity is not a problem. The Kolmogorov-Smirnov (KS) test indicated that the residuals are not normally distributed. The Pesaran-Pesaran test (sig. = 0.756) did not reject the null hypothesis of homoscedasticity, supporting the assumption of constant error variance.
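The diagnostics reported above can be reproduced in outline with the following sketch. It assumes the usual definitions: VIF_j = 1/(1 - R_j²), where R_j² comes from regressing predictor j on the remaining predictors, and the Pesaran-Pesaran test as it is commonly described, namely an auxiliary regression of the squared residuals on the squared fitted values, where a non-significant slope is read as no evidence of heteroscedasticity. The function names are hypothetical and the data in the test are invented. Converting the returned t statistic into a p-value (such as the reported sig. of 0.756) would also require the t distribution with n - 2 degrees of freedom, for example from SciPy, which is not used here.

```python
import math
from typing import List, Sequence, Tuple

Matrix = Sequence[Sequence[float]]


def fit_ols(X: Matrix, y: Sequence[float]) -> Tuple[List[float], List[float]]:
    """OLS with an intercept, solved by Gauss-Jordan elimination on the normal equations.

    Returns (coefficients with the intercept first, fitted values).
    """
    rows = [[1.0] + [float(v) for v in x] for x in X]
    p = len(rows[0])
    # Augmented matrix [X'X | X'y]
    a = [[sum(r[i] * r[j] for r in rows) for j in range(p)]
         + [sum(r[i] * t for r, t in zip(rows, y))] for i in range(p)]
    for c in range(p):
        piv = max(range(c, p), key=lambda r: abs(a[r][c]))
        if abs(a[piv][c]) < 1e-12:
            raise ValueError("singular design matrix (perfect multicollinearity)")
        a[c], a[piv] = a[piv], a[c]
        # Zero out column c in every other row.
        for r in range(p):
            if r != c:
                f = a[r][c] / a[c][c]
                a[r] = [u - f * w for u, w in zip(a[r], a[c])]
    beta = [a[i][p] / a[i][i] for i in range(p)]
    fitted = [sum(b * v for b, v in zip(beta, r)) for r in rows]
    return beta, fitted


def r_squared(y: Sequence[float], fitted: Sequence[float]) -> float:
    mean_y = sum(y) / len(y)
    ss_res = sum((t - f) ** 2 for t, f in zip(y, fitted))
    ss_tot = sum((t - mean_y) ** 2 for t in y)
    return 1.0 - ss_res / ss_tot


def vif(X: Matrix) -> List[float]:
    """VIF_j = 1 / (1 - R_j^2), regressing predictor j on all the other predictors."""
    out = []
    for j in range(len(X[0])):
        target = [float(row[j]) for row in X]
        others = [[v for i, v in enumerate(row) if i != j] for row in X]
        _, fitted = fit_ols(others, target)
        out.append(1.0 / (1.0 - r_squared(target, fitted)))
    return out


def pesaran_pesaran_t(X: Matrix, y: Sequence[float]) -> float:
    """t statistic of the slope in the auxiliary regression e^2 = a + b * yhat^2."""
    _, fitted = fit_ols(X, y)
    e2 = [(t - f) ** 2 for t, f in zip(y, fitted)]  # squared residuals
    z = [f ** 2 for f in fitted]                     # squared fitted values
    (_, slope), aux_fitted = fit_ols([[v] for v in z], e2)
    n = len(z)
    s2 = sum((u - w) ** 2 for u, w in zip(e2, aux_fitted)) / (n - 2)
    mean_z = sum(z) / n
    se_slope = math.sqrt(s2 / sum((v - mean_z) ** 2 for v in z))
    return slope / se_slope
```

Hand-coding keeps the sketch dependency-free; in practice these statistics, and the KS normality check on the residuals, would normally come from a statistics package.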

The factor with the highest coefficient is Adherence to the Research Line (0.329; p < 0.001), indicating a positive relationship between this factor and the professor's perceived contribution to the PPG. In other words, when professors supervise dissertation or thesis topics and develop studies and research aligned with the research line in which they work, their perceived contribution to the course increases. Thus, the better the performance of professors and the closer they are to the purposes of their research line, the stronger their perception of contribution (MACCARI et al., 2009).

The factor with the second greatest influence on the dependent variable is International Production (0.200; p = 0.003). Productions and relationships established with international researchers increase the professor's perception of contributing to the course. Besides contributing to the development of the PPG, this broadens the social insertion of the research produced and, generally, its impact as well, which is in line with CAPES' objectives. In Brazil specifically, although internationalization is perceived differently across types of universities, given their peculiarities (ALMEIDA et al., 2021), there is great potential to be explored by researchers who have the capabilities and interest for this goal, and such efforts should be encouraged through dedicated investment.

The third factor concerns the Coordination of the PPGs (0.152; p = 0.017). In the professors' perception, the more the coordination considers the interests of the faculty, answers questions and suggestions, offers support, makes clear what is expected of professors, and holds meetings to discuss the Program's guidelines, the more these actions influence their performance and contribution to the course. These actions matter because they direct professors' efforts toward the activities they should carry out for the growth and quality of the PPG.

The last significant factor is HEI Facilities (0.143; p = 0.042), which relates to infrastructure, equipment, resources, laboratories, and spaces for conferences and events. The better the Program's infrastructure and available equipment, the better the quality of the faculty's work and the conditions for carrying out good research. In areas that require laboratories, these spaces are indispensable: they make studies and tests possible and give robustness to the PPG's scientific production.

FINAL CONSIDERATIONS

Social development is related to the quality of education and to the continuous improvement and evaluation of the educational context, which allows the State to measure quality and gives visibility to educational institutions. This study aimed to identify the perception of the professors of an HEI regarding their participation in the PPG.

Ten dimensions were identified, which can serve as guidelines for evaluating and monitoring PPGs. The regression results indicated that Adherence to the Research Line, International Production, HEI Facilities, and Coordination of the PPGs are positively and significantly associated with the faculty's contribution to the course. These factors relate to scientific production, infrastructure, and program management, all of which are crucial to the development of the program.

Among the self-assessment factors, those related to internationalization stand out: their means indicate that internationalization remains a challenge, especially regarding the maintenance of international relationships. These results were to be expected, since most courses at the HEI are not yet classified by CAPES as courses of excellence. On the other hand, recognizing through a self-assessment process that internationalization needs improvement is already a good starting point for managers to seek alternatives.

Adherence to the research line is another essential factor for professor self-assessment. A lack of alignment between what professors do, produce, and supervise and the Program's research lines can seriously harm the Program dimension of the CAPES evaluation. Maintaining "umbrella" research projects aligned with the research line in which the professor works tends to generate new knowledge for course subjects and dissertations and to produce scientific literature suited to the program's area of activity. Thus, since research projects underpin the professor's results, constant self-assessment on this issue is essential for identifying possible mismatches and, consequently, restoring focus.

We also highlight the factors associated with the program's operation, such as adequate infrastructure and efficient coordination. Building a course of excellence involves creating an adequate work structure and participative management. In this sense, the faculty's contribution depends not only on the institution's ability to provide the necessary physical, material, and financial resources but also on management's ability to keep professors engaged.

As a theoretical contribution, this paper advances the definition of the factors of professor self-assessment. It also makes a practical contribution by highlighting the factors that determine professor self-assessment and by generating guidelines for improving PPGs. As an institutional policy, developing a solid self-assessment system capable of portraying each course and the institution's courses as a whole is a great challenge. However, given the recent changes in the CAPES evaluation system, this challenge has become both urgent and necessary.

Thus, this study represents a first step toward a self-assessment model for the faculty dimension. Further psychometric tests are still needed to adequately construct and validate a measure. Future research may adapt the instrument developed here for area-specific evaluations and propose evaluation models for the other stakeholders involved in a PPG, such as students, graduates, and the external community.