Educação em Revista

Print version ISSN 0102-4698; online version ISSN 1982-6621

Educ. rev. vol.40 Belo Horizonte 2024 Epub 30-Jan-2024

https://doi.org/10.1590/0102-469839797

Related to: 10.1590/SciELOPreprints.4126

ARTICLE

DEVELOPMENT AND VALIDATION OF A DIGITAL VULNERABILITY IDENTIFICATION INSTRUMENT (DVI-Q) FOR K-12 STUDENTS

Federal University of Sergipe. São Cristóvão, SE, Brazil.

The number of young people using digital technologies at an increasingly early age has risen significantly. Considering that inappropriate and abusive use of technologies can harm several dimensions of human life, this article presents and discusses the systematized stages of development and validation of an instrument that aims to measure indicators of digital vulnerability among high school students. The Digital Vulnerability Identification Questionnaire (DVI-Q) was built on the literature, and content validation was chosen to evaluate, through the Delphi method, the experts' judgment of the instrument's contents. A panel of 26 experts with training and experience in the fields of education and health took part in this validation process. Samples of students then contributed to the semantic analysis of the questions and to a pilot application of the questionnaire for statistical analysis and testing. All of these steps took place during the pandemic. The DVI-Q achieved excellent results in the validation processes, with a Content Validity Index above 0.80 for all questions and categories, and its reliability and internal consistency were demonstrated by a Cronbach's alpha coefficient of 0.821. The final version of the DVI-Q contains 24 questions divided into four categories. The validation process also yielded evidence that, after further improvement and cross-cultural adaptation, the instrument could be applied to other audiences to investigate different correlations in combination with other research instruments and statistical analyses.

Keywords: Digital mobile technologies; content validation; Delphi method


INTRODUCTION

Even before we heard of the new coronavirus (SARS-CoV-2), which causes Covid-19, technologies in general, and especially Digital Mobile Information and Communication Technologies (MDICT), were already part of the daily routine of the population worldwide, providing new forms of socialization and a wide variety of resources and services essential for maintaining different political, economic, sociocultural, academic, and professional spheres.

The improvement of digital technologies, especially wireless connectivity in mobile phone services, has given people access to a wider variety of mobile devices, particularly smartphones, with features such as internet connection, instant messaging applications, e-mail services, social networks, and the sharing of photos, videos, music, and other files. In this context, the number of young people using these technological resources at an increasingly early age has also grown, and with it, new ways of learning, thinking, and interacting in the digital society have emerged.

MDICT undeniably offer a series of benefits to the population, such as globalized interactivity, convenience, work and study opportunities, greater agility in daily tasks, entertainment, and other facilities for their users. At the same time, we cannot overlook the possibility that MDICT may prove harmful in several dimensions of human life when used in an abusive and uncontrolled way, something that has become common among teenagers: they may compromise physical and mental health through pathological dependence on technologies, cause personal and social conflicts, and even trigger or aggravate emotional disorders such as anxiety (RIBEIRO; LEITE; SOUSA, 2009; KING; NARDI; SILVA, 2014; TUMELEIRO et al., 2018).

In light of this discussion, this article presents the systematized steps of the development and validation of an instrument that aims to measure indicators of digital vulnerability among high school students. We emphasize that this instrument is an important resource for analyzing students' digital culture, including their use of digital technologies and its correlation with learning. This is considered innovative and relevant in view of the assumptions of the Common National Curricular Base, specifically its ten general competencies in the pedagogical scope, which articulate knowledge, attitudes, and values for the full exercise of citizenship, with a focus on technological languages and on specific skills for developing digital culture in different social dimensions (BRASIL, 2018).

The development and validation of the proposed instrument are justified by the need to assess adolescents' risk of vulnerability from inappropriate use of technologies, which can trigger various disorders and harms, including harm to learning, through anxiety disorders, the worsening of existing pathologies, and other situations. In such cases, it is fundamentally important that teachers can objectively direct the integration of technologies toward research-oriented use and other school activities. The decision to investigate indicators of vulnerability among high school students, specifically those in the 3rd year, was motivated by the fact that this is the last stage of Basic Education, during which most students transition from adolescence to adulthood and many intend to continue on to higher education and/or enter the labor market, a phase that demands greater maturity and responsibility in decision-making, especially regarding the use of MDICT in their social relationships (ALVES, 2019).

Although there is consensus on the importance of using quality data collection instruments to investigate phenomena objectively and systematically, a significant number of questionnaires are still developed and applied in research across various areas without having been properly validated (KOSOWSKI et al., 2009; SOUZA; ALEXANDRE; GUIRARDELLO, 2017). For this reason, we prioritized a consistent assessment of the quality of the developed instrument through validation methods that ensure reliable measures and indicators, as detailed in the following sections.

FIRST STEPS

The validation process can be understood as a set of methods for analyzing the accuracy of the results obtained from applying a given research instrument, such as a questionnaire, interview script, or test, verifying whether it in fact captures the phenomena and objectives it is intended to investigate, and identifying any need for improvement or adaptation between the selected variables and the theoretical construct evaluated (BITTENCOURT et al., 2011; JESUS, 2013; ALVES, 2019). In other words, an instrument is considered valid when it achieves the objectives proposed during its elaboration.

Among the main validation methods used in research over the years, we highlight criterion, construct, and content validation. In general terms, criterion validation assesses how well the scales of a given instrument relate to an external criterion and how well they predict characteristics of the subjects as well as future events and behaviors; construct validation evaluates how well the instrument represents the behavior of variables that cannot be measured directly, called latent variables; and content validation determines whether the theoretical construction of the items adequately represents all dimensions of the content to be measured (JESUS, 2013; SOUZA; ALEXANDRE; GUIRARDELLO, 2017; ALVES, 2019).

The type of validation adopted in this study was content validation, given its high applicability in research in the field of education that involves instruments with qualitative and quantitative approaches in data analysis. The choice of content validation proved to be appropriate to assess, based on the judgment of experts, whether the contents present in the instrument submitted to this process are effective and consistent with the criteria established for measuring the phenomenon to be investigated (ALEXANDRE; COLUCI, 2011; ALVES, 2019; MATOS et al., 2020). In the following topics, we present in detail the steps developed in the validation process of a digital vulnerability measurement instrument, from the preparation of the questions to the contribution of the expert group.

Development of questions: rationale and reference matrix

When constructing questionnaires, performance tests, and other research instruments intended to assess indicators of competencies, attitudes, and skills through descriptors and variables, the starting point should be a reference matrix that organizes and specifically describes the elements assessed (PAGAN; TOLENTINO-NETO, 2015; ALVES, 2019).

In this perspective, the research instrument, called the Digital Vulnerability Identification Questionnaire (DVI-Q) was elaborated together with a specific reference matrix, whose objectives, descriptors, and categories were previously established to guide the construction of the questions in a reasoned manner. The process of developing the first version of the DVI-Q, as well as its reference matrix, was based on content analysis of bibliographic research on related themes for the definition of constructs and dimensions that were addressed and organized into categories.

Initially, we used the contributions of Ayres et al. (2003) and Ayres, Paiva and França Júnior (2012) to define and understand the concept of vulnerability, which, in turn, is subjective and involves practical and epistemological foundations articulated with behavioral, cultural, political, and economic aspects to understand how certain population groups, in the analytical dimensions of individual, social and programmatic character, become exposed to health problems and risks. Indeed, the understanding of vulnerability presented in this research is inserted in a multidimensional sphere and related to biological, epidemiological, attitudinal, and socio-cultural factors.

To associate the theoretical contribution of vulnerability with the second central approach of the DVI-Q, that is, the inappropriate use of digital technologies, we resorted to the review of several studies focused on this theme, predominantly in the areas of health and education, and with different populations and methodological approaches (YOUNG, 1998; WIDYANTO; McMURRAN, 2004; KING; VALENÇA; NARDI, 2010; CONTI et al., 2012; KING; NARDI; SILVA, 2014; YILDIRIM; CORREIA, 2015; GONZÁLEZ-CABRERA et al., 2017; LEE et al., 2017; SILVA, 2017; LOUREIRO; GALHARDO, 2018; SENADOR, 2019; KING et al., 2020; SILVA et al., 2021).

Among the studies mentioned, some developed their own validated instruments to assess a range of phenomena related to technology abuse, such as the Internet Addiction Test (IAT), initially proposed by Young (1998) and improved by Widyanto and McMurran (2004). The IAT has since been translated and adapted to various contexts and has served as the basis for other instruments, such as the Nomophobia Questionnaire (NMP-Q) by Yildirim and Correia (2015), another important tool for diagnosing disorders caused by pathological dependence on digital technologies. In addition, with a focus on the contemporary Brazilian context, we highlight the contributions of King, Nardi and Silva (2014) and King et al. (2020), together with other researchers and collaborators of the Delete Institute: digital detox and conscious use of technologies, at the Department of Psychiatry of the Federal University of Rio de Janeiro (UFRJ).

The DVI-Q questions were based on these studies but written from an educational perspective: rather than evaluating cognitive learning of technological concepts, they aim, within a pluralist epistemological framework, to identify possible relationships between technology use and different levels of vulnerability, using a four-point Likert-type scale.

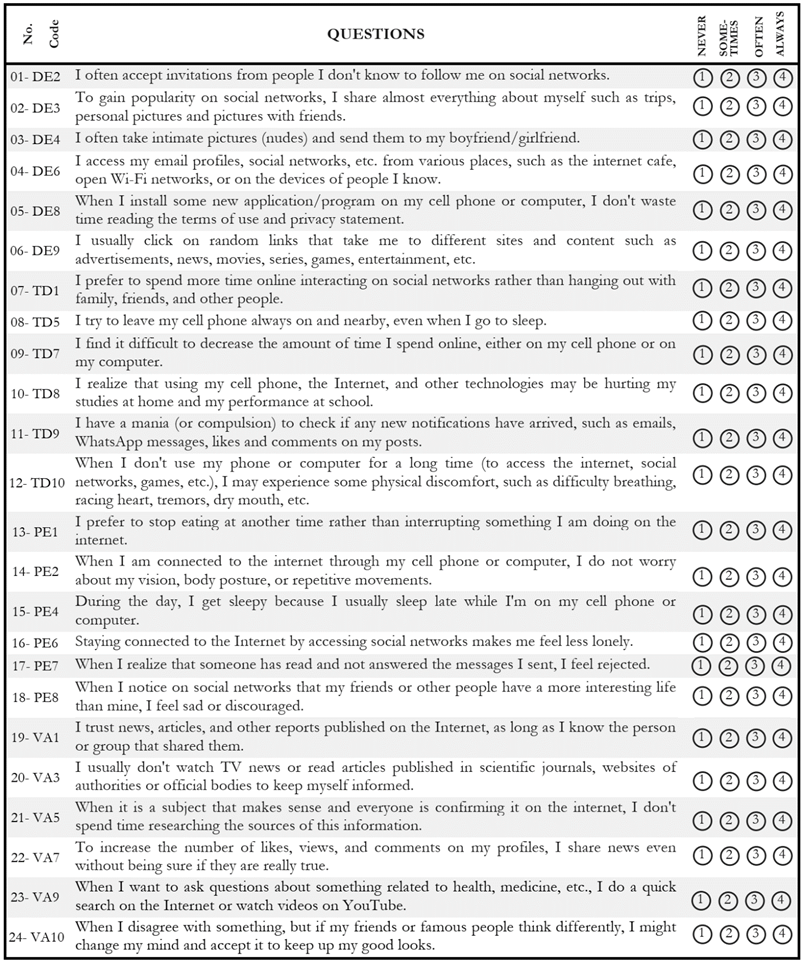

At first, the DVI-Q prototype had 40 questions organized equally into four, specific, but at the same time interrelated categories, namely: Data exposure; Technology dependence; Physical/emotional aggravation; and Virtual alienation. A breakdown of these categories is presented in Chart 1, below.

Source: Prepared by the authors (2021).

Chart 1: Categories that may represent indicators of digital vulnerability among adolescents.

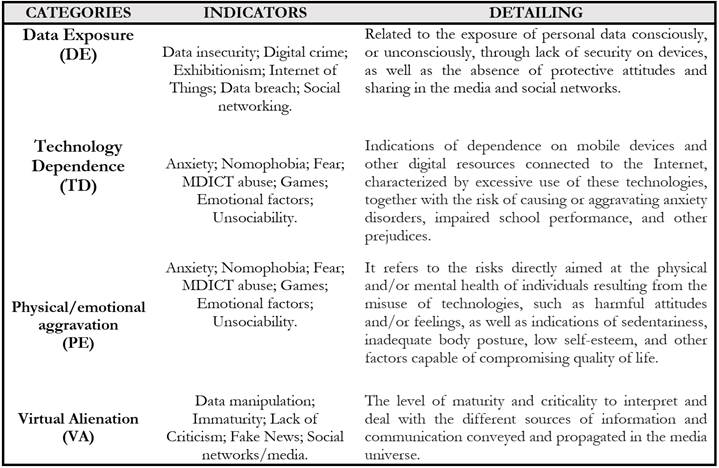

In addition to the reference matrix2, the DVI-Q questions were prepared using some criteria so that a group of specialists could establish their judgment during the process of content validation also through a Likert-type scale of four levels of agreement, in which the score 1 (one) refers to the total disagreement, and 4 (four) shows the total agreement of the item evaluated against the established criteria: characterization; relevance; vulnerability; neutrality; and objectivity. The details of these criteria are presented in Chart 2, below.

Source: Prepared by the authors (2021).

Chart 2: Criteria established for the DVI-Q to be evaluated by experts in the validation process.

Establishing these criteria helped systematize the experts' evaluation. Besides serving as a barema (a set of tables or numerical data presenting the results of certain calculations), the criteria also captured the predictive potential of the questions, ensuring higher quality in the wording of the items and avoiding biases such as excessive use of technical terms and inappropriate language that could confuse respondents or induce their answers.

Methodology, ethical aspects, expert panel formation, and pilot application

The validation of the DVI-Q followed the ethical principles established by the National Health Council (NHC) in Resolution No. 510/2016, which deals with research involving human beings, and was reviewed and approved by the Research Ethics Committee (REC) of the Federal University of Sergipe under Certificate of Ethics Appreciation Submission (CEAS) No. 35407520.4.0000.5546 and Opinion No. 4,490,395. The participants in this process fall into two groups: the first, a panel of experts comprising teachers/researchers and health professionals; the second, a sample of students. Both groups contributed specifically to the instrument validation processes.

Initially, for the content validation process of the instrument developed, the Delphi method was used with the purpose of obtaining a grounded consensus among a group of specialists in relation to the contents, categories, descriptors, and criteria submitted to the evaluation. The choice of this method proved relevant because it is one of the main psychometric techniques used to form a panel of experts and, subsequently, to determine consensus for defining competencies, educational content, planning pedagogical actions, and establishing criteria and evaluation methods in questionnaire validation processes (PIRES; BRANDÃO; SILVA, 2006; JESUS, 2013; ANTUNES, 2014; MARQUES; FREITAS, 2018; ALVES, 2019).

The participants who formed the panel of experts for the content validation of the DVI-Q were invited using the snowball technique. They were predominantly teachers/researchers who work on and conduct research into the use of technologies in education and have experience developing questionnaires and school performance tests, as well as health professionals, also researchers, who work with or identify with the themes addressed in the instrument, given the close relationship of the questions with the physical, social, emotional, and other biopsychosocial and socioecological dimensions of health.

The snowball method is a non-probabilistic sampling technique that uses chains of referrals to identify and reach participants according to their accessibility (BIERNACKI; WALDORF, 1981). In this regard, Rowe and Wright (1999), Powell (2003), Jesus (2013), and Alves (2019) stress the importance of seeking heterogeneous backgrounds among the members of an expert panel in Delphi validation processes, to ensure balance and greater impartiality in judging the criteria evaluated.

It is worth recalling that, given the pandemic scenario in which the study was conducted, and in compliance with the health measures established by the competent authorities to reduce Covid-19 transmission, the entire validation process took place remotely through MDICT. Thus, soon after the first version of the DVI-Q was prepared, the questions were transposed to and adapted for the Google Forms platform, a free online tool for creating forms and storing them in the cloud, whose use intensified notably in scientific research and remote school activities during the pandemic.

The evaluators who made up the panel of experts, whom we also call judges, as well as the group of students, were invited to participate in the research voluntarily, with consent expressed through an Informed Consent Form (ICF). The ICF was written as an invitation letter and sent by e-mail, Telegram, and WhatsApp, together with the link to a questionnaire collecting the participants' sociodemographic profile.

It is noteworthy that the use of the Delphi method within the context of physical and social distance proved advantageous as it enabled the questionnaire and the other instruments to be evaluated by the specialists in more than one round, without them being gathered in the same environment, until the intended consensus was obtained. Thus, the process of repeating rounds had the purpose of reducing divergences and reaching a consensus of at least 80% among the evaluators about the items that compose the DVI-Q, as recommended by the literature (WESTMORELAND et al., 2000; JESUS, 2013; ANTUNES, 2014; MARQUES; FREITAS, 2018).
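The round-by-round consensus rule described above can be sketched as follows. This is a minimal illustration, not the authors' procedure: it assumes that each round yields a list of ratings from 1 to 4 per item, that an item reaches consensus when at least 80% of the judges rate it 3 or 4, and that only items still lacking consensus are re-rated in later rounds. The item labels and ratings are hypothetical.

```python
from typing import Dict, List, Optional

CONSENSUS = 0.80  # minimum share of judges in agreement (80%)

def agreement(ratings: List[int]) -> float:
    """Share of judges who rated the item 3 (agree) or 4 (strongly agree)."""
    return sum(1 for r in ratings if r >= 3) / len(ratings)

def consensus_round(rounds: List[Dict[str, List[int]]]) -> Dict[str, Optional[int]]:
    """Map each item to the first round (1-based) in which it reached
    consensus, or to None if it never did."""
    result: Dict[str, Optional[int]] = {}
    for number, ratings_by_item in enumerate(rounds, start=1):
        for item, ratings in ratings_by_item.items():
            if result.get(item) is not None:
                continue  # already settled in an earlier round
            result[item] = number if agreement(ratings) >= CONSENSUS else None
    return result

# Hypothetical example with 10 judges: Q2 is reformulated and re-rated.
rounds = [
    {"Q1": [4, 4, 3, 3, 4, 3, 4, 4, 3, 2], "Q2": [2, 3, 4, 2, 3, 2, 4, 3, 2, 3]},
    {"Q2": [3, 4, 4, 3, 3, 4, 2, 4, 3, 3]},
]
print(consensus_round(rounds))  # {'Q1': 1, 'Q2': 2}
```

In this toy run, Q2 reaches only 60% agreement in the first round and 90% after reformulation, so it settles in round 2.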

After the conclusion of the Delphi method with the satisfactory consensus obtained by the evaluating judges, the DVI-Q was also submitted to a semantic analysis with the target audience for which this instrument is intended, i.e., a representative sample of students finishing the 3rd year of high school, so that they could explain their impressions about the questionnaire and point out the need for changes to better understand the questions and their applicability.

The use of MDICT proved to be an important and effective strategy in these stages, especially for mediating communication between researchers and participants and for ensuring access to the instruments and data collection without restrictions of time and space. On the use of the Internet and other technologies in research, Flick (2009, p. 32) argues that "many of the existing qualitative [and quantitative] methods are being transferred and adapted to research that uses the Internet as a tool, as a source, or as a research question."

After completing all the steps of the content validation of the DVI-Q, we then submitted the instrument, this time in person, to a pilot application with a larger sample of students. The aim was to measure the questionnaire's reliability statistically by analyzing its internal consistency in the Statistical Package for the Social Sciences (SPSS) software, and to enable correlations among the variables and with other research instruments.
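Internal consistency in the pilot application was expressed through Cronbach's alpha (0.821 for the DVI-Q). The coefficient compares the sum of the item variances with the variance of the respondents' total scores: alpha = k / (k − 1) × (1 − sum of item variances / variance of total scores), where k is the number of items. The sketch below is a minimal illustration of that formula, not SPSS's implementation; it assumes a complete matrix of numeric answers (rows = respondents, columns = items) with no missing values, and the toy data are hypothetical.

```python
from statistics import variance
from typing import Sequence

def cronbach_alpha(scores: Sequence[Sequence[float]]) -> float:
    """Cronbach's alpha for a respondents x items matrix (no missing values)."""
    k = len(scores[0])  # number of items
    if k < 2 or len(scores) < 2:
        raise ValueError("need at least two items and two respondents")
    item_columns = list(zip(*scores))  # one tuple of answers per item
    sum_item_var = sum(variance(col) for col in item_columns)
    total_var = variance([sum(row) for row in scores])  # variance of total scores
    return k / (k - 1) * (1 - sum_item_var / total_var)

# Hypothetical 4-point answers from four respondents to three items.
toy = [
    [1, 2, 2],
    [2, 3, 3],
    [3, 3, 4],
    [4, 4, 4],
]
print(round(cronbach_alpha(toy), 3))  # 0.953
```

The sample variance (`statistics.variance`) is used for both the items and the totals; because alpha depends only on their ratio, using the population variance consistently would give the same result.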

RESULTS AND DISCUSSION

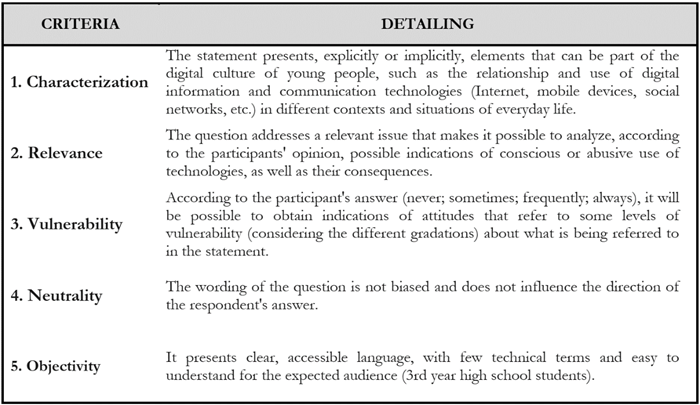

The panel of experts involved in the DVI-Q validation process was composed of 26 (twenty-six) participants, also known as judges. This number is considered valid because, although there is no consensus about the exact number of experts involved in the validation process using the Delphi methodology, Alexandre and Coluci (2011), García e Suárez (2013) and Revorêdo et al. (2016) recommend that, to ensure higher quality in the analysis of the assessments, the number should be at least 10 (ten) and at most 30 (thirty) participants. In Chart 3, we present the characterization of these judges according to age, education, academic degree, and area of work.

Caption: *Participant with more than one education; PHC: Primary Health Care; HSA: Secondary Health Care; BE: Basic Education; HE: Higher Education. Source: Elaborated by the authors (2021).

Chart 3: Characterization of the panel of experts involved in the validation of the DVI-Q.

As shown in Chart 3, there was considerable diversity in the group of experts who participated in the validation process of the DVI-Q, in which about 70% corresponded to professionals with degrees and working predominantly in the areas of education and research, and the remaining 30% were health professionals with different fields of expertise, such as Primary and Secondary Care, Social Work, research and teaching in Higher Education.

The judges' mean age was 35 (thirty-five) years, ranging from 24 (twenty-four) to 47 (forty-seven), with a standard deviation of 6.5 years. Most were women (65%, n = 17), and 54% (14) of the participants reported having more than 10 years of education. To preserve the participants' anonymity and facilitate the organization of the data presented in Table 1, a code was assigned to each specialist: the first two numeric characters give the chronological order in which the participant submitted their evaluation of the instrument; the next three characters abbreviate the first undergraduate degree reported on the sociodemographic form; and the last characters indicate the academic degree, for example, ESP for Specialist, MEST for Master, and DR for Doctor, as well as M_IN and D_IN for Master's and Doctoral students, respectively, when the Stricto Sensu graduate program was in its final phase. To make these codes easier to understand, Figure 1 below gives two graphic examples.
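The coding scheme can be expressed as a small parser. This is a hypothetical sketch: the underscore separator and the exact pattern are inferred from the two codes quoted in this article (18PSI_MEST and 25LET_MEST), and the names `parse_judge_code` and `JudgeCode` are illustrative, not part of the study.

```python
import re
from typing import NamedTuple

DEGREES = {
    "ESP": "Specialist",
    "MEST": "Master",
    "DR": "Doctor",
    "M_IN": "Master's student",
    "D_IN": "Doctoral student",
}

# order (2 digits) + undergraduate degree (3 letters) + "_" + academic degree
CODE_PATTERN = re.compile(r"^(\d{2})([A-Z]{3})_(ESP|MEST|DR|M_IN|D_IN)$")

class JudgeCode(NamedTuple):
    order: int          # chronological position of the submitted evaluation
    undergraduate: str  # abbreviation of the first undergraduate degree
    degree: str         # academic degree, spelled out

def parse_judge_code(code: str) -> JudgeCode:
    match = CODE_PATTERN.match(code)
    if match is None:
        raise ValueError(f"unrecognized judge code: {code!r}")
    order, undergraduate, degree = match.groups()
    return JudgeCode(int(order), undergraduate, DEGREES[degree])

print(parse_judge_code("18PSI_MEST"))
# JudgeCode(order=18, undergraduate='PSI', degree='Master')
```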

Figure 1: Graphic exemplification of the codes assigned to the DVI-Q judges.

Source: Elaborated by the authors (2021).

As shown in Figure 1, example one refers to the first judge to submit their evaluation of the DVI-Q, who holds a degree in Education and is a doctoral student, while example two represents a judge with a degree in Psychology who was eighteenth in the chronological order of submission and holds a master's degree. Some of the judges reported having more than one degree, and many reported several specializations (Lato Sensu graduate programs), such as Technologies and Open and Digital Education, Occupational Medicine, Family Health, Inclusive Education, Health Education, Psychiatry, and Linguistic Analysis and Uses, among others.

To further illustrate how diverse and qualified the panel of specialists was, 80% (21) reported participating in one or more research groups registered with the National Council for Scientific and Technological Development, and, regarding length of professional experience, 20% (5) reported more than 15 years and 23% (6) between 10 and 15 years, with research and teaching in Basic and Higher Education as the predominant fields. Overall, the panel of experts involved in this validation process included professionals with diverse backgrounds and areas of expertise, distributed relatively evenly, which allowed a wider range of opinions and qualifications in the analysis and improvement of the DVI-Q, whose validation results are presented in the next topic.

Results and analysis of the instrument validation process

The Delphi method allowed the group of specialists to assess the DVI-Q questions both quantitatively, through the Content Validity Index (CVI) computed from the judges' level of agreement, and qualitatively, based on the comments, guidance, and suggestions the evaluators provided on the forms. Although Coluci, Alexandre and Milani (2015, p. 931) did not find in their review a consensus in the literature establishing any particular statistical test as the gold standard for content analysis, they showed that, in addition to a qualitative approach based on the expert panel's comments, it is important to perform a quantitative analysis through the CVI to measure the "proportion or percentage of judges who agree on certain aspects of the instrument and its items".

In this context, the CVI was calculated from the four-level Likert scale on which the descriptors were evaluated: level 1 (one) meant not relevant or not representative, indicating the specialists' total disagreement with the evaluated item, while the maximum level, 4 (four), meant relevant and representative, indicating the evaluators' total agreement with the item.

For the descriptors and criteria evaluated in the reference matrix and in the DVI-Q to be considered valid or satisfactory, they had to reach a minimum agreement of 80% among the judges (i.e., CVI ≥ 0.80), as recommended by Polit and Beck (2006), Alexandre and Coluci (2011), Revorêdo et al. (2016), and Souza, Alexandre, and Guirardello (2017). Since the CVI is the proportion of the judges' ratings that consider an item satisfactory or adequate, each item can be evaluated individually with the following formula:

CVI = (number of judges who rated the item 3 or 4) / (total number of judges)

Quantitative data were tabulated to measure the percentage of agreement in the judges' assessment of the questions against the established criteria (as shown in Chart 2), i.e., the CVI. On the Likert scale, answers marked "Strongly disagree - (1)" and "Disagree - (2)" were considered unsatisfactory, whereas those marked "Agree - (3)" and "Strongly agree - (4)" were considered satisfactory. In summary, to be considered valid, each question had to reach the minimum agreement of 80% (CVI ≥ 0.80) on all five established criteria.
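The per-question rule above (ratings of 3 or 4 count as satisfactory, and the CVI must be at least 0.80 on every one of the five criteria) can be sketched as follows. This is a minimal illustration under those stated assumptions; the function names and the ratings from 26 judges are hypothetical, not the study's data.

```python
from typing import Dict, List

CRITERIA = ("characterization", "relevance", "vulnerability", "neutrality", "objectivity")
THRESHOLD = 0.80

def item_cvi(ratings: List[int]) -> float:
    """Share of judges who rated 3 (agree) or 4 (strongly agree)."""
    if not ratings or any(r not in (1, 2, 3, 4) for r in ratings):
        raise ValueError("expected a non-empty list of ratings from 1 to 4")
    return sum(r >= 3 for r in ratings) / len(ratings)

def evaluate_question(ratings_by_criterion: Dict[str, List[int]]) -> Dict[str, float]:
    """CVI of one question on each of the five criteria."""
    return {c: item_cvi(ratings_by_criterion[c]) for c in CRITERIA}

def failing_criteria(cvis: Dict[str, float]) -> List[str]:
    """Criteria below the 0.80 threshold; an empty list means the question is valid."""
    return [c for c, v in cvis.items() if v < THRESHOLD]

# Hypothetical ratings from 26 judges for one question.
ratings = {c: [4] * 23 + [2] * 3 for c in CRITERIA}
ratings["neutrality"] = [3] * 20 + [1] * 6
cvis = evaluate_question(ratings)
print({c: round(v, 2) for c, v in cvis.items()})
print(failing_criteria(cvis))  # ['neutrality']
```

With 26 judges, 21 satisfactory ratings (21/26 ≈ 0.81) are the minimum that passes; 20 (≈ 0.77) fail, which is consistent with the two-decimal values reported in Table 1.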

The questions that obtained a CVI below 0.80 were reformulated based on the experts' considerations and submitted to a second round of evaluation. However, some recommendations about the remaining questions with favorable CVI were also considered for analysis and contributed to further improve the quality of these items. In this sense, besides the CVI of each criterion evaluated per question, we also calculated the overall CVI* of each category. Table 1 below presents the results for the category Data Exposure.

Table 1: Application of the CVI in the Data Exposure category for validation of the DVI-Q.

| Category | Question | Characterization | Relevance | Vulnerability | Neutrality | Objectivity | CVI* of the category |
|---|---|---|---|---|---|---|---|
| Data Exposure | DE1 | 0.88 | 0.96 | 0.92 | 0.77** | 0.96 | 0.84 |
| | DE2 | 0.92 | 0.88 | 0.88 | 0.54** | 0.92 | |
| | DE3 | 0.81 | 0.81 | 0.88 | 0.73** | 0.85 | |
| | DE4 | 0.88 | 0.88 | 0.88 | 0.65** | 0.85 | |
| | DE5 | 0.88 | 0.85 | 0.81 | 0.77** | 0.85 | |
| | DE6 | 0.92 | 0.92 | 0.92 | 0.81 | 0.92 | |
| | DE7 | 0.81 | 0.77** | 0.81 | 0.65** | 0.85 | |
| | DE8 | 0.88 | 0.92 | 0.96 | 0.77** | 0.77** | |
| | DE9 | 0.85 | 0.81 | 0.85 | 0.69** | 0.85 | |
| | DE10 | 0.88 | 0.88 | 0.85 | 0.85 | 0.88 | |

Caption: CVI* overall category; ** criterion considered unsatisfactory in the judges' evaluation.

Source: Elaborated by the authors (2021).

Although the overall CVI* of the Data Exposure category was considered satisfactory (0.84) according to the panel of experts, eight questions were evaluated as unsatisfactory because they failed to meet at least one of the criteria assigned for the judgment. "Neutrality" was the criterion most often flagged as needing reformulation, specifically in questions DE1, DE2, DE3, DE4, DE5, DE7, DE8, and DE9. In addition, "Relevance" in question DE7 and "Objectivity" in question DE8 were also considered unsatisfactory.
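The text does not spell out how the overall CVI* of a category is obtained. Taking the simple mean of all 50 criterion CVIs in a category reproduces the reported values (0.84 for Data Exposure, and 0.87 for each of the other three categories), so the sketch below assumes that rule. The values are transcribed from Table 1; the function names are illustrative.

```python
# Criteria in the column order of Tables 1 to 4.
CRITERIA = ("Characterization", "Relevance", "Vulnerability", "Neutrality", "Objectivity")
THRESHOLD = 0.80

# Criterion CVIs of the Data Exposure category, transcribed from Table 1.
DATA_EXPOSURE: dict[str, tuple[float, ...]] = {
    "DE1": (0.88, 0.96, 0.92, 0.77, 0.96),
    "DE2": (0.92, 0.88, 0.88, 0.54, 0.92),
    "DE3": (0.81, 0.81, 0.88, 0.73, 0.85),
    "DE4": (0.88, 0.88, 0.88, 0.65, 0.85),
    "DE5": (0.88, 0.85, 0.81, 0.77, 0.85),
    "DE6": (0.92, 0.92, 0.92, 0.81, 0.92),
    "DE7": (0.81, 0.77, 0.81, 0.65, 0.85),
    "DE8": (0.88, 0.92, 0.96, 0.77, 0.77),
    "DE9": (0.85, 0.81, 0.85, 0.69, 0.85),
    "DE10": (0.88, 0.88, 0.85, 0.85, 0.88),
}


def category_cvi(table: dict[str, tuple[float, ...]]) -> float:
    """Overall CVI*, assumed here to be the mean of every criterion CVI."""
    values = [cvi for row in table.values() for cvi in row]
    return sum(values) / len(values)


def flag_criteria(table: dict[str, tuple[float, ...]]) -> dict[str, list[str]]:
    """For each question, list the criteria whose CVI falls below the threshold."""
    flagged = {}
    for question, row in table.items():
        low = [name for name, cvi in zip(CRITERIA, row) if cvi < THRESHOLD]
        if low:
            flagged[question] = low
    return flagged


print(round(category_cvi(DATA_EXPOSURE), 2))  # 0.84
for question, criteria in flag_criteria(DATA_EXPOSURE).items():
    print(question, criteria)
```

The flagged output lists the same eight questions named in the text, with DE7 also failing on "Relevance" and DE8 on "Objectivity".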

We note in advance that the criterion most commented on across the four categories, and the one that most divided the judges' opinions, was "Neutrality". We emphasize, however, that the purpose of this criterion was to identify whether the wording of a question contained any biased element capable of steering the respondent's answer, since the answer alternatives were purposely worded with value judgments in order to identify the opinions and attitudes that respondents might reveal through their choices.

In this regard, we highlight the important contributions of judges 18PSI_MEST and 25LET_MEST on this criterion, which show how the evaluators' view may differ from that of adolescents, the target audience of the DVI-Q.

Judge 18PSI_MEST: I consider the sentences to be well prepared, with accessible language and consistent with the audience to be researched. Besides, they are compatible with the proposed objectives and investigated indicators.

Judge 25LET_MEST: I liked the questions, but in relation to neutrality I put I always agree, I will justify because, as I work with rhetoric and argumentation, it is [...], I do not consider that there is anything neutral or impartial, there is always a bias, whether ideological or something like that that tries to convince or persuade a certain audience about what is desired [...]. For me, reading the questions, they are all presented very ironically, but of course this questionnaire is not meant for me, but it will be for teenage students, and they may have another view. And I believe that they will have another view and will not realize it, because we realize, for example, like this... I don't see the need to leave my profile private on social networks, so, at my age and in view of everything we read and the things we know, we end up finding it ironic, but for them it wouldn't be. That's why I put this neutrality [...], it is neutral from their point of view, but from our point of view, that we are evaluating the issues, I don't consider it neutral, so I'm just justifying it.

Accordingly, several changes had to be made to the wording of the questions based on the judges' considerations; some of their comments are reproduced below to illustrate, or even justify, the changes made.

Judge 07QUI_MEST: I consider that questions DE1; DE2; DE3; DE4; DE5 and DE8 do not present Neutrality in their wording because by using the term "No" it denotes something at least doubtful and that can influence the student's answer. It generates fear, insecurity and influences the answer.

Judge 25LET_MEST: In question DE2, I would complete, although the question was not bad, I would add for the sentence to be complete [...] "for the more followers, the better", so the question will not have ambiguity, the more invitations, or the more followers, right? Although it is simple and I am understanding that it refers to followers, however, since it is for high school students, I think it is better to make it clearer.

For consultation purposes, Figure 2 below provides a QR Code giving access to comparative charts with the wording of the questions both in the first version and after their reformulation during the validation stages.

Figure 2: QR Code to access and consult the reformulated DVI-Q³ questions.

Source: Elaborated by the authors (2021).

In Table 2, below, we present the percentage of agreement of the panel of experts, calculated by means of the CVI of each criterion per question, referring to the Technological Dependence category, as well as the overall CVI*.

Table 2: Application of the CVI in the Technological Dependence category for validation of the DVI-Q.

| Category | Quest. | Characterization | Relevance | Vulnerability | Neutrality | Objectivity | CVI* of the category |
|---|---|---|---|---|---|---|---|
| Technological Dependence | TD1 | 0.88 | 0.88 | 0.88 | 0.88 | 0.88 | 0.87 |
| | TD2 | 0.85 | 0.85 | 0.85 | 0.77** | 0.85 | |
| | TD3 | 0.88 | 0.88 | 0.88 | 0.73** | 0.88 | |
| | TD4 | 0.88 | 0.88 | 0.88 | 0.77** | 0.92 | |
| | TD5 | 0.96 | 0.96 | 0.92 | 0.88 | 1.00 | |
| | TD6 | 0.92 | 0.88 | 0.88 | 0.77** | 0.88 | |
| | TD7 | 0.88 | 0.88 | 0.88 | 0.77** | 0.88 | |
| | TD8 | 0.92 | 0.92 | 0.92 | 0.77** | 0.92 | |
| | TD9 | 0.88 | 0.88 | 0.88 | 0.85 | 0.88 | |
| | TD10 | 0.88 | 0.85 | 0.85 | 0.77** | 0.92 | |

Caption: CVI* overall category; ** criterion considered unsatisfactory in the judges' evaluation.

Source: Elaborated by the authors (2021).

Although the Technological Dependence category was considered valid on the basis of the experts' overall CVI*, seven questions had the "Neutrality" criterion rated as unsatisfactory. Although the rationale behind this criterion has already been discussed, the judges' recommendations were taken into account to reformulate some of these questions, as shown in the comments that follow.

Judge 08LET_D_IN: I noticed that in some questions the "neutrality" criterion was compromised, in the sense that some value judgment was "embedded" in it. The other criteria, "characterization, vulnerability, relevance and objectivity", are all very well identified.

Judge 25LET_MEST: This second category, I thought it was very important, and even we end up recognizing ourselves in these parts [laughs], because we do have a certain dependence on the use of technologies, I [...] honestly I realized that there are a couple of questions here that went directly to me, because I have my cell phone all the time, sometimes I'm not using it, I leave the internet off, but the cell phone is right there by my side, even with the internet off, to study and so on.

The narrative of judge 25LET_MEST suggests that this instrument may also be suitable for adult audiences, such as academics, teachers, and the general population, provided that cross-cultural adaptations are made to align the questions with the characteristics of specific population groups.

On the critical side, judge 19BIO_D_IN pointed out that answering some of the questions would not necessarily indicate that the adolescent has a pathological dependence on technologies. We agree with this consideration, and this is not the goal of the questions: pathological dependence on technologies requires a clinical diagnosis and even has its own terminology, nomophobia. It is also worth noting that, in the current globalized scenario, we consider that everyone has some relationship of dependence on technologies, whether for entertainment, work, study, etc. The focus of this dimension is therefore to investigate whether the respondent shows indicators of overuse of these devices, for various reasons, without entering the specific clinical context of the health area.

Table 3 presents the experts' percentage of agreement, expressed as the CVI, for the criteria evaluated in each question of the Physical/Emotional Aggravation category, as well as the overall CVI* of this category.

Table 3: Application of the CVI in the Physical/Emotional Aggravation category to validate the DVI-Q.

| Category | Quest. | Characterization | Relevance | Vulnerability | Neutrality | Objectivity | CVI* of the category |
|---|---|---|---|---|---|---|---|
| Physical/Emotional Aggravation | PE1 | 0.88 | 0.85 | 0.88 | 0.81 | 0.88 | 0.87 |
| | PE2 | 0.92 | 0.88 | 0.88 | 0.81 | 0.88 | |
| | PE3 | 0.92 | 0.92 | 0.88 | 0.85 | 0.92 | |
| | PE4 | 0.92 | 0.92 | 0.88 | 0.88 | 0.88 | |
| | PE5 | 0.92 | 0.92 | 0.88 | 0.88 | 0.92 | |
| | PE6 | 0.92 | 0.88 | 0.88 | 0.73** | 0.92 | |
| | PE7 | 0.85 | 0.85 | 0.85 | 0.77** | 0.85 | |
| | PE8 | 0.92 | 0.88 | 0.88 | 0.81 | 0.88 | |
| | PE9 | 0.88 | 0.81 | 0.81 | 0.81 | 0.85 | |
| | PE10 | 0.85 | 0.81 | 0.85 | 0.77** | 0.81 | |

Caption: CVI* overall category; ** criterion considered unsatisfactory in the judges' evaluation.

Source: Elaborated by the authors (2021).

Once again, "Neutrality" was the criterion that drew the most comments from the judges, with questions PE6, PE7, and PE10 evaluated as unsatisfactory on it. Overall, however, the Physical/Emotional Aggravation category was considered valid, with a CVI* of 0.87.

Judge 25LET_MEST: Regarding these last two categories, I find them very interesting because it has to do with this perfection that is propagated on social networks and ends up causing a certain feeling of sadness, depressive feelings to the point that the person ends up thinking that their life is not, in quotes, the life that people on social networks have, right? So, I think it is important to address this in the questions, because if the young person or any other person evaluates their life according to what they see on the social networks, it is also a question of consumerism, if they are influenced, I don't know if this is the objective of the research, but I think it is useful for everything, I have even talked to some friends about this, that sometimes we see couples, we see people always smiling, always traveling, and we end up thinking, oh, my life is so boring, my life has nothing so good about it. So sometimes we end up, unconsciously or consciously, comparing ourselves to these perfect lives that are shown in the social networks, but which in fact is not that, and I believe that the same can happen with adolescents.

Finally, Table 4 presents the quantitative data from the judges' evaluation of the questions in the Virtual Alienation category; again, the experts' percentage of agreement on each criterion is expressed by the CVI, together with the overall CVI* of the category.

Table 4: Application of the CVI in the Virtual Alienation category to validate the DVI-Q.

| Category | Quest. | Characterization | Relevance | Vulnerability | Neutrality | Objectivity | CVI* of the category |
|---|---|---|---|---|---|---|---|
| Virtual Alienation | VA1 | 1.00 | 0.96 | 0.96 | 0.92 | 0.96 | 0.87 |
| | VA2 | 0.81 | 0.81 | 0.81 | 0.77** | 0.85 | |
| | VA3 | 0.85 | 0.85 | 0.81 | 0.81 | 0.85 | |
| | VA4 | 0.92 | 0.88 | 0.88 | 0.85 | 0.92 | |
| | VA5 | 0.85 | 0.85 | 0.85 | 0.81 | 0.85 | |
| | VA6 | 0.96 | 0.92 | 0.92 | 0.85 | 0.96 | |
| | VA7 | 0.85 | 0.81 | 0.81 | 0.73** | 0.85 | |
| | VA8 | 0.85 | 0.81 | 0.81 | 0.77** | 0.85 | |
| | VA9 | 0.92 | 0.92 | 0.96 | 0.88 | 0.92 | |
| | VA10 | 0.88 | 0.88 | 0.92 | 0.85 | 0.88 | |

Caption: CVI* overall category; ** criterion considered unsatisfactory in the judges' evaluation.

Source: Elaborated by the authors (2021).

Although the overall CVI* of the Virtual Alienation category was also considered satisfactory, the "Neutrality" criterion was again evaluated as unsatisfactory in three questions (VA2, VA7, and VA8). After being reformulated according to the judges' considerations, the questions were submitted to a second round of evaluation, which took place exclusively through WhatsApp and Telegram over seven days, during which the evaluators could compare the questions before and after the changes based on the opinions raised by the group.

After analyzing the judges' new considerations, we found it necessary to significantly change only three questions, all belonging to the Data Exposure category. Once all the reformulated questions of the DVI-Q were considered valid, the next step was a commented pilot test of the instrument with a sample of the target audience, to further refine the semantic evaluation of the questions.

This semantic validation stage had the voluntary participation of 11 (eleven) students finishing the 3rd year of high school at a school in the Sergipe state education network. It was conducted remotely: the questions were first made available through Google Forms for analysis, and a synchronous group meeting lasting approximately 40 minutes was then held on the Google Meet platform.

All students participating in this stage were 18 years or older and could therefore confirm their participation through the informed consent form sent along with the instrument. Regarding gender and age, seven students were female, aged between 18 and 20, and the remaining four were male, aged between 18 and 22.

This stage aimed to verify whether the DVI-Q was truly understandable to this sample of students, that is, whether the items were clear and objective, and to identify any ambiguity or other inconsistencies that could compromise understanding by the adolescent audience. During this process, the students were asked about the rationale behind the questions, whether they were clear, whether there were any terms they did not understand, whether any items or passages did not make sense or bothered them, and whether they had any comments or suggestions.

At the end of this process, the students showed good acceptance of the DVI-Q, with no reports of comprehension difficulties and no other factors that could cause them embarrassment or discomfort. However, most of them showed some discouragement with the number of questions, 40 (forty) in total.

This concern had already been raised by some judges in the initial evaluation stage, but we chose to keep the full set at this point in order to learn these participants' opinions about which items were most relevant and should be kept in the final version of the instrument. The students were therefore asked to indicate four questions from each category to be excluded from the DVI-Q, which initially produced differences of opinion when they were asked why the questions they indicated should be excluded. To shed some light on this step, some excerpts from the discussions are presented here.

Student 07: I saw no problem with the questions, no, on the contrary, I thought it was cool to answer them, like this, there are a couple of things in the questions that I have already done, like spreading news without knowing if it is true, installing viruses on the cell phone and having to format it, and even this thing of intimate photos, even without showing the face it is still a danger if the cell phone falls into the wrong hands [...]. Another thing, it's not my case, but I know a lot of people who get sick when they can't get good reviews on the internet, I don't care about that, but I have friends who practically beg us to follow them, like their pictures and comment on them.

Student 09: [...] it is very difficult to choose which questions should be excluded, I thought about this one about nudes, because I have never sent nudes and I don't even think of sending them, but after you asked me if I have any friends or know someone who has already shared this type of photo, I remembered some of my friends who have already done this and had a bad experience, so I changed my mind, I think it is an important subject to think about [...]. This question five of the third category [PE5], in my opinion, I would remove from the questionnaire because it is very similar to the first one of the previous category [TD1], and this last one that talks about addiction, shortness of breath and fast heartbeat [TD10], I also think it is important, but the question was too big, if I could make it smaller, it would be better.

The students' narratives show that the DVI-Q was well accepted by the target audience. The greatest difficulty was reaching a consensus on which questions should be excluded, but after some probing about why certain questions should remain and others not, new reflections and debates arose, and most students finally agreed to eliminate the following questions: DE1, DE5, DE7, and DE10 (Data Exposure category); TD2, TD3, TD4, and TD6 (Technological Dependence category); PE3, PE5, PE9, and PE10 (Physical/Emotional Aggravation category); and VA2, VA4, VA6, and VA8 (Virtual Alienation category). This step also led to further changes in some questions, making them clearer and more consistent with the reality of this audience.
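As a quick consistency check, the exclusions listed above leave exactly six questions per category, i.e., the 24 items of the final version. The sketch below uses the question codes as they appear in Tables 1 to 4; whether the final instrument renumbers the retained items is not stated here, so the output shows only the pre-exclusion codes.

```python
CATEGORIES = ("DE", "TD", "PE", "VA")

# Questions evaluated by the judges: ten per category (e.g., DE1..DE10).
ORIGINAL = {cat: [f"{cat}{i}" for i in range(1, 11)] for cat in CATEGORIES}

# Questions the students agreed to eliminate, as listed in the text.
EXCLUDED = {
    "DE1", "DE5", "DE7", "DE10",
    "TD2", "TD3", "TD4", "TD6",
    "PE3", "PE5", "PE9", "PE10",
    "VA2", "VA4", "VA6", "VA8",
}


def retained_items(original: dict[str, list[str]], excluded: set[str]) -> dict[str, list[str]]:
    """Keep each category's original order, dropping the excluded codes."""
    return {cat: [q for q in items if q not in excluded] for cat, items in original.items()}


kept = retained_items(ORIGINAL, EXCLUDED)
for cat, items in kept.items():
    print(cat, len(items), items)
print("total:", sum(len(items) for items in kept.values()))  # total: 24
```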

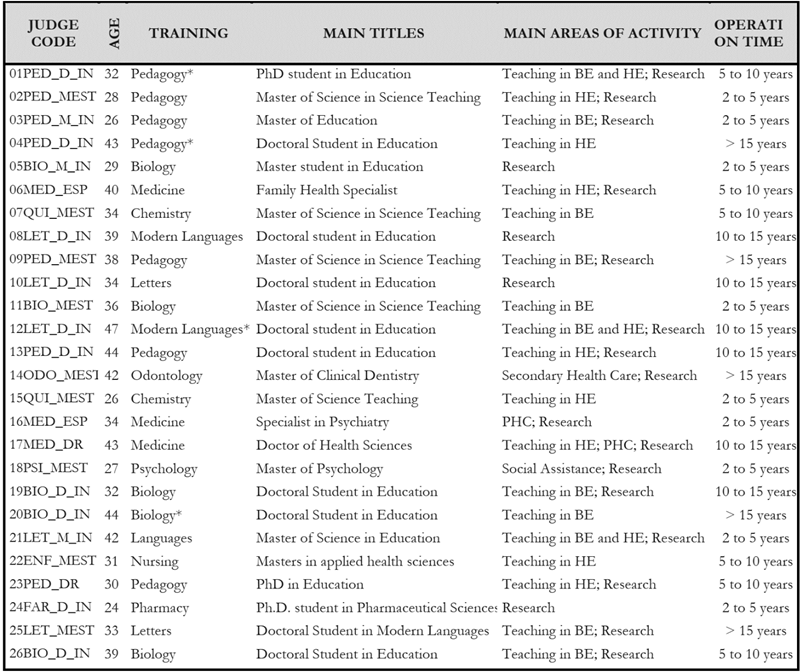

In this context, Hill and Hill (2012), Cunha (2015), and Alves (2019) point out that this semantic validation stage helps qualify the instrument with even more relevant questions, since it allows the degree of understanding and clarity of the statements to be assessed. Thus, the final version of the validated DVI-Q had 24 questions evenly distributed across four categories, as shown in Table 4.

Pilot application, reliability, and interpretation of the DVI-Q

After the content validation of the DVI-Q was completed, the next step was the pilot application of the instrument, together with a socioeconomic questionnaire, to 3rd-year high school students enrolled in four schools of the State Secretariat of Education, Sports, and Culture of Sergipe (SSESCS) in October and November 2021. To ensure statistical reliability, the regional directorates and the respective schools invited to take part in this stage were selected by simple random sampling, following Barbetta (2007), through an unbiased draw.
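The text states only that directorates and schools were chosen by simple random sampling through an unbiased draw. A two-stage draw of that kind can be sketched as follows; the sampling frame, the number of units drawn at each stage, and the seed are all hypothetical, since the study reports only that four schools took part.

```python
import random
from typing import Dict, List, Optional


def two_stage_draw(
    frame: Dict[str, List[str]],
    n_directorates: int,
    schools_per_directorate: int,
    seed: Optional[int] = None,
) -> Dict[str, List[str]]:
    """Draw directorates by simple random sampling, then schools within each.

    Within each stage every unit has the same chance of selection, and
    sampling is without replacement.
    """
    rng = random.Random(seed)  # a fixed seed makes the draw reproducible and auditable
    directorates = rng.sample(sorted(frame), n_directorates)
    return {d: rng.sample(frame[d], schools_per_directorate) for d in directorates}


# Hypothetical sampling frame: names and sizes are placeholders, not SSESCS data.
FRAME = {
    "directorate_A": ["school_A1", "school_A2", "school_A3"],
    "directorate_B": ["school_B1", "school_B2"],
    "directorate_C": ["school_C1", "school_C2", "school_C3", "school_C4"],
}

print(two_stage_draw(FRAME, n_directorates=2, schools_per_directorate=2, seed=2021))
```

Recording the seed alongside the frame is one simple way to let others verify that a draw was not hand-picked.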

Although the Statute of the Child and Adolescent (SCA) considers adolescence a complex process of growth and biopsychosocial development between 12 and 18 years of age, which may extend to 21 years under some conditions, and the Youth Statute classifies as young people those aged between 15 and 29 years, in this study we adopt the WHO classification, which circumscribes adolescence to the second decade of life, i.e., from 10 to 19 years of age. We do so because, in addition to chronological criteria, we consider that biological, psychological, and social factors are also important and should be valued in this conceptual approach (BRASIL, 1990, 2007, 2013).

In total, 147 students with valid answers were included in this research, a number considered sufficient for statistical processing and analysis in SPSS. The gender distribution was balanced: 53% (n = 78) of the participants were female and 47% (n = 69) male. Ages ranged from 16 to 19 years (mean = 17.37; standard deviation = 0.907), with 17- and 18-year-olds predominating, at frequencies of 45.6% (n = 67) and 25.2% (n = 37), respectively.
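The percentages above follow directly from the counts. The snippet below recomputes them from N = 147; the gender shares appear in the text rounded to whole percentages (53.1% and 46.9% become 53% and 47%). The mean and standard deviation cannot be recomputed without the individual ages, which are not reported here.

```python
N = 147  # students with valid answers

COUNTS = {"female": 78, "male": 69, "aged 17": 67, "aged 18": 37}


def share(n: int, total: int = N, digits: int = 1) -> float:
    """Percentage of the sample, rounded to the given number of decimals."""
    return round(100 * n / total, digits)


for label, n in COUNTS.items():
    print(f"{label}: {share(n)}%")
```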

Regarding the analysis of the internal consistency of the instruments, reliability is a statistical measure of the reproducibility or stability of the data collected, that is, of their consistency across time and space. Since any assessment instrument involving data collection is subject to measurement error and other biases, its accuracy must be tested through reliability techniques (PASQUALI, 2009; JESUS, 2013; ALVES, 2019).

Reliability analysis is a necessary condition for research instruments to produce consistent results about what they propose to measure, with as little bias as possible. Among the many techniques available, Cronbach's alpha coefficient is one of the most widely used, owing to its long-standing consolidation in academic and statistical circles. Accordingly, all responses collected from the 147 participants were imported into an SPSS database and tested for the homogeneity of the DVI-Q through Cronbach's alpha coefficient, which allows the internal consistency of the questions to be analyzed. This coefficient uses the profile of the respondents' answers to estimate the reliability among the questionnaire items; its values typically range between 0 and 1, and the closer to 1, the greater the reliability of the dimensions of the construct (CRONBACH, 1951; PASQUALI, 2009; SILVA, 2017).
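For readers who want to see what the coefficient computes, the usual (raw) form of Cronbach's alpha is α = k/(k − 1) · (1 − Σσᵢ²/σ_T²), where k is the number of items, σᵢ² the variance of item i, and σ_T² the variance of the total score. A minimal standard-library sketch follows; the score matrix is hypothetical. SPSS also reports a variant based on standardized items, which is the one quoted for the DVI-Q, and alpha can fall below 0 when items covary negatively, which is why the 0-to-1 range is typical rather than guaranteed.

```python
from statistics import variance


def cronbach_alpha(scores: list[list[float]]) -> float:
    """Raw Cronbach's alpha.

    scores[r][i] is the score of respondent r on item i; at least two
    respondents and two items are required.
    """
    if len(scores) < 2:
        raise ValueError("need at least two respondents")
    k = len(scores[0])
    if k < 2 or any(len(row) != k for row in scores):
        raise ValueError("need a rectangular matrix with at least two items")
    item_variances = [variance(column) for column in zip(*scores)]
    total_variance = variance([sum(row) for row in scores])
    if total_variance == 0:
        raise ValueError("total scores have zero variance")
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical answers of four respondents to three items.
example = [[1, 2, 2], [2, 3, 3], [3, 3, 4], [4, 4, 4]]
print(round(cronbach_alpha(example), 3))  # 0.953
```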

The acceptable level of this coefficient depends on the type of research conducted, but, in general, Landis and Koch (1977) classify indices from 0 to 0.20 as small or unsatisfactory consistency; 0.21 to 0.40 as reasonable; 0.41 to 0.60 as moderate; 0.61 to 0.80 as substantial; and 0.81 to 1.0 as almost perfect. More recent literature, however, indicates that values above 0.80 are considered ideal for research in the health sphere, whereas for studies in the social sphere Cronbach's alpha values above 0.60 are considered reliable (STREINER, 2003; HILL; HILL, 2012; ALVES, 2019).

To analyze the internal consistency of the DVI-Q, we first calculated Cronbach's alpha for all 24 questions together and obtained α = 0.821 (based on standardized items). This value is considered satisfactory and indicates the internal consistency of the instrument, which supports its validity in the qualitative-quantitative aspect and allows the latent variable "Indicators of Digital Vulnerability" to be created for the population studied. When we calculated the coefficient separately for each of the categories presented in Chart 1 ("Data Exposure", "Technological Dependence", "Physical/Emotional Aggravation", and "Virtual Alienation"), all of them presented satisfactory indices (α > 0.600), but consistency and reliability were higher when the categories were applied together, as shown in Table 5 below:

Table 5: Demonstration of Cronbach's alpha coefficient calculated on the DVI-Q.

| Categories | N of items | Cronbach's alpha |
|---|---|---|
| Data Exposure | 6 | 0.608 |
| Technological Dependence | 6 | 0.608 |
| Physical/Emotional Aggravation | 6 | 0.641 |
| Virtual Alienation | 6 | 0.675 |
| Complete DVI-Q | 24 | 0.821 |

Source: Elaborated by the authors (2022) with SPSS software.
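Applying the cut-offs cited in the text to Table 5 makes the reading explicit: every category clears the 0.60 threshold cited for the social sphere, and only the full 24-item scale clears 0.80. The sketch below is illustrative; the labels follow the wording of the text, and the handling of the gaps between the printed bands (for example, between 0.60 and 0.61) is an assumption.

```python
# Reliability bands as cited in the text (Landis and Koch, 1977).
# The printed bands leave gaps (e.g., between 0.60 and 0.61); treating each
# upper bound as inclusive is an assumption made for this sketch.
BANDS = (
    (0.20, "small/unsatisfactory"),
    (0.40, "reasonable"),
    (0.60, "moderate"),
    (0.80, "substantial"),
    (1.00, "almost perfect"),
)
SOCIAL_SCIENCE_MIN = 0.60  # threshold cited for the social sphere
HEALTH_IDEAL_MIN = 0.80    # threshold cited for the health sphere

# Values transcribed from Table 5.
TABLE_5 = {
    "Data Exposure": 0.608,
    "Technological Dependence": 0.608,
    "Physical/Emotional Aggravation": 0.641,
    "Virtual Alienation": 0.675,
    "Complete DVI-Q": 0.821,
}


def band(alpha: float) -> str:
    """Return the cited label for a coefficient between 0 and 1."""
    if not 0 <= alpha <= 1:
        raise ValueError("bands are defined only for values between 0 and 1")
    for upper, label in BANDS:
        if alpha <= upper:
            return label
    return BANDS[-1][1]


for name, alpha in TABLE_5.items():
    print(f"{name}: {alpha:.3f} -> {band(alpha)}; "
          f"above 0.60: {alpha > SOCIAL_SCIENCE_MIN}; above 0.80: {alpha > HEALTH_IDEAL_MIN}")
```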

Although the DVI-Q is organized into four categories of six questions each, the instrument was designed to be applied in full, with all 24 items. For this reason, the results obtained through Cronbach's alpha coefficient are considered satisfactory and consistent with what was expected, thus meeting the purpose of its development. Although the target audience of this version of the DVI-Q is adolescent high school students, the psychometric analyses suggest that, through cross-cultural adaptation processes, the instrument may also be validated for other audiences and may even be applied alongside other instruments to investigate various correlations through statistical analyses, according to the purposes of those applying it, thus contributing to new research on the use of digital technologies.
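A general property of the coefficient is relevant when comparing the full scale with its subscales: for standardized items, α = k·r̄ / (1 + (k − 1)·r̄), where r̄ is the mean inter-item correlation, so alpha grows with the number of items k even when r̄ stays the same. The sketch below illustrates this with a hypothetical r̄ of 0.2; it is not a re-analysis of the DVI-Q data.

```python
def standardized_alpha(k: int, mean_r: float) -> float:
    """Cronbach's alpha based on standardized items (Spearman-Brown form)."""
    if k < 2:
        raise ValueError("need at least two items")
    return k * mean_r / (1 + (k - 1) * mean_r)


def mean_inter_item_correlation(corr: list[list[float]]) -> float:
    """Average of the off-diagonal entries of a correlation matrix."""
    k = len(corr)
    off_diagonal = [corr[i][j] for i in range(k) for j in range(k) if i != j]
    return sum(off_diagonal) / len(off_diagonal)


# Hypothetical mean inter-item correlation, held fixed while k changes.
for k in (6, 12, 24):
    print(k, round(standardized_alpha(k, 0.2), 3))
```

With r̄ fixed at 0.2, the printed values rise from 0.6 (6 items) to 0.75 (12 items) and about 0.857 (24 items).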

Chart 4 shows the final version of the DVI-Q after all validation stages were performed: content validation, through the judges' evaluation using the Delphi method; qualitative validation, through semantic analysis with the target-audience sample; and quantitative validation, resulting from the pilot application and the statistical analyses of internal consistency and reliability.

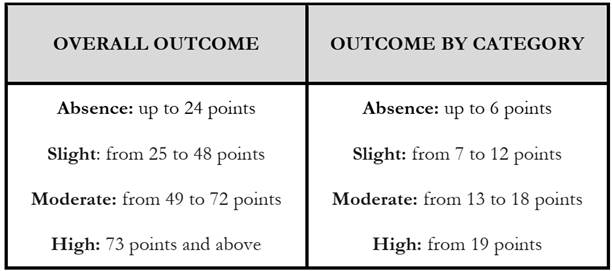

Regarding the analytical and interpretative possibilities of the DVI-Q in light of the literature that guided its preparation, we established that, once the instrument has been answered, results can be reported in two ways according to the score obtained, "general" and "by category", with the following levels of digital vulnerability indicators: absence of vulnerability; mild vulnerability; moderate vulnerability; and high vulnerability. Chart 5 below presents the classification of these levels according to the respondent's score.

Chart 5: Classification of digital vulnerability indicator levels by DVI-Q score.

Source: Alves (2023, p. 134).

For dissemination purposes, Figure 3 below provides a QR Code giving access to the prototype of the digital version of the DVI-Q, which is compatible with different interfaces and digital devices; this version will soon be improved and linked to a database so that it can be used in new research.

Figure 3: QR Code to access the digital version of the DVI-Q⁴ on different devices.

Source: Elaborated by the authors (2022).

Analyzing the results of the DVI-Q both as a whole and by category allows a broader, more qualified, and more systematic interpretation. For example, a student may not show an alarming level of digital vulnerability in the general score of the questionnaire, yet the results of each category may reveal that the same student is vulnerable in some of them, which allows the teacher to intervene educationally with strategies aimed at the conscious and responsible use of technologies.
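The two reading modes can be sketched as follows. Chart 5's actual score ranges are not reproduced in the text, so every cut-off below is a hypothetical placeholder, as are the assumptions that each item is scored from 1 to 4 and that item scores are summed. The example only illustrates how a mild general level can coexist with a high level in one category.

```python
from typing import Dict, List, Sequence

LEVELS = (
    "absence of vulnerability",
    "mild vulnerability",
    "moderate vulnerability",
    "high vulnerability",
)


def classify(score: float, cutoffs: Sequence[float]) -> str:
    """Map a score to a level; cutoffs are the upper bounds of the first three levels."""
    if len(cutoffs) != 3 or list(cutoffs) != sorted(cutoffs):
        raise ValueError("expected three ascending cut-offs")
    for level, upper in zip(LEVELS, cutoffs):
        if score <= upper:
            return level
    return LEVELS[-1]


def interpret(
    answers: Dict[str, List[int]],
    general_cutoffs: Sequence[float],
    category_cutoffs: Sequence[float],
) -> Dict[str, str]:
    """Classify the general score and each category score separately."""
    category_scores = {cat: sum(items) for cat, items in answers.items()}
    result = {"general": classify(sum(category_scores.values()), general_cutoffs)}
    for cat, score in category_scores.items():
        result[cat] = classify(score, category_cutoffs)
    return result


# HYPOTHETICAL cut-offs and answers (items assumed scored 1-4, six per category).
GENERAL_CUTOFFS = (36, 56, 76)  # placeholder, not the Chart 5 values
CATEGORY_CUTOFFS = (9, 14, 19)  # placeholder, not the Chart 5 values
student = {
    "Data Exposure": [4, 4, 4, 4, 4, 3],
    "Technological Dependence": [1, 1, 1, 1, 1, 1],
    "Physical/Emotional Aggravation": [1, 1, 1, 1, 1, 1],
    "Virtual Alienation": [2, 1, 1, 1, 1, 1],
}
print(interpret(student, GENERAL_CUTOFFS, CATEGORY_CUTOFFS))
```

Here the general score of 42 falls in the placeholder "mild" range, while the Data Exposure score of 23 falls in the placeholder "high" range.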

CONSIDERATIONS

Technologies play a fundamental role in people's lives, especially in forms of communication and the rapid dissemination of information, but for these and other benefits to be consolidated, users need to use them in an ethical, qualified, and reflective way in different social practices.

The development and validation of the DVI-Q were not intended to oppose technological advances. On the contrary, precisely because we recognize and value the important benefits that technologies have brought to humanity, especially in education, health, science, and other dimensions, as well as the sharp increase in the number of people immersed in cyberspace, we consider it relevant to also investigate possible negative aspects of the inappropriate use of technological resources, in the form of indicators of digital vulnerability among adolescents. We believe that this instrument is highly relevant to the educational field, since it can help broaden debates and reflections on how teachers' digital literacy is increasingly necessary for them to guide students and promote the conscious and healthy use of digital technologies.

In this context, we emphasize that the elaboration of the DVI-Q required a long and systematic process, grounded in theory and in methodological steps consistent with the parameters of the scientific literature on questionnaire construction and validation. Excellent results were obtained in the content validation of this instrument, with a CVI above 0.80 in all questions and categories after the second round of evaluation, and its reliability and consistency were subsequently confirmed through the pilot application and statistical tests, specifically a Cronbach's alpha coefficient of 0.821.

Although content validation is essential to investigate an instrument's ability to accurately measure the phenomenon under study, and to develop new measures that link abstract concepts to observable indicators, this step may not be permanent, since the context may change over time. Thus, the theoretical procedures for constructing measurement instruments are not always finalized after the judges' evaluation, which highlights the need to keep improving the DVI-Q with new statistical and empirical tests so that it can be applied to other populations and become established as a reference for future research on the theme.

Finally, we reaffirm that the DVI-Q will contribute to reflection on and analysis of the digital culture of young Basic Education students, not only regarding the conscious and healthy use of technologies, but also the development of a critical sense to decode and understand the different media languages conveyed and shared in cyberspace.

REFERENCES

ALEXANDRE, N. M. C.; COLUCI, M. Z. O. Validade de conteúdo nos processos de construção e adaptação de instrumentos de medidas. Ciência & Saúde Coletiva, v. 16, n. 7, p. 3061-3068, 2011. Disponível em:<Disponível em:https://www.scielo.br/pdf/csc/v16n7/06.pdf >. Acesso em:03/07/2018. [ Links ]

ALVES, M. M. S. Tecnologias móveis para formação docente: validação de um instrumento de identificação de vulnerabilidade digital. 2023. 219p. Tese (Doutorado em Educação) - Universidade Federal de Sergipe, São Cristóvão, 2023. [ Links ]

ALVES, M. M. S. Vulnerabilidade às IST/AIDS: desenvolvimento e validação de um instrumento de avaliação inspirado nas questões sociocientíficas. 2019. 217p. Dissertação (Mestrado em Ensino de Ciências e Matemática) - Universidade Federal de Sergipe, São Cristóvão, SE, 2019. [ Links ]

ANTUNES, M. M. Técnica Delphi: metodologia para pesquisas em educação no Brasil. Rev. Educação PUC-Camp ., v. 19, n. 1, p. 63-71, jan./abr. 2014. Disponível em:<Disponível em:http://periodicos.puc-campinas.edu.br/seer/index.php/reveducacao/article/view/2616 >. Acesso em:17/02/2020. [ Links ]

AYRES, J. R. C. M.; FRANÇA JÚNIOR, I.; CALAZANS, G. J.; SALETTI FILHO, H. C. O conceito de vulnerabilidade e as práticas de saúde: novas perspectivas e desafios. In: CZERESNIA, D.; FREITAS, C. M. (Orgs.). Promoção da saúde: conceitos, reflexões, tendências. Rio de Janeiro: FIOCRUZ, 2003. p. 117-139. [ Links ]

AYRES, J. R. C. M.; PAIVA, V.; FRANÇA JÚNIOR, I. Conceitos e práticas de prevenção: da história natural da doença ao quadro da vulnerabilidade e direitos humanos. In: PAIVA, V.; AYRES, J. R.; BUCHALLA, C. M. Vulnerabilidade e direitos humanos. Curitiba: Editora Juruá, 2012. p. 71-94. [ Links ]

BARBETTA, P. A. Estatística Aplicada às Ciências Sociais. 7. ed.Florianópolis: Ed. da UFSC, 2007. 315p. [ Links ]

BIERNACKI, P.; WALDORF, D. Snowball sampling: problems and techniques of chain referral sampling. Sociological Methods & Research, Thousand Oaks, CA, v. 10, n. 2, 1981. Disponível em:<Disponível em:http://smr.sagepub.com/content/10/2/141.abstract >. Acesso em:11/03/2021. [ Links ]

BITTENCOURT, H. R.; CREUTZBERG, M.; RODRIGUES, A. C. M.; CASARTELLI A. O.; FREITAS, A. L. S. Desenvolvimento e validação de um instrumento para avaliação de disciplinas na educação superior. Est. Aval. Educ. [on-line], v. 22, n. 48, p. 91-113, 2011. Disponível em:<Disponível em:http://educa.fcc.org.br/pdf/eae/v22n48/v22n48a06.pdf >. Acesso em:05/04/2020. [ Links ]

BRASIL. Base Nacional Comum Curricular: Educação Infantil, Ensino Fundamental e Ensino Médio. Brasília: MEC/Secretaria de Educação Básica, 2018. Disponível em:<Disponível em:http://basenacionalcomum.mec.gov.br/images/BNCC_EI_EF_110518_versaofinal_site.pdf >. Acesso em:11/04/2021. [ Links ]

BRASIL. Ministério da Saúde. Marco legal: saúde, um direito de adolescentes. Secretaria de Atenção à Saúde, Área de Saúde do Adolescente e do Jovem. Brasília: Editora do Ministério da Saúde, 2007. Available at: <https://bvsms.saude.gov.br/bvs/publicacoes/07_0400_M.pdf>. Accessed on: 13/10/2022.

BRASIL. Lei nº 8.069, de 13 de julho de 1990. Estatuto da Criança e do Adolescente. Brasília: Diário Oficial da União, 1990.

BRASIL. Lei nº 12.852, de 05 de agosto de 2013. Estatuto da Juventude. Brasília: Diário Oficial da União, 2013.

COLUCI, M. Z. O.; ALEXANDRE, N. M. C.; MILANI, D. Construção de instrumentos de medida na área da saúde. Ciência & Saúde Coletiva, v. 20, n. 3, p. 925-936, 2015. Available at: <https://www.scielo.br/pdf/csc/v20n3/1413-8123-csc-20-03-00925.pdf>. Accessed on: 05/04/2020.

CONTI, N. A.; JARDIM, A. P.; HEARST, N.; CORDÁS, T. A.; TAVARES, H.; ABREU, C. N. Avaliação da equivalência semântica e consistência interna de uma versão em português do Internet Addiction Test (IAT). Rev. Psiq. Clín., v. 39, n. 3, p. 106-110, 2012. Available at: <https://www.scielo.br/scielo.php?script=sci_arttext&pid=S0101-60832012000300007>. Accessed on: 11/11/2021.

CRONBACH, L. J. Coefficient alpha and the internal structure of tests. Psychometrika, v. 16, n. 3, p. 297-334, 1951.

CUNHA, C. O desempenho escolar em ciências e o pluralismo epistemológico: a elaboração de questões do eixo temático “vida e ambiente”. 2015. 115 p. Master's thesis (Science and Mathematics Teaching) - Universidade Federal de Sergipe, São Cristóvão, SE, 2015.

DeVON, H. A.; BLOCK, M. E.; MOYLE-WRIGHT, P.; ERNST, D. M.; HAYDEN, S. J.; LAZZARA, D. J.; SAVOY, S. M.; KOSTAS-POLSTON, E. A psychometric toolbox for testing validity and reliability. J. Nurs. Scholarsh., v. 39, n. 2, p. 155-164, 2007. Available at: <https://sigmapubs.onlinelibrary.wiley.com/doi/abs/10.1111/j.1547-5069.2007.00161.x>. Accessed on: 05/11/2020.

FLICK, U. Introdução à pesquisa qualitativa. Translated by Joice Elias Costa. 3. ed. Porto Alegre: Artmed, 2009. 405 p.

GARCÍA, V. M.; SUÁREZ, M. M. Delphi method for the expert consultation in the scientific research. Rev. Cub. Salud Pública, v. 39, n. 2, p. 253-267, 2013. Available at: <https://www.medigraphic.com/pdfs/revcubsalpub/csp-2013/csp132g.pdf>. Accessed on: 03/07/2020.

GONZÁLEZ-CABRERA, J.; LEÓN-MEJÍA, A.; PÉREZ-SANCHO, C.; CALVETE, E. Adaptation of the Nomophobia Questionnaire (NMP-Q) to Spanish in a sample of adolescents. Actas Españolas de Psiquiatría, v. 45, n. 4, p. 137-144, 2017. Available at: <https://pubmed.ncbi.nlm.nih.gov/28745386/>. Accessed on: 21/01/2020.

HILL, M. M.; HILL, A. Investigação por questionário. 2. ed. Lisboa: Sílabo, 2012.

JESUS, E. M. S. Desenvolvimento e validação de conteúdo de um instrumento para avaliação da assistência farmacêutica em hospitais de Sergipe. 2013. 152 p. Master's thesis (Pharmaceutical Sciences) - Universidade Federal de Sergipe, São Cristóvão, SE, 2013.

KING, A. L. S.; NARDI, A. E.; GUEDES, P.; PÁDUA, M. S. K. L. Livro de escalas delete: detox digital e uso consciente de tecnologias. Rio de Janeiro: Barra Livros, 2020. 154 p.

KING, A. L. S.; NARDI, A. E.; SILVA, A. C. Nomofobia: dependência do computador, internet, redes sociais? Dependência do telefone celular? O impacto das novas tecnologias no cotidiano dos indivíduos. 1. ed. São Paulo: Atheneu, 2014. 328 p.

KING, A. L. S.; VALENÇA, A. M.; NARDI, A. E. Nomophobia: the mobile phone in panic disorder with agoraphobia: reducing phobias or worsening of dependence? Cognitive and Behavioral Neurology, v. 23, n. 1, p. 52-54, 2010. Available at: <https://journals.lww.com/cogbehavneurol/Abstract/2010/03000/Nomophobia__The_Mobile_Phone_in_Panic_Disorder.10.aspx>. Accessed on: 05/11/2019.

KOSOWSKI, T. R.; McCARTHY, C.; REAVEY, P. L.; SCOTT, A. M.; WILKINS, E. G.; CANO, S. J.; KLASSEN, A. F.; CARR, N.; CORDEIRO, P. G.; PUSIC, A. L. A systematic review of patient-reported outcome measures after facial cosmetic surgery and/or nonsurgical facial rejuvenation. Plast. Reconstr. Surg., v. 123, n. 6, p. 1819-1827, Jun. 2009. Available at: <https://pubmed.ncbi.nlm.nih.gov/19483584/>. Accessed on: 17/01/2020.

LEE, S.; KIM, M. W.; McDONOUGH, I. M.; MENDOZA, J. S.; KIM, M. S. The effects of cell phone use and emotion-regulation style on college students’ learning. Appl. Cognit. Psychol., 2017. Available at: <https://onlinelibrary.wiley.com/doi/abs/10.1002/acp.3323>. Accessed on: 21/01/2021.

LOUREIRO, D. F.; GALHARDO, A. O. Desenvolvimento da Versão Portuguesa do Questionário de Nomofobia (NMP-Q-PT): estudo da estrutura fatorial e propriedades psicométricas. Master's thesis (Clinical Psychology) - Instituto Superior Miguel Torga, Coimbra, Oct. 2018.

MARQUES, J. B. V.; FREITAS, D. de. Método DELPHI: caracterização e potencialidades na pesquisa em Educação. Pro-Posições, v. 29, n. 2, p. 389-415, May/Aug. 2018. Available at: <https://www.scielo.br/pdf/pp/v29n2/0103-7307-pp-29-2-0389.pdf>. Accessed on: 11/01/2019.

MATOS, F. R.; ROSSINI, J. C.; LOPES, R. F. F.; AMARAL, J. Dee H. F. Tradução, adaptação e evidências de validade de conteúdo do Schema Mode Inventory. Psicologia: Teoria e Prática, São Paulo, v. 22, n. 2, p. 18-38, May/Aug. 2020. Available at: <https://www.scielo.br/pdf/csc/v16n7/06.pdf>. Accessed on: 03/02/2021.

PAGAN, A. A.; TOLENTINO-NETO, L. C. B. Desempenho escolar inclusivo. 1. ed. Curitiba, PR: CRV, 2015. 222 p.

PASQUALI, L. Psicometria. Rev. Esc. Enferm. USP, v. 43 (Esp.), p. 992-999, 2009. Available at: <https://www.scielo.br/j/reeusp/a/Bbp7hnp8TNmBCWhc7vjbXgm/?format=pdf&lang=pt>. Accessed on: 11/03/2021.

PIRES, D. A.; BRANDÃO, M. R. F.; SILVA, C. B. Validação do questionário de burnout para atletas. R. da Educação Física/UEM, Maringá, v. 17, n. 1, p. 27-36, 2006. Available at: <https://periodicos.uem.br/ojs/article>. Accessed on: 11/11/2020.

POLIT, D. F.; BECK, C. T. The content validity index: are you sure you know what’s being reported? Critique and recommendations. Res. Nurs. Health, v. 29, n. 5, p. 489-497, 2006. Available at: <https://onlinelibrary.wiley.com/doi/abs/10.1002/nur.20147>. Accessed on: 03/07/2020.

POWELL, C. The Delphi technique: myths and realities. Journal of Advanced Nursing, v. 41, n. 4, p. 376-382, 2003. Available at: <https://onlinelibrary.wiley.com/doi/abs/10.1046/j.1365-2648.2003.02537.x>. Accessed on: 03/07/2020.

REVORÊDO, L. S.; DANTAS, M. M.; MAIA, R. S.; TORRES, G. V.; MAIA, E. M. Validação de conteúdo de um instrumento para identificação de violência contra criança. Acta Paulista de Enfermagem, v. 29, n. 2, p. 205-217, 2016. Available at: <https://www.scielo.br/pdf/ape/v29n2/1982-0194-ape-29-02-0205.pdf>. Accessed on: 03/07/2020.

RIBEIRO, J. C.; LEITE, L.; SOUSA, S. Notas sobre aspectos sociais presentes no uso das tecnologias comunicacionais móveis contemporâneas. In: NASCIMENTO, A. D.; HETKOWSKI, T. M. (Eds.). Educação e contemporaneidade: pesquisas científicas e tecnológicas [online]. Salvador: EDUFBA, 2009. p. 186-201. Available at: <https://books.scielo.org/id/jc8w4/pdf/nascimento-9788523208721-09.pdf>. Accessed on: 17/04/2020.

ROWE, G.; WRIGHT, G. The Delphi technique as a forecasting tool: issues and analysis. International Journal of Forecasting, v. 15, p. 353-375, 1999. Available at: <https://www.sciencedirect.com/science/article/abs/pii/S0169207099000187>. Accessed on: 03/07/2020.

SENADOR, A. Nomofobia 2.0 e outros excessos na era dos relacionamentos digitais. São Paulo: Aberje, 2018. 160 p.

SILVA, C. A. Transtornos da dependência de internet. Kindle edition. 2017. 114 p.

SILVA, J. B. et al. Validação de um manual de cuidados fisioterapêuticos no pós-parto para puérperas. Rev. Ciênc. Ext., v. 16, p. 209-222, 2021. Available at: <https://ojs.unesp.br/index.php/revista_proex/article/view/3317>. Accessed on: 23/01/2022.

SOUZA, A. C. de; ALEXANDRE, N. M. C.; GUIRARDELLO, E. B. Propriedades psicométricas na avaliação de instrumentos: avaliação da confiabilidade e da validade. Epidemiol. Serv. Saude, Brasília, v. 26, n. 3, p. 649-659, Jul./Sep. 2017. Available at: <http://scielo.iec.gov.br/pdf/ess/v26n3/2237-9622-ess-26-03-00649.pdf>. Accessed on: 05/04/2020.

STREINER, D. L. Being inconsistent about consistency: when coefficient alpha does and doesn't matter. Journal of Personality Assessment, v. 80, p. 217-222, 2003. Available at: <https://pubmed.ncbi.nlm.nih.gov/12763696/>. Accessed on: 03/05/2021.

TUMELEIRO, L. F.; COSTA, A. B.; HALMENSCHLAGER, G. D.; GARLET, M.; SCHMITT, J. Dependência de internet: um estudo com jovens do último ano do ensino médio. Gerais, Rev. Interinst. Psicologia, Belo Horizonte, v. 11, n. 2, Jul./Dec. 2018. Available at: <http://pepsic.bvsalud.org/pdf/gerais/v11n2/07.pdf>. Accessed on: 11/04/2021.

WESTMORELAND, D.; WESORICK, B.; HANSON, D.; WYNGARDEN, K. Consensual validation of clinical practice model guidelines. J. Nurs. Care Quality, v. 14, n. 4, p. 16-27, 2000. Available at: <https://journals.lww.com/jncqjournal/Abstract/2000/07000/Consensual_Validation_of_Clinical_Practice_Model.5.aspx>. Accessed on: 21/01/2019.

WIDYANTO, L.; McMURRAN, M. The psychometric properties of the internet addiction test. Cyberpsychol. Behav., v. 7, n. 4, p. 443-450, 2004. Available at: <https://www.liebertpub.com/doi/10.1089/cpb.2004.7.443>. Accessed on: 11/11/2020.

YILDIRIM, C.; CORREIA, A. Exploring the dimensions of nomophobia: development and validation of a self-reported questionnaire. Computers in Human Behavior, v. 49, p. 130-137, 2015. Available at: <https://www.sciencedirect.com/science/article/abs/pii/S0747563215001806?via%3Dihub>. Accessed on: 21/01/2021.

YOUNG, K. S. Internet addiction: the emergence of a new clinical disorder. Cyberpsychol. Behav., v. 1, n. 3, p. 237-244, 1998. Available at: <https://www.liebertpub.com/doi/pdf/10.1089/cpb.1998.1.237>. Accessed on: 05/11/2019.

1 The translation of this article into English was funded by the Fundação de Amparo à Pesquisa do Estado de Minas Gerais (FAPEMIG) through its program supporting the publication of institutional scientific journals.

2 The Reference Matrix of the final version of the DVI-Q, together with the descriptors established for each question, is organized by category and available at: https://drive.google.com/file/d/1_NEYpLSSVNVBgqnZtefVQchiGzdpitHj/view?usp=sharing.

DECLARATION OF CONFLICT OF INTEREST

Received: May 19, 2022; preprint: May 18, 2022; Accepted: May 03, 2023
